


Radeon RX 7800 XT Based on New ASIC with Navi 31 GCD on Navi 32 Package?

- Thread starter: btarunr

- Joined: Sep 17, 2014
- Messages: 21,210 (5.98/day)
- Location: The Washing Machine

| Processor | i7 8700k 4.6Ghz @ 1.24V |
|---|---|
| Motherboard | AsRock Fatal1ty K6 Z370 |
| Cooling | beQuiet! Dark Rock Pro 3 |
| Memory | 16GB Corsair Vengeance LPX 3200/C16 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Samsung 850 EVO 1TB + Samsung 830 256GB + Crucial BX100 250GB + Toshiba 1TB HDD |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Fractal Design Define R5 |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | XTRFY M42 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| Software | W10 x64 |

This is the thing. RDNA2 as well... I cannot for the life of me understand why they didn't get more out of that. I cannot understand why they aren't presenting the full value story ('while still doing 99% of all the things nicely'); instead we get random reactive blurbs about VRAM being important. At the same time, I guess with the misfires they keep having on GPU launches, what marketing can you really build on that? The chances of looking absolutely stupid are immense. 'Poor Volta'... the best marketing stunt in years, eh?

@Vayra86 I agree with most of your post. I was screaming about RT performance and was called an Nvidia shill back then. With the 7900XTX offering 3090/Ti RT performance, how could someone be negative about that? How can 3090/Ti performance be bad today when it was a dream yesterday?

The answer is marketing. I believe people buy RTX 3050 cards over RX 6600 because of all that RT fuss.

I mean really, I'm looking at a 7900XT and I have yet to find fault with it in actual usage. It's virtually the same experience as an Nvidia card, and in terms of settings/GUI it's a bit better. No GeForce Experience nagging you; instead, a properly functional and complete package containing all the stuff you want. In games, I see a GPU that boosts and clocks dynamically, isn't freaking out over random stuff thrown at it, and just does what it must do, while murdering any game I throw at it.

Did we hear about a new package for the Navi 31 die anywhere else before Tom leaked it?

Average MLID leak.

"Supposed bug fix" already?

So there is no Navi 32 GCD with the supposed bug fixes; I guess AMD would like to skip this gen and move on (while putting RDOA3 on fire sale).

And where is a "bug" in the first place?

In your mind?

I have a reference 7900XTX and play on an LG 4K/120Hz OLED TV with VRR over HDMI.

Though I am curious what these clock "bugs" are, even when it comes to problems it seems like Navi 31 has more relevant concerns that need to be solved first.

Gameplay is smooth and 10-bit images are fantastic.

Is there anything wrong with my card? I keep hearing that Navi 31 has 'issues'. I can't see them. Is anyone able to enlighten me?

"Fail to offer better performance over RDNA2..." What kind of nonsense is this?

I haven't spent much time reading about AMD's older Navi 32, but with RDNA3's failure to offer (really) better performance and better efficiency over RDNA2 at equivalent specs, the specs of the original Navi 32 were looking like a sure fail compared to Navi 21 and 22. Meaning we could get a 7800 at the performance level of, or even slower than, the 6800XT or even the plain 6800, and 7700 models slower than or at the same performance level as the 67X0 cards. I think the above rumors do hint that this could be the case. So either AMD threw the original Navi 32 into the dustbin and we are waiting for mid-range Navi cards because AMD had to build a new chip, or Navi 32 still exists and will be used for the 7700 series while this new one will be used for 7800 series models.

Just random thoughts ...

The 7900XTX is 50% faster at 4K than the 6900XT. To be 50% faster, it needed 20% more CUs. All that for exactly the same price as in November 2020, not adjusted for inflation, so the card is effectively even cheaper despite the same nominal price.

Where is the alleged "failure" in any of this?
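The arithmetic in that argument can be made explicit. The sketch below is a rough illustration only: it assumes 96 CUs for the 7900XTX and 80 for the 6900XT (which matches the post's "20% more") plus the post's 50% 4K figure, and it lumps clock speed, memory bandwidth and architectural changes together into a single per-CU number. The function name is hypothetical.

```python
def per_cu_uplift(perf_ratio: float, cu_ratio: float) -> float:
    """Return the fractional performance gain per compute unit (CU).

    perf_ratio: new card's performance / old card's (1.5 means 50% faster).
    cu_ratio:   new card's CU count / old card's CU count.
    """
    return perf_ratio / cu_ratio - 1.0


# Figures from the post: 50% faster at 4K with 20% more CUs
# (assumed 96 CUs on the 7900XTX vs 80 CUs on the 6900XT).
uplift = per_cu_uplift(perf_ratio=1.50, cu_ratio=96 / 80)
print(f"Performance per CU: {uplift:+.0%}")  # prints "Performance per CU: +25%"
```

Read this way, each CU delivers roughly a quarter more performance, though that figure includes the clock and bandwidth gains, not just the architecture.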


- Joined: Sep 6, 2013
- Messages: 3,050 (0.78/day)
- Location: Athens, Greece

| System Name | 3 desktop systems: Gaming / Internet / HTPC |
|---|---|
| Processor | Ryzen 5 5500 / Ryzen 5 4600G / FX 6300 (12 years later got to see how bad Bulldozer is) |
| Motherboard | MSI X470 Gaming Plus Max (1) / MSI X470 Gaming Plus Max (2) / Gigabyte GA-990XA-UD3 |
| Cooling | Noctua U12S / Segotep T4 / Snowman M-T6 |
| Memory | 16GB G.Skill RIPJAWS 3600 / 16GB G.Skill Aegis 3200 / 16GB Kingston 2400MHz (DDR3) |
| Video Card(s) | ASRock RX 6600 + GT 710 (PhysX) / Vega 7 integrated / Radeon RX 580 |
| Storage | NVMes, NVMes everywhere / NVMes, more NVMes / Various storage, SATA SSD mostly |
| Display(s) | Philips 43PUS8857/12 UHD TV (120Hz, HDR, FreeSync Premium) / 19'' HP monitor + BlitzWolf BW-V5 |
| Case | Sharkoon Rebel 12 / Sharkoon Rebel 9 / Xigmatek Midguard |
| Audio Device(s) | onboard |
| Power Supply | Chieftec 850W / Silver Power 400W / Sharkoon 650W |
| Mouse | CoolerMaster Devastator III Plus / Coolermaster Devastator / Logitech |
| Keyboard | CoolerMaster Devastator III Plus / Coolermaster Devastator / Logitech |
| Software | Windows 10 / Windows 10 / Windows 7 |

Your OPINION is that my OPINION is wrong.

It doesn't matter what you're a fan of. The opinion you keep reiterating is flat out wrong.

First time in a forum?

Igor's Lab recently tested the Pro W7800 to simulate the possible performance of a future 7800XT with 70 CUs. It was up to 12% faster than the 6800XT. Treat it as a very rough attempt.

There is nothing that tells us what the performance of the 7800XT will be.

- Joined: Sep 6, 2013
- Messages: 3,050 (0.78/day)
- Location: Athens, Greece


Keep reading the thread. I posted more nonsense for you to enjoy.

What kind of nonsense is this?

- Joined: Jun 2, 2017
- Messages: 8,122 (3.18/day)

| System Name | Best AMD Computer |
|---|---|
| Processor | AMD 7900X3D |
| Motherboard | Asus X670E E Strix |
| Cooling | In Win SR36 |
| Memory | GSKILL DDR5 32GB 5200 30 |
| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |
| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |
| Display(s) | GIGABYTE FV43U |
| Case | Corsair 7000D Airflow |
| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |
| Power Supply | Deepcool 1000M |
| Mouse | Logitech g7 gaming mouse |
| Keyboard | Logitech G510 |
| Software | Windows 11 Pro 64, Steam, GOG, Uplay, Origin |
| Benchmark Scores | Firestrike: 46183, Time Spy: 25121 |

Yes, but we do not know the actual specs that it will have.

Igor's Lab recently tested the Pro W7800 to simulate the possible performance of a future 7800XT with 70 CUs. It was up to 12% faster than the 6800XT. Treat it as a very rough attempt.

We know this. You are telling us 'news' that water is wet... No reason to repeat what we know and what is on their list for the driver update.

This is from the original TechPowerUp review, but I think it still remains a problem even today.

Besides, anyone can try to fix this at home by lowering the refresh rate by 1Hz.

Your OPINION is that my OPINION is wrong.

First time in a forum?

So because a random armchair enthusiast says there are no efficiency and/or performance gains from RDNA2 to RDNA3, we should just completely ignore the objective facts presented to us? Legitimately tested data on the exact site you're making up facts on literally proves your opinion false. There is nothing else to say.

It doesn't really matter. The 7800XT will have at least 70 CUs, if not a few more. So, if lucky, it will reach performance close to the 6950XT.

Yes, but we do not know the actual specs that it will have.

- Joined: Sep 6, 2013
- Messages: 3,050 (0.78/day)
- Location: Athens, Greece


Oh, didn't know water is wet. So maybe we should close the forum section of this site, now that we know water is wet.

We know this. You are telling us 'news' that water is wet... No reason to repeat what we know and what is on their list for the driver update.

Besides, anyone can try to fix this at home by lowering the refresh rate by 1Hz.

So, first time in a forum.

So because a random armchair enthusiast says there are no efficiency and/or performance gains from RDNA2 to RDNA3, we should just completely ignore the objective facts presented to us? Legitimately tested data on the exact site you're making up facts on literally proves your opinion false. There is nothing else to say.

You jump into this thread, which is now on its 5th page, with probably ABSOLUTELY NO knowledge of what was written after page 1, and instead of hitting the brakes and telling yourself "wait, let's just see how things progressed in this thread", you keep playing the same song.

This is beyond boring. Find someone else to vent on.

- Joined: Jun 24, 2015
- Messages: 7,788 (2.39/day)
- Location: Western Canada

| System Name | ab┃ob |
|---|---|
| Processor | 7800X3D┃5800X3D |
| Motherboard | B650E PG-ITX┃X570 Impact |
| Cooling | NH-U12A + T30┃AXP120-x67 |
| Memory | 64GB 6400CL32┃32GB 3600CL14 |
| Video Card(s) | RTX 4070 Ti Eagle┃RTX A2000 |
| Storage | 8TB of SSDs┃1TB SN550 |
| Case | Caselabs S3┃Lazer3D HT5 |

If you were looking as hard as you did for issues and only came up with the three I just read, I wouldn't call that a shocking amount relative to Intel and Nvidia GPUs, but that's me. Fan stopping really seemed to bother you, plus multi-monitor idle above all else; one of those doesn't even register on my radar most of the time. As for stuttering, I don't use your setup, you do, and some of that stuttering was down to your personal setup, i.e. cables. Glad you got it sorted by removing the card entirely, but for many other users, silent ones and some vocal, few of your issues applied.

And more importantly, this is made by the same people, but it isn't your card, so I think your issues might not apply to this rumour personally.

Been thinking about going back, actually. The Pulse was not out when I had mine, and it has seen some good prices lately. Not out of discontent with my current card, just impulsive and ill-advised curiosity, I guess. Maybe curiosity to see if they've done anything about the various VRAM behaviours.

The multi-monitor VRAM fluctuation is no longer on the latest release's known-issues list, but it isn't listed as fixed either, so I'm not sure whether they forgot to write it or only add it under issues later, once it's verified to still exist.

AMD clearly cares and can achieve some excellent power optimization (Rembrandt and Phoenix), but struggles when chiplets are involved. It's no easy task, to be clear, but they did advertise the fanout link as being extremely efficient and capable of aggressive power management when Navi 31 came out. Frustrating, because GCD power management is clearly outstanding, only to be squandered twice over by the MCDs and fanout link.

- Joined: Feb 18, 2005
- Messages: 5,351 (0.76/day)
- Location: Ikenai borderline!

| System Name | Firelance. |
|---|---|
| Processor | Threadripper 3960X |
| Motherboard | ROG Strix TRX40-E Gaming |
| Cooling | IceGem 360 + 6x Arctic Cooling P12 |
| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |
| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |
| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |
| Display(s) | 3x AOC Q32E2N (32" 2560x1440 75Hz) |
| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |
| Power Supply | Fractal Design Ion+ 2 Platinum 760W |
| Mouse | Logitech G602 |
| Keyboard | Logitech G613 |
| Software | Windows 10 Professional x64 |

Guess it goes to show that nerds can agree about something sometimes.

Unfortunately I have to agree with @Assimilator in his above comment. And I am mentioning Assimilator here because he knows my opinion about him. Simply put, he is on my ignore list!

But his comment here is correct.

- Joined: Feb 3, 2017
- Messages: 3,530 (1.32/day)

| Processor | R5 5600X |
|---|---|
| Motherboard | ASUS ROG STRIX B550-I GAMING |
| Cooling | Alpenföhn Black Ridge |
| Memory | 2*16GB DDR4-2666 VLP @3800 |
| Video Card(s) | EVGA Geforce RTX 3080 XC3 |
| Storage | 1TB Samsung 970 Pro, 2TB Intel 660p |
| Display(s) | ASUS PG279Q, Eizo EV2736W |
| Case | Dan Cases A4-SFX |
| Power Supply | Corsair SF600 |
| Mouse | Corsair Ironclaw Wireless RGB |
| Keyboard | Corsair K60 |
| VR HMD | HTC Vive |

They work in a similar way. A shader is a shader, and the differences in the big picture are minor. There are differences in organization and a bunch of add-on functionality, but unless there is a clear bottleneck somewhere in that, again, in the big picture of performance it does not really matter.

It's an invalid comparison because they aren't the same architecture or work in a similar way. Remember back in the Fermi vs. TeraScale days, the GF100/GTX 480 GPU had 480 shaders (512 really, but that config never shipped) while a Cypress XT/HD 5870 had 1600... Nor can you go by the transistor count estimate, because the Nvidia chip has several features that consume die area, such as tensor cores, an integrated memory controller and on-die cache, that the Navi 31 design does not (with L3 and IMCs offloaded onto the MCDs and the GCD focusing strictly on graphics and the other SIPP blocks). It's a radically different approach to GPU design that each company has taken this time around, so I don't think it's "excusable" that the Radeon has fewer compute units, because that's an arbitrary number (to some extent).

RDNA3 and Ada are closer in many aspects than quite a few previous generations were. Nvidia went for a 5nm-class manufacturing process for Ada, and RDNA3 uses the same. RDNA3 doubled up on compute resources similarly to what Nvidia did in Turing/Ampere. Nvidia went with a large LLC and narrower memory buses similarly to what AMD did in RDNA2.

Fermi vs. TeraScale were different times, with the DX11/DX12 transition on top of that. Off the top of my head, those 480 shaders in the GTX 480 were running at twice the clock rate of the rest of the GPU. Wasn't TeraScale plagued by occupancy problems due to its VLIW approach, making those shaders perform much slower than their theoretical compute capabilities? The drawbacks present back then have been figured out for quite a while now.

Transistor counts, by and large, still follow the shader count and, in recent times, the large cache. Other parts, even significant ones like Tensor cores, are comparatively small.

The RDNA3 chiplet design is not a radically different approach. It is clever and should be good for yields (= cost), but there really was no radical breakthrough here.

What would that case be?

If you ask me, I would make a case for the N31 GCD being technically a more complex design than the portion responsible for graphics in AD102.

RT has become (much) more relevant, and RDNA3's competitor is not Ampere.

With the 7900XTX offering 3090/Ti RT performance, how could someone be negative about that? How can 3090/Ti performance be bad today when it was a dream yesterday?

Also, while the 7900XTX offers 3090 Ti RT performance, its raster performance is a good 20% faster...


- Joined: Apr 30, 2020
- Messages: 868 (0.58/day)

| System Name | S.L.I + RTX research rig |
|---|---|
| Processor | Ryzen 7 5800X 3D |
| Motherboard | MSI MEG ACE X570 |
| Cooling | Corsair H150i Cappellx |
| Memory | Corsair Vengeance pro RGB 3200mhz 16GB |
| Video Card(s) | 2x Dell RTX 2080 Ti in S.L.I |
| Storage | Western digital SATA 6.0 SSD 500gb + fanxiang S660 4TB PCIe 4.0 NVMe M.2 |
| Display(s) | HP X24i |
| Case | Corsair 7000D Airflow |
| Power Supply | EVGA G+ 1600watts |
| Mouse | Corsair Scimitar |
| Keyboard | Corsair K55 Pro RGB |

MI300 has double the number of transistors the RTX 4090 currently has.

So, is Navi 41 going to be a chiplet GCD, inspired by MI300?

MI300 has GCD dies with up to 38 CUs each.

Navi 41 could have three or even four of those dies.

- Joined: Feb 24, 2023
- Messages: 2,299 (4.97/day)
- Location: Russian Wild West

| System Name | DLSS / YOLO-PC |
|---|---|
| Processor | i5-12400F / 10600KF |
| Motherboard | Gigabyte B760M DS3H / Z490 Vision D |
| Cooling | Laminar RM1 / Gammaxx 400 |
| Memory | 32 GB DDR4-3200 / 16 GB DDR4-3333 |
| Video Card(s) | RX 6700 XT / RX 480 8 GB |
| Storage | A couple SSDs, m.2 NVMe included / 240 GB CX1 + 1 TB WD HDD |
| Display(s) | Compit HA2704 / Viewsonic VX3276-MHD-2 |
| Case | Matrexx 55 / Junkyard special |
| Audio Device(s) | Want loud, use headphones. Want quiet, use satellites. |
| Power Supply | Thermaltake 1000 W / FSP Epsilon 700 W / Corsair CX650M [backup] |
| Mouse | Don't disturb, cheese eating in progress... |
| Keyboard | Makes some noise. Probably onto something. |
| VR HMD | I live in real reality and don't need a virtual one. |
| Software | Windows 10 and 11 |

I'm paying nothing; I've never bought an RTX card in my life. I utterly despise what jerket man is doing, and I wish nVidia would be hit by something really heavy. All they do is negative effort.

You're paying every cent twice over for Nvidia's live beta here, make no mistake.

AMD literally has a Jupiter-sized window wide open to adjust their products and drivers, yet we only see some performance improvements, nothing special, as if AMD thinks they're the Intel of 2012, when Intel had no one to compete with, so making a product a dozen percent better than the one from three years ago was completely fine.

It's not. Neither party deserves a cake. The only way I'm buying their BS SKUs is with major discounts, because they openly disrespect customers, me included. And AMD is worse because they don't capitalize on nVidia's massive blunders.

This is only because we're a decade, maybe a couple of decades, too early for this technology. Nothing is powerful enough yet to push this art to its full beauty. RT is the answer to the complete mirror and reflection deficit in games, and the only limiting factor is hardware: it can't process it fast enough yet. By the time my post is as old as I am now, RT will be a default feature; I don't doubt that at all (unless TWW3 puts us back in the Iron Age).

I can honestly not always tell if RT is really adding much, if anything, beneficial to the scene.

nGreedia being nGreedia became obvious when they released their first RTX cards. It doesn't nullify my point, though.

Do consider the fact that Ampere 'can't get DLSS3' for whatever reason.

That being said, I'm just completely pessimistic about the GPU market for the next couple of generations. GPUs are ridiculously expensive, and games are arriving in "quality" so terrible that they should be paying us to play them, not the other way around.

- Joined: Jan 17, 2018
- Messages: 388 (0.17/day)

| Processor | Ryzen 7 5800X3D |
|---|---|
| Motherboard | MSI B550 Tomahawk |
| Cooling | Noctua U12S |
| Memory | 32GB @ 3600 CL18 |
| Video Card(s) | AMD 6800XT |
| Storage | WD Black SN850 (1TB), WD Black NVMe 2018 (500GB), WD Blue SATA (2TB) |
| Display(s) | Samsung Odyssey G9 |
| Case | Be Quiet! Silent Base 802 |
| Power Supply | Seasonic PRIME-GX-1000 |

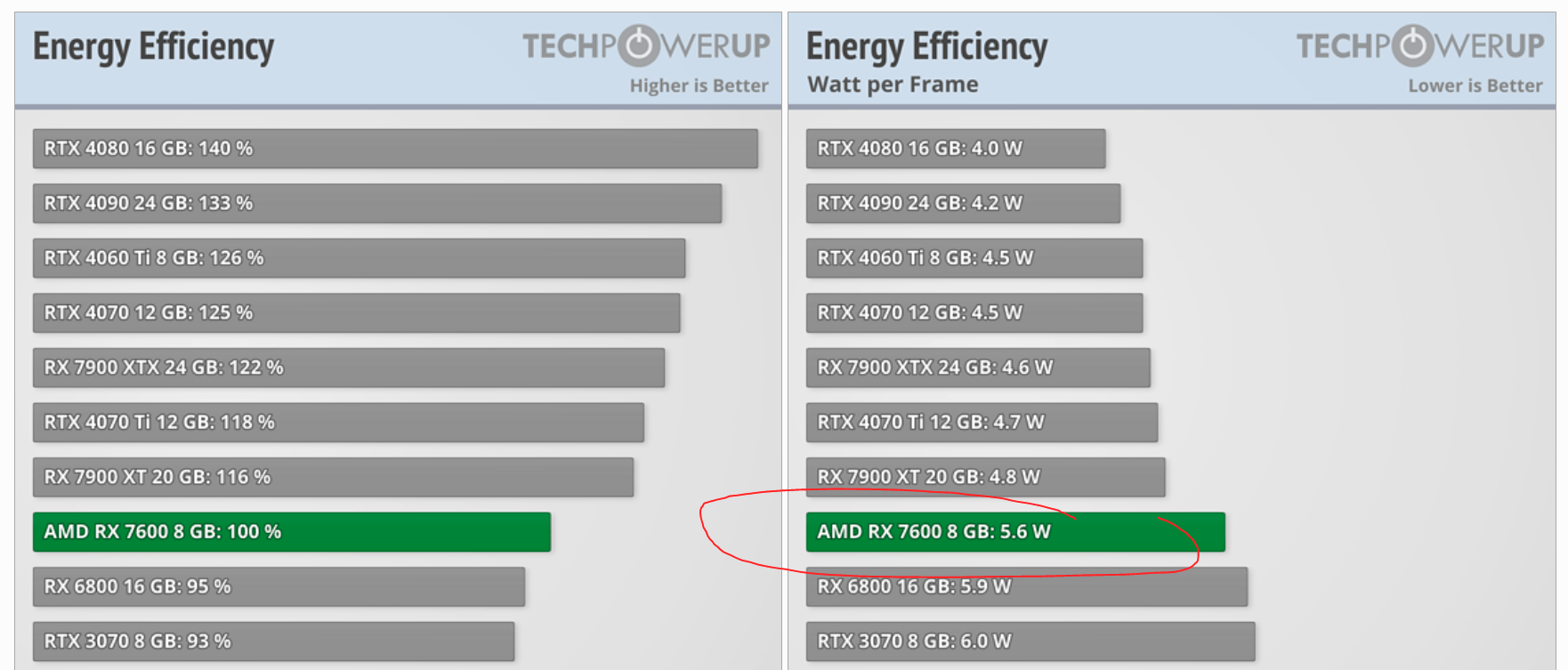

I'm sorry you don't seem to understand efficiency. There's a thing called performance, and there's a thing called power consumption. Different programs and scenarios use different amounts of power, and they also perform differently. I haven't read what Wizard uses to weigh efficiency, but I imagine it's probably something along the lines of average power consumption and performance over the duration of the gaming suite.

Just go one page before the one you point to in that review and explain those numbers to me.

AMD Radeon RX 7600 Review - For 1080p Gamers - Power Consumption | TechPowerUp

Again, this doesn't change the fact that the 7600 is, at the very least, 20% more efficient than the 6600XT.

The node change for the 7600, by the way, was mostly insignificant. TSMC 7nm to "6nm" was a density increase with little-to-no efficiency increase. This is seen more clearly on the efficiency graph, where the 7900XT and 7900XTX, both 5nm chips, have frames-per-watt equal to that of Nvidia's 4000 series, and better efficiency than the 7600.

Before answering, remember this:

The 7600 has a new arch and a new node; both should bring better efficiency. But what do we see? In some cases the 6600XT has lower power consumption, in some cases the 7600 does.

Nvidia, on the other hand, went from a mediocre Samsung 8nm node to a much superior TSMC 4nm node with the transition from the 3000 series to the 4000 series. It's not surprising to see a boost in efficiency.

At this point, there's not that much separating Nvidia and AMD GPUs in terms of hardware. What's disappointing about the current GPU generation is the pricing, along with the ridiculous naming "upsell" of all cards by both AMD & Nvidia.

- Joined: Jun 14, 2020
- Messages: 2,678 (1.85/day)

| System Name | Mean machine |
|---|---|
| Processor | 13900k |
| Motherboard | MSI Unify X |
| Cooling | Noctua U12A |
| Memory | 7600c34 |
| Video Card(s) | 4090 Gamerock oc |
| Storage | 980 pro 2tb |
| Display(s) | Samsung crg90 |
| Case | Fractal Torrent |
| Audio Device(s) | Hifiman Arya / a30 - d30 pro stack |
| Power Supply | Be quiet dark power pro 1200 |
| Mouse | Viper ultimate |
| Keyboard | Blackwidow 65% |

Okay: plenty of games (Dota 2, for example) crash the drivers when run on DX11. It was a very common issue with RDNA2, but I'm hearing it also happens with RDNA3.

So I would be interested in knowing a shocking number of other bugs, with proof.

Diablo 4 on RDNA2 makes your desktop flicker when you alt-tab to it; it happens on my G14 laptop.

Driver installation requires you to disconnect from the internet. Which I guess is okay if you already know about it; I didn't, and it took me a couple of hours to figure out why my laptop wasn't working.

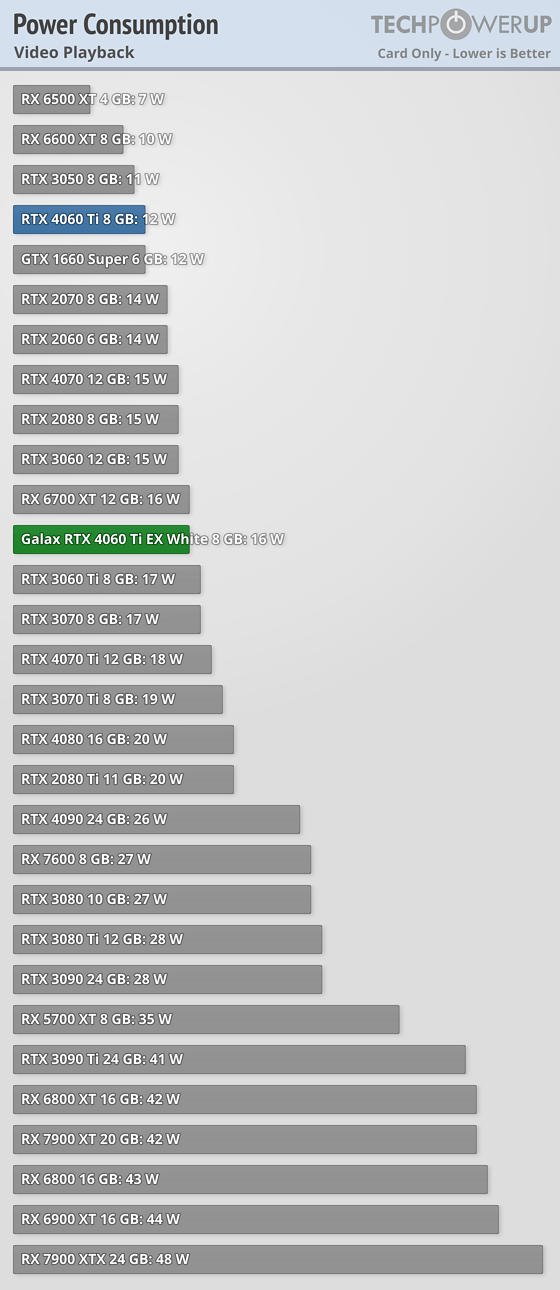

Interesting. Are you of the same opinion about CPUs? 'Cause AMD CPUs consume 30-40W sitting there playing videos while Intel drops to 5W.

I mean, why waste an extra 30W of power while watching a movie?
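For a sense of scale, extra energy is simply the power difference multiplied by time. A minimal sketch, assuming the 30W difference from the question above and a purely hypothetical viewing pattern of 2 hours per day; the function name and parameters are illustrative, not from any tool.

```python
def extra_energy_kwh(extra_watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy in kWh consumed by a constant extra power draw over a period."""
    watt_hours = extra_watts * hours_per_day * days
    return watt_hours / 1000.0


# Hypothetical usage pattern: 30 W extra for 2 hours of video a day, for a year.
yearly_kwh = extra_energy_kwh(extra_watts=30, hours_per_day=2)
print(f"{yearly_kwh:.1f} kWh per year")  # prints "21.9 kWh per year"
```

Multiplying the result by a local electricity price turns it into a yearly cost for whichever usage pattern you plug in.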

- Joined: Sep 6, 2013
- Messages: 3,050 (0.78/day)
- Location: Athens, Greece


First you say I don't understand efficiency, then you say that you don't know what Wizard does to measure it. You just grab and hold on to that 20% number because it suits your opinion.

I'm sorry you don't seem to understand efficiency. There's a thing called performance, and there's a thing called power consumption. Different programs and scenarios use different amounts of power, and they also perform differently. I haven't read what Wizard uses to weigh efficiency, but I imagine it's probably something along the lines of average power consumption and performance over the duration of the gaming suite.

Again, this doesn't change the fact that the 7600 is, at the very least, 20% more efficient than the 6600XT.

You do realize the above shows that you don't understand efficiency either. You just throw away the power consumption numbers on the previous page of the review and keep that efficiency number because it supports your opinion.

What I see is that efficiency is measured under a very specific scenario, which is Cyberpunk 2077. So if the 7600 enjoys an updated, optimized driver in that game, the result is in its favor.

INSTG8R

Vanguard Beta Tester

- Joined: Nov 26, 2004
- Messages: 7,970 (1.12/day)
- Location: Canuck in Norway

| System Name | Hellbox 5.1 (same case, new guts) |
|---|---|
| Processor | Ryzen 7 5800X3D |
| Motherboard | MSI X570S MAG Torpedo Max |
| Cooling | TT Kandalf L.C.S. (Water/Air) EK Velocity CPU Block/Noctua EK Quantum DDC Pump/Res |
| Memory | 2x16GB Gskill Trident Neo Z 3600 CL16 |
| Video Card(s) | Powercolor Hellhound 7900XTX |
| Storage | 970 Evo Plus 500GB, 2x Samsung 850 Evo 500GB RAID 0, 1TB WD Blue, Corsair MP600 Core 2TB |
| Display(s) | Alienware QD-OLED 34” 3440x1440 144hz 10Bit VESA HDR 400 |
| Case | TT Kandalf L.C.S. |
| Audio Device(s) | Soundblaster ZX/Logitech Z906 5.1 |
| Power Supply | Seasonic TX~’850 Platinum |
| Mouse | G502 Hero |
| Keyboard | G19s |
| VR HMD | Oculus Quest 2 |
| Software | Win 10 Pro x64 |

Poor Intel, now he's their problem with the "Raja Hype". I had Fury, I had Vega, and I loved them both, but they were, for lack of a better term, just "experiments" that, while not terrible, were never great either, outside of the unique tech.

We can blame Raja "HBM" Koduri.

- Joined: Feb 3, 2017
- Messages: 3,530 (1.32/day)


The methodology is there on the review pages. The efficiency page does state Cyberpunk 2077 but not the resolution/settings; the power consumption page does. The RX7600 is a full 26% faster than the RX6650XT in Cyberpunk at 2160p with ultra settings, and that probably carries over to the power/efficiency results despite the lower texture setting.

First you say I don't understand efficiency, then you say that you don't know what Wizard does to measure it. You just grab and hold on to that 20% number because it suits your opinion.

You do realize the above shows that you don't understand efficiency either. You just throw away the power consumption numbers on the previous page of the review and keep that efficiency number because it supports your opinion.

What I see is that efficiency is measured under a very specific scenario, which is Cyberpunk 2077. So if the 7600 enjoys an updated, optimized driver in that game, the result is in its favor.

The problem with this efficiency result is that the overall relative performance of the RX7600 and the RX6650XT at 2160p is basically the same. Similarly, power consumption for these two is also basically the same.

I do not believe it is the driver. But RDNA3 has tweaks in the architecture and setup that are likely to benefit a cutting edge game like Cyberpunk 2077.
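Because efficiency is a ratio of performance to power, a single-game measurement can diverge from the averaged picture. A rough sketch, assuming the figures in the posts above: a 26% lead in Cyberpunk 2077, roughly equal overall performance, and power draw treated as exactly equal (ratio 1.0) in both cases as an approximation of "basically the same". The function name is hypothetical.

```python
def relative_efficiency(perf_ratio: float, power_ratio: float) -> float:
    """Performance per watt of card A relative to card B.

    perf_ratio:  A's performance / B's performance.
    power_ratio: A's power draw / B's power draw.
    """
    return perf_ratio / power_ratio


# A = RX 7600, B = RX 6650 XT. Power is assumed equal (ratio 1.0) in both
# cases, approximating the posts' "basically the same".
scenarios = {
    "Cyberpunk 2077, 2160p ultra": relative_efficiency(perf_ratio=1.26, power_ratio=1.0),
    "Overall relative performance": relative_efficiency(perf_ratio=1.00, power_ratio=1.0),
}
for name, ratio in scenarios.items():
    print(f"{name}: {ratio - 1:+.0%} perf/W")
```

With equal power, the efficiency ratio simply tracks the performance ratio, so a test run in the one game where the 7600 leads by 26% reports a similar efficiency lead, while the averaged results would show roughly none.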

- Joined: Sep 6, 2013
- Messages: 3,050 (0.78/day)
- Location: Athens, Greece


The bold part is what makes me say that, in the end, I don't see anything meaningful from RDNA3 compared to RDNA2.

The methodology is there on the review pages. The efficiency page does state Cyberpunk 2077 but not the resolution/settings; the power consumption page does. The RX7600 is a full 26% faster than the RX6650XT in Cyberpunk at 2160p with ultra settings, and that probably carries over to the power/efficiency results despite the lower texture setting.

The problem with this efficiency result is that the overall relative performance of the RX7600 and the RX6650XT at 2160p is basically the same. Similarly, power consumption for these two is also basically the same.


Now, going to the power consumption page, we see:

| Scenario | RX 7600 | RX 6600 XT |
|---|---|---|
| Idle | 2W | 2W |
| Multi monitor | 18W | 18W |
| Video playback | 27W | 10W |
| Gaming | 152W | 159W |
| Ray tracing | 142W | 122W |
| Maximum | 153W | 172W |
| VSync 60Hz | 76W | 112W |
| Spikes | 186W | 207W |

From the above I see some optimizations in gaming and some odd problems. VSync 60Hz probably (IF I understand it correctly) shows that RDNA3 is way better than RDNA2 when asked to do a job that doesn't need to push the chip to its maximum performance. Raster, maximum and spikes show that the 7600 is more optimized than the 6600XT, although those optimizations could be in the rest of the PCB and its components, not the GPU itself. Idle at 2W can't really improve, and multi monitor is at the 6600XT's level, so AMD probably didn't improve that area over the 6000 series. Ray tracing is extremely odd and video playback is problematic. Best case scenario, AMD does what it did with the 7900 series and brings video playback power consumption down to around 10-15W.

In any case, a new architecture on a new node should be showing greens everywhere except idle, where consumption was already low, and not at almost equal average performance. The 7600 should have had the performance of the 6700 and power consumption equal to or better than the 6600XT in everything. We don't see this, especially on performance.
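To put the gaps from that power consumption list in one place, here is a small Python sketch that turns the quoted figures into absolute and percentage differences. The wattages are copied from the list above; using the RX 6600 XT as the baseline for the percentages, and the names `POWER_W` and `deltas`, are my own illustrative choices, not anything taken from the TPU review.

```python
# Power figures (watts) as listed above from the RX 7600 review:
# each entry is (RX 7600, RX 6600 XT).
POWER_W = {
    "Idle": (2, 2),
    "Multi monitor": (18, 18),
    "Video playback": (27, 10),
    "Gaming": (152, 159),
    "Ray tracing": (142, 122),
    "Maximum": (153, 172),
    "VSync 60Hz": (76, 112),
    "Spikes": (186, 207),
}


def deltas(data):
    """Return {scenario: (watts_diff, percent_diff)} for RX 7600 vs RX 6600 XT.

    The percentage uses the RX 6600 XT figure as the baseline (an assumption
    of this sketch). Negative values mean the RX 7600 draws less power.
    """
    out = {}
    for scenario, (rx7600, rx6600xt) in data.items():
        diff = rx7600 - rx6600xt
        out[scenario] = (diff, 100.0 * diff / rx6600xt)
    return out


if __name__ == "__main__":
    for scenario, (diff, pct) in deltas(POWER_W).items():
        print(f"{scenario:15s} {diff:+4d} W  {pct:+6.1f}%")
```

Running it makes the pattern above easy to see: VSync 60Hz comes out at -36 W (about -32%), while video playback is +17 W (+170%) and ray tracing +20 W.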

- Joined

- Dec 25, 2020

- Messages

- 4,908 (3.91/day)

- Location

- São Paulo, Brazil

| System Name | Project Kairi Mk. IV "Eternal Thunder" |
|---|---|
| Processor | 13th Gen Intel Core i9-13900KS Special Edition |
| Motherboard | MSI MEG Z690 ACE (MS-7D27) BIOS 1G |
| Cooling | Noctua NH-D15S + NF-F12 industrialPPC-3000 w/ Thermalright BCF and NT-H1 |
| Memory | G.SKILL Trident Z5 RGB 32GB DDR5-6800 F5-6800J3445G16GX2-TZ5RK @ 6400 MT/s 30-38-38-38-70-2 |
| Video Card(s) | ASUS ROG Strix GeForce RTX™ 4080 16GB GDDR6X White OC Edition |
| Storage | 1x WD Black SN750 500 GB NVMe + 4x WD VelociRaptor HLFS 300 GB HDDs |
| Display(s) | 55-inch LG G3 OLED |
| Case | Cooler Master MasterFrame 700 |
| Audio Device(s) | EVGA Nu Audio (classic) + Sony MDR-V7 cans |
| Power Supply | EVGA 1300 G2 1.3kW 80+ Gold |
| Mouse | Microsoft Ocean Plastic Mouse |
| Keyboard | Galax Stealth |
| Software | Windows 10 Enterprise 22H2 |
| Benchmark Scores | "Speed isn't life, it just makes it go faster." |

The bold part is what makes me say that, in the end, I don't see anything meaningful from RDNA3 compared to RDNA2.


That's because there aren't any. Not that these 20-something-watt improvements even matter: you're not running a GPU like this in a system with an expensive 80+ Titanium PSU anyway, and depending on the machine it's installed in, conversion losses on a budget PSU could potentially leave the 7600 worse off if system load isn't high enough to reach the power supply's optimal conversion range. Yet here you are comparing an early stepping of the equivalent previous-generation ASIC to the newest one on the newest drivers! (This isn't a dig at you, just at how preposterous this concept is.)

I don't feel duty-bound to defend the indefensible: these GPUs are hot garbage. Not that Nvidia's are any better below the 4090, and I've pointed out my bone to pick with the 4090 being heavily cut down more than once. This is a lost generation and I only hope the next one is better. I want a battle royale between Battlemage, RDNA 4 and Blackwell in the midrange, and a competent AMD solution at the high end.

I literally want to give AMD my money, but they don't make it easy! Every. Single. Generation. There's some bloody tradeoff, some but or if, some feature that doesn't work or some caveat that you have to keep in mind. This is why I bought my RTX 3090 after 4 generations of being a Radeon faithful. I no longer have the time or desire to spend hours on end debugging problems, working around limitations, or missing out on new features because AMD deems them "not important", or they "can't afford to allocate resources to that right now", or "we'll eventually make an open-source equivalent" that is either worse than the competition (such as FSR) or never gets adopted. I want a graphics card I can enjoy now, not potentially some day down the road. The day AMD understands this, they will be halfway down the road to glory.

- Joined

- Sep 17, 2014

- Messages

- 21,210 (5.98/day)

- Location

- The Washing Machine

| Processor | i7 8700k 4.6Ghz @ 1.24V |
|---|---|
| Motherboard | AsRock Fatal1ty K6 Z370 |
| Cooling | beQuiet! Dark Rock Pro 3 |
| Memory | 16GB Corsair Vengeance LPX 3200/C16 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Samsung 850 EVO 1TB + Samsung 830 256GB + Crucial BX100 250GB + Toshiba 1TB HDD |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Fractal Design Define R5 |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | XTRFY M42 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| Software | W10 x64 |

Gosh, I hadn't even looked at the 7600 review, because my interest in it doesn't even register on a scale with negatives, but yeah. Why did they release this POS?

It's an RDNA2 refresh with no USPs - look at that efficiency gap. That's not typical of RDNA3.

But yeah, I share some of your pessimism. OTOH, it's been worse. During Turing and mining, for example. What an absolute shitshow we've been having. Perhaps we're still on the road to recovery altogether.

The Vapor Chamber Fail on AMD side was worse though, I agree.

But Nvidia polished? That was three generations ago. GTX was polished. RTX is an open beta, and its feature set isn't even backwards compatible within its own short history. DLSS3 not being supported before Ada is absolutely not polish, a great product, or caring about customers. Ampere was a complete shitshow, and Ada is now positioned primarily to push you into a gen-to-gen upgrade path due to the lack of VRAM on the entire stack below the 4080. I think you need a reality check, and fast.


My 'you' is always a royal you unless specified otherwise.

I'm paying nothing; I've never bought an RTX card in my life. I utterly despise what jerket man is doing and I wish nVidia would get hit by something really heavy. All they put in is negative effort.

AMD literally has a Jupiter-sized window wide open to adjust their products and drivers, yet we only see some performance improvements, nothing special, as if AMD thinks they're the Intel of 2012, when Intel had no one to compete with, so making a product a dozen percent better than the one from three years earlier was completely fine.

It's not. Neither party deserves a cake. The only way I'm buying their BS SKUs is at major discounts, because they openly disrespect customers, me included. And AMD is worse because they don't capitalize on nVidia's massive blunders.

This is only because we're a decade, maybe a couple of decades, too early for this technology. Nothing is powerful enough yet to push this art to its full beauty. RT is the answer to the complete deficit of mirrors and reflections in games, and the only stopping factor is hardware: it can't process it fast enough as of yet. By the time my post is as old as I am now, RT will be a default feature, I don't doubt that (unless TWW3 puts us back to the Iron Age).

nGreedia being nGreedia became obvious when they released their first RTX cards. It doesn't nullify my point though.

That being said, I'm just completely pessimistic about the GPU market for the next couple of generations. GPUs are ridiculously expensive, and games are coming out in "quality" so terrible that they should be paying us to play them, not the other way around.


You have been looking at the wrong numbers, as I also pointed out somewhere earlier or somewhere else - RDNA3's efficiency is very close to Ada's, and chart-topping altogether. See above. The worst-case gap between RDNA3 and Ada is 15% in efficiency, if you take the 4080 at 4.0W versus the 7900's 4.6W. Also take note of the fact that the monolithic and fairly linearly scaled Ada is itself showing gaps of 15% between cards in its own stack just the same.

You have the card, we look at numbers from the TPU review. Either your card works as it should, which would be great and would mean something went wrong with the review numbers, or something else is happening.

Reading this being said of the company that presented us with the shoddy and totally unnecessary 12VHPWR connector is... ironic. The same thing goes for all those 3-slot GPUs that have no business being 3-slotters given their TDPs. Ada is fucking lazy, and much like RDNA3 it barely moves forward; the similarities are staggering. There is a power efficiency jump over the past gen, and that's really all she wrote. The rest is marketing BS. Nvidia just presents its thumb-up-ass story in a better way, that is really all it is. GeForce is a hand-me-down from enterprise-first technology, now more than ever.

I love how people are using this image as evidence that AMD isn't rubbish at low-load power consumption. Look at the bottom 5 worst GPUs. LOOK AT THEM. Who makes them?

Then look at the 3090 and 3090 Ti. They're near the bottom, yet their successors are in the middle of the pack - almost halving power consumption. It's like one company is concerned with making sure their product is consistently improving in all areas generation-to-generation, while the other is sitting in the corner with their thumb up their a**.

The fact that AMD has managed to bring down low-load power consumption since the 7000-series launch IS NOT something to praise them for, because if they hadn't completely BROKEN power consumption with the launch of that series (AND THEIR TWO PREVIOUS GPU SERIES), they wouldn't have had to FIX it.

Now all of the chumps are going to whine "bUt It DoESn'T mattER Y u MaKIng a fUSS?" IT DOES MATTER because it shows that one of these companies cares about delivering a product where every aspect has been considered and worked on, and the other just throws their product over the fence when they think they're done with it and "oh well it's the users' problem now". If I'm laying down hundreds or thousands of dollars on something, I expect it to be POLISHED - and right now only one of these companies does that.

It's an incredibly lazy non-argument used by those who are intellectually bankrupt. The best course of action is to ignore said people.



- Joined

- Feb 24, 2023

- Messages

- 2,299 (4.97/day)

- Location

- Russian Wild West

| System Name | DLSS / YOLO-PC |
|---|---|
| Processor | i5-12400F / 10600KF |
| Motherboard | Gigabyte B760M DS3H / Z490 Vision D |
| Cooling | Laminar RM1 / Gammaxx 400 |
| Memory | 32 GB DDR4-3200 / 16 GB DDR4-3333 |
| Video Card(s) | RX 6700 XT / RX 480 8 GB |
| Storage | A couple SSDs, m.2 NVMe included / 240 GB CX1 + 1 TB WD HDD |
| Display(s) | Compit HA2704 / Viewsonic VX3276-MHD-2 |
| Case | Matrexx 55 / Junkyard special |
| Audio Device(s) | Want loud, use headphones. Want quiet, use satellites. |
| Power Supply | Thermaltake 1000 W / FSP Epsilon 700 W / Corsair CX650M [backup] |
| Mouse | Don't disturb, cheese eating in progress... |
| Keyboard | Makes some noise. Probably onto something. |
| VR HMD | I live in real reality and don't need a virtual one. |
| Software | Windows 10 and 11 |

Sorry, it was very late at night and I'm generally retarded. Forgot this royal "you" ever existed.

My 'you' is always a royal you unless specified otherwise

Turing was a textbook example of "what the heck," whereas mining was a catalyst, not the real reason for their treason. Now is worse. They now have no excuse at all for their sheer greed, except for the fact that it's a de facto nGreedia monopoly, since AMD haven't struck back since... the late noughties, I'd guess. AMD's best product line so far, the RX 6000 series, was borderline impossibly priced at launch. Had the mining hysteria not existed, AMD would have been forced to almost halve their prices in order to compete with Ampere. The RX 6700 XT was slower than the RTX 3070 at launch and didn't perform RT-wise at all, while also lacking DLSS. Yet their MSRPs were nearly the same (480 USD for the 6700 XT versus 500 USD for the 3070).

been worse. During Turing and mining for example.

So, despite nGreedia deserving their greedy men status, we all have to consider AMD even worse, because they have no reason to be greedy.