| System Name | The Expanse |
|---|---|
| Processor | AMD Ryzen 7 9800X3D |
| Motherboard | Asus Prime X670E-Pro Wifi BIOS 3222 AGESA PI 1.2.0.3a |
| Cooling | Corsair H150i Elite LCD XT |
| Memory | 64GB G.SKILL Trident Z5 Neo RGB DDR5 6000 CL 30-40-40-96 1T |
| Video Card(s) | XFX Radeon RX 7900 XTX Magnetic Air (25.6.1) |
| Storage | WD SN850X 2TB / Corsair MP600 1TB / Samsung 860Evo 1TB x2 RAID 0 / Asus NAS AS1004T V2 20TB |
| Display(s) | LG 34GP83A-B 34 Inch 21:9 UltraGear Curved QHD (3440 x 1440) 1ms Nano IPS 160Hz |
| Case | Fractal Design Meshify S2 |
| Audio Device(s) | Creative X-Fi + Logitech Z-5500 + HS80 Wireless |
| Power Supply | Corsair AX850 Titanium |
| Mouse | Corsair Dark Core RGB SE |
| Keyboard | Corsair K100 |
| Software | Windows 10 Pro x64 22H2 |
| Benchmark Scores | https://valid.x86.fr/asijsu https://browser.geekbench.com/v6/cpu/11073923 |

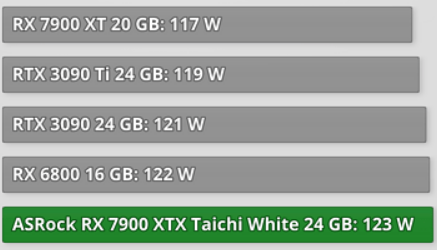

Can you post a source? It's widely known that the 7900 series chugs power when the hardware is under relatively light loads.

They're drawing power like crazy at 4K and/or high refresh rates (80W more than a 4090 FE), with dual monitors, during window movement, and during video playback. In gaming it's even worse: in most games an FPS cap has almost zero effect on power consumption. AMD also crippled the underclocking range to 10% (the 6000 series allowed up to 50%). With their latest driver they claimed improvements, but ComputerBase tested it and found ZERO improvements. If they can't fix it more than half a year after release, it's very likely a hardware issue (which can't be fixed).

And then do the
