
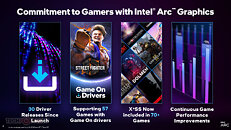

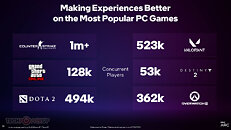

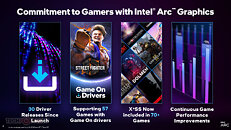

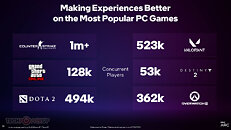

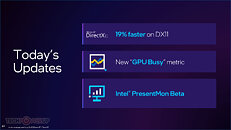

Intel Graphics today announced the Q3-2023 major update of its Arc GPU Graphics drivers, which will be released shortly. The latest driver promises to be a transformative update recommended for all Intel GPU users. The company says that it has re-architected several under-the-hood components of the drivers to make A-series GPUs significantly faster. The company also put in engineering effort to reduce frame-times, and introduced a new way of measuring the GPU's contribution to them, so users can figure out whether they are in a CPU-limited scenario or a GPU-limited one. Lastly, the company updated its PresentMon utility with a new front-end interface.

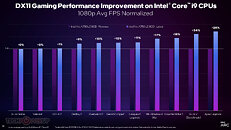

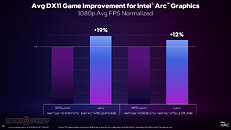

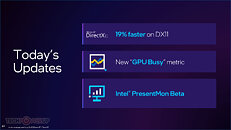

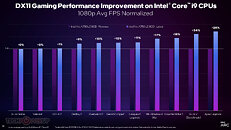

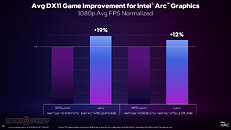

Intel Arc "Alchemist" is a ground-up discrete GPU architecture that was designed mainly for DirectX 12 and Vulkan, but relied on API translation for DirectX 9 games. With its Spring driver updates, the company released a major update that uplifted DirectX 9 game performance by 43% on average. This was possible because, even though API translation was being used for DirectX 9 games, there was broad scope for per-game optimization, and DirectX 9 remains a relevant API for several current e-sports titles. With today's release, Intel promises a similar round of performance updates, with uplifts of as much as 19% in DirectX 11 titles at 1080p, measured with an A750 on a Core i5-13400F based machine. These gains average +12% on the faster Core i9-13900K processor, the logic being that the slower processor benefits more from the changes Intel made to its DirectX 11 driver.
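To make the percentages concrete, here is a minimal sketch of how a relative uplift is computed from two average frame rates. The frame-rate values are hypothetical, chosen only so the arithmetic lands on the quoted 19% and 12% figures; they are not Intel's measurements.

```python
def uplift_percent(fps_before: float, fps_after: float) -> float:
    """Relative gain of fps_after over fps_before, in percent."""
    if fps_before <= 0:
        raise ValueError("fps_before must be positive")
    return (fps_after / fps_before - 1.0) * 100.0


# Hypothetical averages (not measured data), old driver vs. new driver.
slower_cpu = uplift_percent(100.0, 119.0)  # stands in for the Core i5-13400F case
faster_cpu = uplift_percent(100.0, 112.0)  # stands in for the Core i9-13900K case

print(f"slower CPU: +{slower_cpu:.0f}%  faster CPU: +{faster_cpu:.0f}%")
```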

Intel has developed a new performance metric called "GPU Busy." Put simply, this is the time taken by the GPU alone to process an API call from the CPU. Game rendering is a collaborative workload between the CPU and GPU. To generate each frame in a game, the CPU has to tally the game-state with what needs to be displayed on the screen, organize this information into an API call, and send it to the GPU, which then interprets the API call and draws a frame.

Every time the CPU's share of the calculations for a frame is done, it puts out a "present" call to the GPU driver. While the GPU is rendering the frame, the CPU thread responsible for the frame is essentially idle until the GPU posts a "present return" state back to the CPU, so it can begin work on the next frame. The time difference between two presents is basically the frame-time (the time it takes for your machine to generate a frame). After the GPU generates a frame, it is pushed to the frame-buffer, and onward to the display controller and the display. Intel figured out a way to break down frame-time further into the GPU's specific contribution toward it, which the company calls GPU Busy.
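The "time between two presents" definition can be sketched in a few lines. This is only an illustration of the arithmetic, assuming a list of present timestamps in milliseconds is already available; the timestamps and function names are made up for the example and do not come from any Intel tool.

```python
from typing import List


def frame_times_ms(present_timestamps_ms: List[float]) -> List[float]:
    """Frame-time of each frame: the gap between consecutive present calls."""
    return [
        later - earlier
        for earlier, later in zip(present_timestamps_ms, present_timestamps_ms[1:])
    ]


def average_fps(frame_times: List[float]) -> float:
    """Average frame rate implied by a list of frame-times in milliseconds."""
    total_ms = sum(frame_times)
    if total_ms <= 0:
        raise ValueError("need at least one positive frame-time")
    return 1000.0 * len(frame_times) / total_ms


# Hypothetical present timestamps (ms since the start of a capture).
presents = [0.0, 16.0, 33.0, 50.0, 70.0]
times = frame_times_ms(presents)
print(times, round(average_fps(times), 1))
```

Five presents yield four frame-times, since each frame-time needs a present on both sides.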

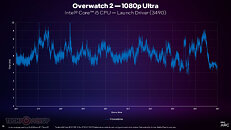

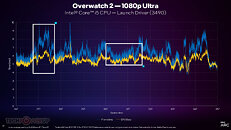

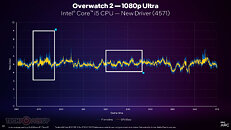

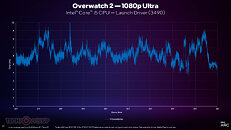

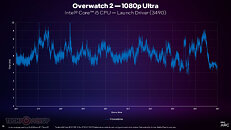

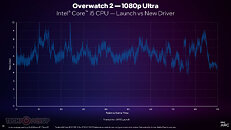

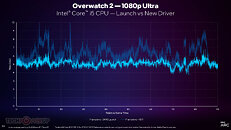

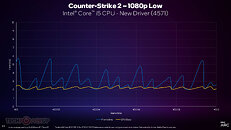

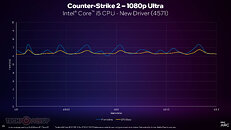

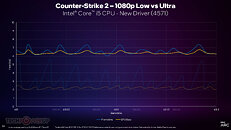

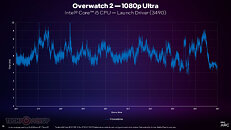

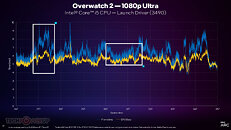

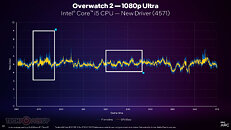

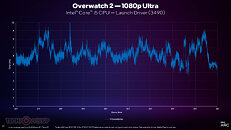

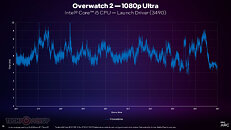

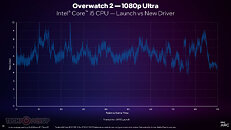

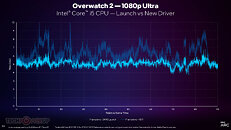

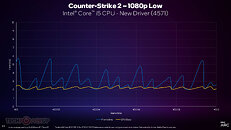

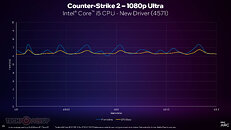

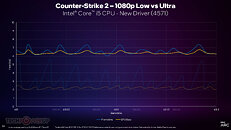

With the new GPU Busy counter, the company is able to show just how much the latest drivers contribute to minimizing the frame-time. To do so, the company first showed us a frame-time graph of "Overwatch 2" with the launch driver, showing a wild amount of jitter. It then showed the GPU Busy contribution to it. Since GPU Busy is a subset of frame-time, it is always the lower of the two values. Wherever there is a large gap between the GPU Busy value and the overall frame-time, you are in a CPU-limited scenario, whereas in regions of the graph with narrower gaps between the two values, you are either GPU-limited or balanced.
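The gap rule described above can be expressed as a small classifier. This is a sketch under stated assumptions: the `Frame` type, the `classify` function, and especially the 20% gap threshold are hypothetical choices made for illustration; the article does not give a numeric cut-off.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    frame_time_ms: float  # time between two presents
    gpu_busy_ms: float    # portion of that time the GPU spent working


def classify(frame: Frame, gap_threshold: float = 0.2) -> str:
    """Label a frame by how much of its frame-time is NOT GPU Busy.

    gap_threshold is an assumed cut-off (fraction of frame-time),
    not a value published by Intel.
    """
    if frame.frame_time_ms <= 0:
        raise ValueError("frame_time_ms must be positive")
    gap_fraction = (frame.frame_time_ms - frame.gpu_busy_ms) / frame.frame_time_ms
    if gap_fraction > gap_threshold:
        return "cpu-limited"
    return "gpu-limited or balanced"


# Hypothetical samples: a wide gap, then a narrow one.
print(classify(Frame(frame_time_ms=20.0, gpu_busy_ms=8.0)))
print(classify(Frame(frame_time_ms=10.0, gpu_busy_ms=9.5)))
```

Using a fraction of frame-time rather than an absolute gap in milliseconds keeps the rule comparable across high and low frame rates, though either choice would fit the description.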

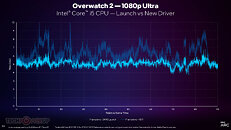

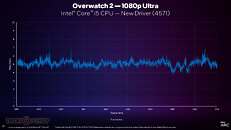

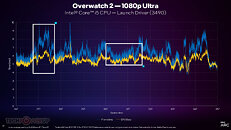

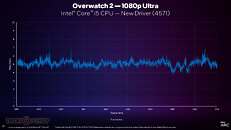

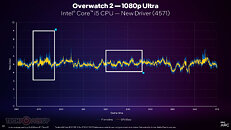

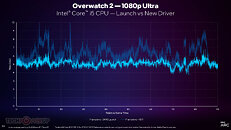

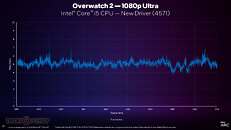

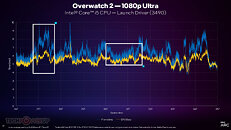

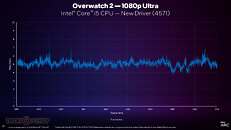

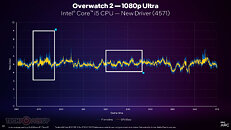

With that out of the way, the company showed us the frame-time graph of its latest driver, which shows significantly lower frame-times and much less jitter. When the graph for a Core i5-13400F-powered machine with an A750 on the latest driver is overlaid, you notice that not only is the overall frame-time lower and jitter suppressed, but the GPU Busy time is also reduced. There is greater coherence between CPU and GPU performance. Overall, Intel's effort isn't directed only toward improving frame-rates (performance), but also "smoothness" (reduced frame-time jitter).
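"Jitter" has no single agreed definition, so the following is just one reasonable way to quantify it, assuming a list of frame-times in milliseconds: the standard deviation of the frame-times plus the mean change from one frame to the next. The sample values are invented for illustration and are not taken from Intel's graphs.

```python
from statistics import mean, pstdev
from typing import Dict, List


def jitter_stats(frame_times_ms: List[float]) -> Dict[str, float]:
    """Summarize frame-time level and variation for a capture."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame-times")
    # Absolute change between each frame-time and the next one.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return {
        "mean_ms": mean(frame_times_ms),
        "stdev_ms": pstdev(frame_times_ms),
        "mean_delta_ms": mean(deltas),
    }


# Hypothetical captures: an uneven one and a smoother, faster one.
uneven = jitter_stats([8.0, 20.0, 9.0, 19.0])
smooth = jitter_stats([10.0, 10.5, 10.0, 10.5])
print(uneven)
print(smooth)
```

A driver improvement of the kind described would show up as both a lower `mean_ms` and lower `stdev_ms` and `mean_delta_ms`.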

The GPU Busy metric can be measured using the latest version of PresentMon, which the company is releasing as its own standalone overlay application. You can read all about it here.

The complete slide-deck from Intel follows.

View at TechPowerUp Main Site
