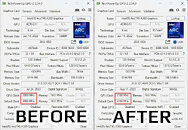

Intel Graphics has found an unusual way to step up the performance of its entry-level Arc A380 graphics card. While other companies prevent BIOS updates and generally discourage overclocking, Intel has given the A380 a free vendor overclock. With the latest Arc GPU Graphics driver, 101.4644 WHQL, Intel has increased the base frequency of the GPU: the driver installer includes a firmware update alongside the driver. The A380 has a reference base clock of 2000 MHz, which boosts up to 2050 MHz. With the firmware that ships in the 101.4644 driver package, the base frequency rises to 2150 MHz. It's not much, but hey, who doesn't want a free 7.5% overclock to go with the recent 19% performance increase in DirectX 11 games, and the over 40% increase in DirectX 9 ones?
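For anyone who wants to check the 7.5% figure, here is a minimal sketch of the arithmetic. The function name `percent_increase` is just an illustrative helper, not anything from Intel's tooling, and the only inputs are the two base clocks quoted above.

```python
def percent_increase(old_mhz: float, new_mhz: float) -> float:
    """Return the relative increase from old_mhz to new_mhz, in percent."""
    # Multiply before dividing so the quoted clocks give an exact result.
    return (new_mhz - old_mhz) * 100 / old_mhz


# Base clocks quoted in the post: 2000 MHz reference, 2150 MHz after the update.
old_base_mhz = 2000
new_base_mhz = 2150

uplift = percent_increase(old_base_mhz, new_base_mhz)
print(f"Base clock uplift: {uplift:.1f}%")
```

Keep in mind this is the increase in base clock only; a clock bump generally does not translate one-to-one into frame rates.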

View at TechPowerUp Main Site | Source
