Brazilian tech enthusiast Paulo Gomes, in association with Jefferson Silva and Ygor Mota, successfully modded an EVGA GeForce RTX 2080 "Turing" graphics card to 16 GB of memory. This was done by replacing each of its eight 8 Gbit GDDR6 memory chips with 16 Gbit ones, doubling the density. Across the GPU's 256-bit wide memory bus, eight of these chips add up to 16 GB. The memory speed was left at the 14 Gbps reference, and the GPU clocks were unchanged as well.
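The capacity arithmetic above can be checked with a short Python sketch. The bus width, chip densities, and data rate come from the article; the 32-bit per-chip interface width is an assumption based on how GDDR6 devices are normally wired, and the function names are purely illustrative.

```python
# Back-of-the-envelope check of the memory configuration described above.
BUS_WIDTH_BITS = 256       # RTX 2080 memory bus width (from the article)
CHIP_INTERFACE_BITS = 32   # ASSUMPTION: each GDDR6 chip presents a 32-bit interface
DATA_RATE_GBPS = 14        # per-pin data rate in Gbps (from the article)


def chip_count(bus_width_bits=BUS_WIDTH_BITS, chip_bits=CHIP_INTERFACE_BITS):
    """Number of memory chips needed to populate the whole bus."""
    return bus_width_bits // chip_bits


def capacity_gb(density_gbit, chips):
    """Total capacity in gigabytes: gigabits per chip times chips, divided by 8."""
    return density_gbit * chips / 8


def bandwidth_gb_per_s(data_rate_gbps=DATA_RATE_GBPS, bus_width_bits=BUS_WIDTH_BITS):
    """Peak memory bandwidth in GB/s: per-pin rate times bus width, divided by 8."""
    return data_rate_gbps * bus_width_bits / 8


chips = chip_count()
print(f"chips on the bus: {chips}")
print(f"stock capacity:   {capacity_gb(8, chips):.0f} GB")
print(f"modded capacity:  {capacity_gb(16, chips):.0f} GB")
print(f"peak bandwidth:   {bandwidth_gb_per_s():.0f} GB/s")
```

Since the bus width and data rate are untouched by the mod, the peak bandwidth works out the same before and after; only the capacity doubles.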

The mod involves de-soldering each of the eight 8 Gbit chips, cleaning up the memory pads so that no pins are shorted, using a GDDR6 stencil to place replacement solder balls, and then soldering the new 16 Gbit chips onto the pads under heat. Besides replacing the memory chips, a series of SMD jumpers near the BIOS ROM chip needs to be adjusted, which lets the GPU correctly recognize the 16 GB memory size. The TU104 silicon supports higher-density memory by default, as NVIDIA uses this chip on some of its professional graphics cards with 16 GB of memory, such as the Quadro RTX 5000.

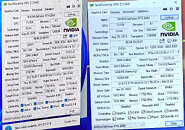

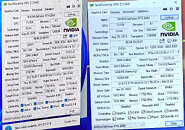

That's it: nothing needs to be done on the software side, and TechPowerUp GPU-Z should show the detected memory size. A "Resident Evil 4" benchmark run with a performance overlay shows the game using almost 9.8 GB of video memory on the modded card, compared to 7.7 GB on the original, and posting a performance increase of roughly 7.8%, from 64 FPS on average to 69 FPS. This is greater than the performance deltas typically seen between the 8 GB and 16 GB variants of the RTX 4060 Ti. Besides the gaming performance increase, the 16 GB of memory should also help the RTX 2080 with generative AI workloads, particularly those that do not fit in 8 GB.
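As a quick sanity check on the benchmark figures, the relative changes can be recomputed from the rounded numbers quoted above. This is only a sketch: `percent_change` is an illustrative helper, and because the inputs are the rounded averages from the article, the result may differ in the second decimal place from a figure derived from unrounded frame rates.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100.0


# Average frame rate in the "Resident Evil 4" run (rounded values from the article).
fps_gain = percent_change(64, 69)

# Video memory in use, in GB (values from the article).
vram_growth = percent_change(7.7, 9.8)

print(f"FPS uplift:  {fps_gain:.1f}%")
print(f"VRAM growth: {vram_growth:.1f}%")
```

With the rounded averages, the frame-rate uplift comes out at about 7.8%, while the game's memory footprint grows by a considerably larger fraction.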

View at TechPowerUp Main Site | Source
