- Joined: Dec 28, 2012
- Messages: 4,579 (1.00/day)

| System Name | Skunkworks 3.0 |
|---|---|
| Processor | 5800X3D |
| Motherboard | X570 Unify |
| Cooling | Noctua NH-U12A |
| Memory | 32 GB 3600 MHz |
| Video Card(s) | ASRock 6800 XT Challenger D |
| Storage | Sabrent Rocket 4.0 2 TB, MX500 2 TB |
| Display(s) | Asus 1440p 144 Hz 27" |
| Case | Old arse Cooler Master 932 |
| Power Supply | Corsair 1200 W Platinum |
| Mouse | *squeak* |
| Keyboard | Some old office thing |
| Software | Manjaro |

RDNA3 is an architecture; the 6900 XT is a chip. RDNA3 had a pretty decent uplift over the 6900 XT in RT, so where are you seeing the lack of improvement?

RDNA3 largely matched the RTX 3090 in RT, while RDNA2 lagged a fair bit behind it.

Core counts and cache aren't the only things that affect RT performance.

Which RDNA3 card are you referring to? The 7800 XT has roughly the same core config as a 6900 XT, and near-identical ray-tracing performance in most games.

Each CU contains ray-tracing hardware, so more CUs means more ray-tracing performance. Per CU, RDNA3 brought almost no improvement. Go look at TPU's 7800 XT review: on average it's a whopping 3% faster in RT than the 6900 XT.

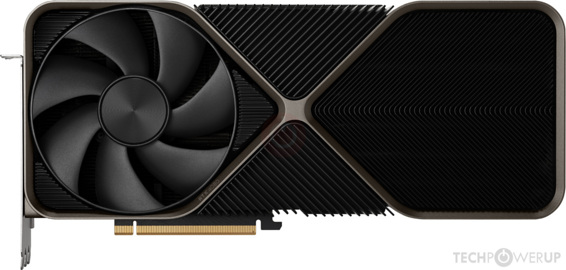

AMD Radeon RX 7800 XT Review

With the Radeon RX 7800 XT, AMD is going after the GeForce RTX 4070. Our review confirms that AMD has achieved performance parity in rasterization and is pretty close in ray tracing, at a much better price point, and they are giving you 16 GB VRAM, instead of just 12 GB.
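The per-CU argument boils down to one normalization: divide each card's RT result by its CU count, then compare. Below is a minimal Python sketch under stated assumptions. `per_cu_uplift` and `CU_COUNT` are hypothetical names. The 103 vs 100 figures only encode the ~3% average RT lead quoted from the review. Both CU counts are set to the same placeholder value to reflect the "roughly the same core config" premise; they are not the cards' actual specs.

```python
def per_cu_uplift(perf_new: float, perf_old: float, cus_new: int, cus_old: int) -> float:
    """Return the fractional per-CU performance change of a new chip over an old one.

    perf_* are relative performance figures (e.g. an average-FPS index),
    cus_* are compute-unit counts. Inputs used below are illustrative placeholders.
    """
    if perf_old <= 0 or cus_new <= 0 or cus_old <= 0:
        raise ValueError("performance and CU counts must be positive")
    per_cu_new = perf_new / cus_new  # RT performance delivered by each new CU
    per_cu_old = perf_old / cus_old  # RT performance delivered by each old CU
    return per_cu_new / per_cu_old - 1.0


# Hypothetical placeholder: both chips assumed to have the same CU count,
# per the "roughly the same core config" premise (not real spec numbers).
CU_COUNT = 72

# 103 vs 100 encodes the ~3% average RT lead quoted from the review.
uplift = per_cu_uplift(perf_new=103.0, perf_old=100.0, cus_new=CU_COUNT, cus_old=CU_COUNT)
print(f"Per-CU RT uplift: {uplift:.1%}")
```

With equal CU counts the per-CU uplift is just the raw 3% lead; with unequal counts the same raw lead maps to a different per-CU figure, which is why the core-config premise carries the argument.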

Or, if you want the best in class, shell out $2K for a 4090. Who the fuck wants to spend used-car money on a fricken GPU that will be obsolete in two years, and then get bummed for another $2K when the 5090 comes out? That is not the majority of buyers, and Nvidia is taking the piss. More people are now waiting multiple generations to upgrade, because $500-$600 for a shit mid-range GPU is not realistic in the real world. I have an RX 6800 I bought for £350, and nothing new comes close to it in performance per pound, even after two years of newer GPUs. I am going to hodl this until at least the Radeon 10 series or Nvidia 70**. They can charge their overpriced BS money for the same performance class all they want, both of them; I won't be spending a dime on either.
