You calculate the speed of the cache against the bandwidth of the VRAM; it's an average. Edit: this is my take. AMD just states the *maximum* bandwidth instead, which is 3.5 TB/s for the XTX and 2.9 TB/s for the 7900 XT. The 6900 XT's max. bandwidth is ~2 TB/s.

That should not be an average.

I looked up the launch slides now. Taking the 6900 XT as an example, using TPU's review and the slides in it:


www.techpowerup.com

There are two important numbers for the bandwidth discussion:

1. The VRAM bandwidth: 512 GB/s in the case of the 6900 XT

2. The cache bandwidth: 2 TB/s in the case of the 6900 XT

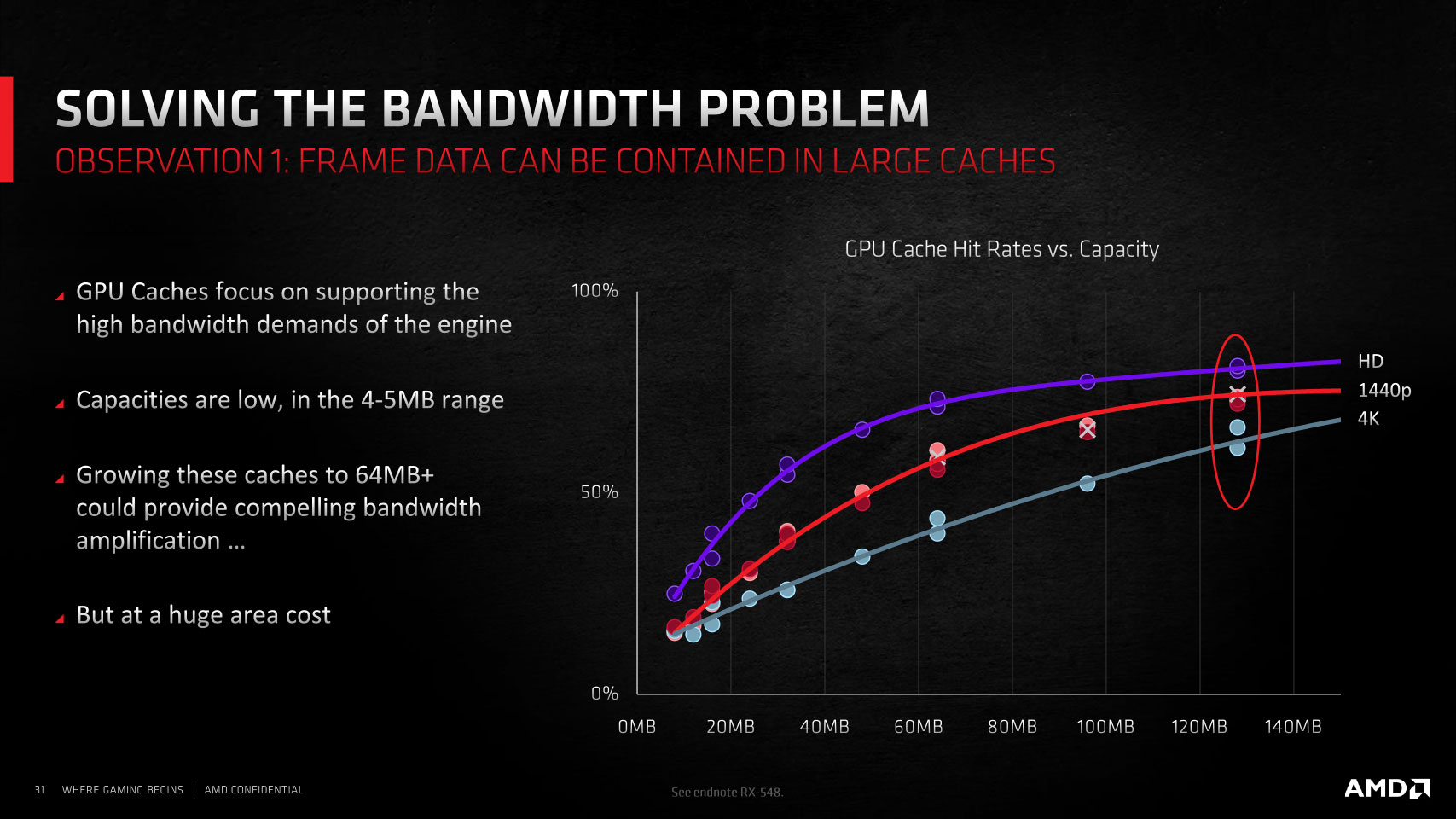

Effective bandwidth is not one specific number; it varies across usage scenarios. For obvious reasons it lands somewhere between these two numbers. The logical way to arrive at a single figure for effective bandwidth is to account for the cache hit rate. According to AMD's slides, the cache hit rate in the games they tested at 2160p was 58%, so roughly 58% of accesses at 2 TB/s and the rest at 512 GB/s: 0.58 * 2048 + 0.42 * 512 ≈ 1403 GB/s. As you can see from the slide, the cache hit rate is higher at 1440p and 1080p, which gives noticeably higher effective bandwidth at those resolutions. Of course, even though it is based on actual usage as measured by AMD, this is still fairly theoretical, and real results will vary depending on what the memory usage actually looks like.
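A minimal sketch of the hit-rate weighting above. It assumes the simple linear model used in this post (each access is served entirely from cache or entirely from VRAM, with no overlap) and takes 2 TB/s as 2048 GB/s; the function name `effective_bandwidth` is just for illustration.

```python
def effective_bandwidth(hit_rate: float, cache_gbps: float, vram_gbps: float) -> float:
    """Weight cache and VRAM bandwidth by the cache hit rate (simple linear model)."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_gbps + (1.0 - hit_rate) * vram_gbps


# 6900 XT figures from the post: 2 TB/s cache, 512 GB/s VRAM, 58% hit rate at 2160p
CACHE_GBPS = 2048.0
VRAM_GBPS = 512.0

for hit_rate in (0.0, 0.58, 1.0):
    bw = effective_bandwidth(hit_rate, CACHE_GBPS, VRAM_GBPS)
    print(f"hit rate {hit_rate:.0%}: {bw:.0f} GB/s")
```

The 0% row is plain VRAM bandwidth, and the 100% row is the best-case figure that ends up on launch slides; the 58% row reproduces the ~1403 GB/s estimate.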

On the slides, AMD naturally uses the theoretical maximum effective bandwidth at a 100% cache hit rate, but that is to be expected from launch slides by a company trying to sell you on something.

Edit:

If you look at the AMD slide above, it should give you a hint about why AMD went with smaller caches in the next generation. Cards with a 256-bit memory bus are aimed at 1440p, and 64MB does seem to be a pretty nice sweet spot for that. Yes, 2160p will suffer somewhat, but the cards aimed at it in the new generation also get a wider memory bus and, accordingly, more cache.

Why would they want to reduce the amount of cache? Transistor budget. That 128MB of cache is 6-7 billion transistors. Navi 21 in the 6900 XT has 26.8 billion transistors in total, so the cache accounts for almost a quarter of the transistors on the entire chip. If they can halve the cache size and still have it perform almost as well, that is a big win. In the context of Navi 21, halving the cache would cut the transistor count by 12-13%, and that is a lot.
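The 6-7 billion figure can be sanity-checked with a back-of-the-envelope estimate. This sketch assumes a classic 6-transistor (6T) SRAM cell per bit and reads 128 MB as 128 MiB; it ignores tags, ECC and peripheral logic, so treat the result as a rough lower bound rather than a die analysis.

```python
TRANSISTORS_PER_BIT = 6          # assumption: classic 6T SRAM cell
CACHE_BYTES = 128 * 1024 ** 2    # 128 MB of cache, taken as MiB
NAVI21_TRANSISTORS = 26.8e9      # Navi 21 total, from the post


def sram_transistors(cache_bytes: int, transistors_per_bit: int = TRANSISTORS_PER_BIT) -> int:
    """Transistors in the bit cells alone (no tags, ECC, or peripheral logic)."""
    return cache_bytes * 8 * transistors_per_bit


full = sram_transistors(CACHE_BYTES)
half = sram_transistors(CACHE_BYTES // 2)
print(f"128 MB cache: {full / 1e9:.2f} billion transistors, {full / NAVI21_TRANSISTORS:.1%} of Navi 21")
print(f"Halving it saves {half / 1e9:.2f} billion, {half / NAVI21_TRANSISTORS:.1%} of the chip")
```

This lands at about 6.4 billion transistors (roughly 24% of the chip), and halving the cache saves about 12%, consistent with the figures above.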

The 4090 has a much lower 'effective' bandwidth but slaughters 4K, whereas the XTX plateaus heavily despite a much higher 'effective' bandwidth. Of course, we will then say 'but the 4090 is just fastuuhhr' due to more shaders, which effectively means its performance is NOT capped by the bandwidth. So the XTX's effective bandwidth also says nothing about its actual performance, and it clearly gains nothing further from it if the core performance isn't there. If you compare the XTX and the 4090 like that, the only possible conclusion is that AMD balanced that card's bandwidth horribly: there's a huge amount of waste. And what @londiste said is probably true: there's no benefit to ever more cache or ever higher 'effective' bandwidth.

The 4090 probably has effective bandwidth in a similar range to the 7900 XTX. The architectural differences are noticeable, though: as AcE noted before, the 40 series has an L2 cache while RDNA3 has an L3 cache. Cache levels on GPUs are usually muddied enough that the naming does not matter, but architecturally the distinction does apply. RDNA3 uses its L3 as a victim cache that ultimately sits next to the memory (controller), while Nvidia decided to enlarge the L2 instead. It's one big optimization game, with cache hit rate and cache size being the main (but not the only) considerations. All in all, it seems to play out quite evenly in this generation, at least in terms of memory bandwidth, effective or not.
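To illustrate what "victim cache" means, here is a generic textbook model, not AMD's actual design: two small LRU caches where the outer level (standing in for the L3) is filled only with lines evicted from the inner level (the L2), and a hit in the outer level moves the line back up (an exclusive arrangement, which is an assumption here). The capacities, in cache lines, are tiny and hypothetical so the effect is easy to see.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache of line addresses with least-recently-used eviction."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()

    def hit(self, addr: int) -> bool:
        if addr in self.lines:
            self.lines.move_to_end(addr)  # mark as most recently used
            return True
        return False

    def insert(self, addr: int):
        """Insert a line and return the evicted address, if any."""
        self.lines[addr] = None
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            victim, _ = self.lines.popitem(last=False)  # least recently used entry
            return victim
        return None

    def remove(self, addr: int) -> None:
        self.lines.pop(addr, None)


def run(trace, l2_lines: int, l3_lines: int) -> dict:
    """Count where each access is served; l3_lines=0 means no victim cache."""
    l2, l3 = LRUCache(l2_lines), LRUCache(l3_lines)
    served = {"l2": 0, "l3": 0, "vram": 0}
    for addr in trace:
        if l2.hit(addr):
            served["l2"] += 1
            continue
        if l3_lines and l3.hit(addr):
            served["l3"] += 1
            l3.remove(addr)  # exclusive: the line moves back up to the L2
        else:
            served["vram"] += 1
        evicted = l2.insert(addr)
        if evicted is not None and l3_lines:
            l3.insert(evicted)  # victim cache: filled only by L2 evictions
    return served


trace = [0, 1, 2] * 4  # cyclic working set of 3 lines
print("L2 only:       ", run(trace, l2_lines=2, l3_lines=0))
print("L2 + victim L3:", run(trace, l2_lines=2, l3_lines=2))
```

With a 3-line working set, the 2-line L2 alone thrashes and every access goes to VRAM; adding the 2-line victim cache turns every access after the first three into an L3 hit.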

Whether a game (or an application, for that matter) ends up more compute-limited or bandwidth-limited varies. A lot. Something that relies on memory bandwidth probably has an easier time on the 7900 XTX, both because of its larger cache and because it can utilize its shaders more effectively, while the 4090's bigger shader array is more easily starved (the 4090 simply has about 30% more compute power that needs to be fed with data). A game can even be limited by something else entirely: there are games that perform better on the 7900 XTX than on the 4090, which can probably be attributed in large part to the 4090 having fewer ROPs and a lower pixel fill rate.
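The compute-versus-bandwidth question can be sketched with the classic roofline model: attainable throughput is the lesser of peak compute and bandwidth times arithmetic intensity (work done per byte moved). The numbers below are normalized and hypothetical: two cards with the same effective bandwidth, one with about 30% more compute, loosely mirroring the 4090 versus 7900 XTX comparison above. The model ignores ROPs and every other bottleneck.

```python
def attainable(peak_compute: float, bandwidth: float, intensity: float) -> float:
    """Roofline: throughput is capped by compute or by bandwidth * arithmetic intensity."""
    return min(peak_compute, bandwidth * intensity)


# Normalized, hypothetical cards: equal effective bandwidth, card B has ~30% more compute.
CARD_A = {"compute": 1.0, "bandwidth": 1.0}
CARD_B = {"compute": 1.3, "bandwidth": 1.0}

for intensity in (0.25, 0.5, 1.0, 2.0, 4.0):
    a = attainable(CARD_A["compute"], CARD_A["bandwidth"], intensity)
    b = attainable(CARD_B["compute"], CARD_B["bandwidth"], intensity)
    print(f"intensity {intensity:>4}: A={a:.2f}  B={b:.2f}  B/A={b / a:.2f}")
```

At low intensity both cards are bandwidth-bound and tie; the extra compute only shows up once the workload becomes compute-bound, which is also why more effective bandwidth stops helping once a card hits its compute roof.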

I wouldn't say the 7900 XTX is balanced horribly. It is simply balanced toward different strengths, in part ending up weaker in compute power, which is uncharacteristic for AMD. And from what it looks like so far, games have finally started relying more on compute, unfortunately for AMD.

Waste of time. Everything I've said is correct, I don't need this discussion. Some people are here, not to learn, but just to argue for their ego's sake.