It is super unpopular in DIY, but that's a subset of the real market.

I'm struggling to understand why anyone would choose a 9700X instead at its current price of $305. Perhaps the 9600X at $180, but even then, $60 more gets you more than 3x the cores, and cheaper motherboards mean it works out to roughly $30 more for the ARL chip...

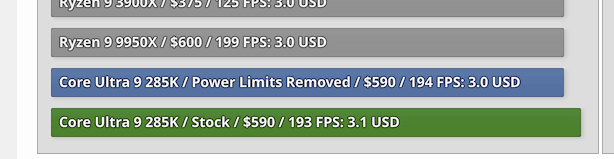

The "AMD is much better for gaming" reputation comes mostly from the X3D chips, which are almost twice the price, especially once you factor in motherboards. Against standard Zen 5? The 265K is faster than the 9900X in applications and is essentially a 7700X in games, while the 9700X is only 5% faster, and that's with a 5090. That's at essentially the same efficiency, with the 200S (warranty) boost turned off, and with ARL running slower RAM than it's rated for. Can anyone make a case for Zen 5 here? I find it a weak choice except at the high end, with the 9800X3D/9950X3D. Maybe a 9600X3D at ~$250 changes things...

I think gaming performance charts generated with an RTX 5090 really throw off people's understanding of the actual relative performance with the GPUs they own. Not to mention the general tendency to ignore the application performance charts entirely.

The simple answer is upgradability. I was 99% sure I would be able to upgrade my CPU on AM5 to a new generation even before the 9000 series was announced, and I'm 98% sure I will be able to get a Zen 6 upgrade. Can you say that for Intel? That's their fundamental problem: every Intel platform is a dead end.

AMD is most popular for its 3D cache chips, as you pointed out, as well as Linux performance, SoCs, platform longevity, and an all-P-core architecture. To a lesser extent, AVX-512 and SMT could attract some buyers. These attributes can be worth a premium to enough customers.

Edit: looks like Geofrancis likes the platform longevity.

As rarely as I agree with dgianstefani, he has a point here. This is unpopular with you and other TPU users, who are experienced enough to make a sound choice as DIY buyers. But the thing is that these CPUs are sold en masse by Intel to OEMs/SIs. This has never changed, and won't change any time soon, as AMD is still a niche player in the consumer market (limited mostly to DIY customers in the US and EU), since almost all of its supply goes to EPYC and MI.

Don't get me wrong. I'm not preaching for either company. These are just products with their strong and weak points.

There are areas where Intel CPUs were and still are dominant: embedded, industrial, and other manufacturing fields, where CPUs are bought in droves and AMD has basically zero availability. Also, those who have bought Intel their whole lives will continue to do so. These price cuts are aimed exactly at them, and this is the backbone of all CPU sales.

At this point, Intel CPUs, unless they have serious silicon/die manufacturing defects, are a pretty great deal. As much as I dislike Intel, I must confess that the entire x86 computing and software ecosystem revolves around them. It's basically a plug-and-play experience.

That's why most companies are lazy, or cautious about trying "new" endeavours. Their bias is also fueled by the Wintel-Nvidia lobbies that still exist here after all these years, as if AMD's Athlon and Ryzen never happened. You guys have no idea to what extent...

Another factor is uneducated clients who know nothing about the IT and CPU market, but have "been told" or heard "claims" that Intel is "more stable", along with other fairy tales that dominate the market and companies' decisions at the upper level, due to Intel's corporate influence.

This hurts small businesses the most. They buy the cheapest all-rounder, regardless of platform/socket longevity and upgradability.

Both companies and their clients just see the "more cores" labels and buy that (more cores was AMD's own old game, at which Intel has now completely outplayed them), with no idea of the potential issues with heterogeneous cores.

And they still don't know that Ryzen has had five solid generations and several refreshes, with their issues mostly ironed out. Not to mention EPYC, which has simply wiped the floor with Xeon for a good half-decade.

Another factor is that despite AMD's superiority in many areas, its products are also more expensive. And there's simply no competition at the entry level/low end, where an entire 12600K/B660 rig can be bought for just under $500. Try doing the same with AMD's "alternatives": AMD ends up about $50-$100 more expensive at every level. At least here.

You may say this is just one small local market, but this is exactly what Intel relies on: individual markets outside the US and EU, where they can sell their products in bulk to OEMs or simply biased suppliers. These are markets that never show up in the "big" outlets and financial reports.

Unless AMD starts treating itself as a "worthy", reliable, solid rival, there's nothing consumers can do. No amount of tantrums about AMD's superiority will help when Intel's "inferior" SKUs dominate mindshare and sales globally. AMD must invest in consumer software and hardware support and validation.