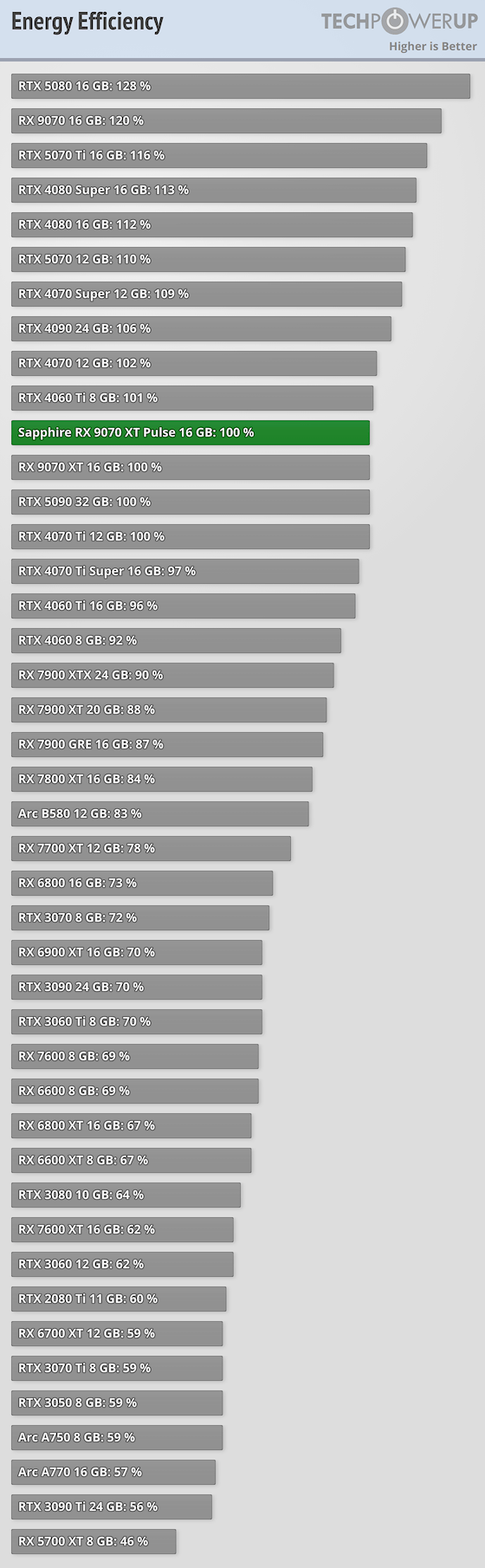

The RTX 5090 is not as efficient as the other RTX 50 Series GPUs; even some (almost all) RTX 40 Series GPUs have better efficiency. Like the RX 9070 XT, the RTX 5090 does not scale well when it comes to power consumption, because both are heavily overclocked out of the box. At stock settings they run well past their efficiency sweet spot, so undervolting (UV) is a must.

Energy efficiency calculations are based on measurements in Cyberpunk 2077, Stalker 2 and Spider-Man 2. We record power draw and frame rate (FPS) to calculate the energy efficiency of the graphics card as it operates.
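As a rough illustration of that kind of calculation, here is a minimal sketch that assumes efficiency is expressed as frames per second per watt and averaged across the tested games. The exact formula behind the chart is not stated, so the metric, the function names and the sample numbers below are assumptions for illustration only, not real measurements.

```python
from statistics import mean


def fps_per_watt(avg_fps: float, avg_power_w: float) -> float:
    """Efficiency of one run, assumed here to be FPS divided by power draw in watts."""
    if avg_power_w <= 0:
        raise ValueError("power draw must be positive")
    return avg_fps / avg_power_w


def card_efficiency(samples: dict[str, tuple[float, float]]) -> float:
    """Average FPS-per-watt over all games.

    samples maps a game name to (average FPS, average power draw in watts).
    """
    return mean(fps_per_watt(fps, watts) for fps, watts in samples.values())


# Placeholder values, NOT measured data.
example_card = {
    "game_a": (100.0, 400.0),
    "game_b": (60.0, 300.0),
}

print(f"{card_efficiency(example_card):.3f} FPS per watt")
```

Averaging the per-game ratios keeps one very high-FPS title from dominating the result; dividing total FPS by total watts would be an equally defensible choice and can give a slightly different ranking.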

Even though the RTX 5080 is only 15% faster than the RTX 4080, it is still a far better deal than the RTX 5090.

The RTX 5090 is 52.5% faster at 4K but costs 140% more in comparison, which makes it a terrible deal as a gaming GPU. The choice here is between "worst" and "the worst".
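To put those two percentages on one scale, here is a small sketch of the performance-per-price arithmetic. It only uses the 52.5% and 140% figures quoted above; the function name is made up for illustration, and it assumes both percentages are relative to the same baseline card.

```python
def relative_value(perf_gain_pct: float, price_increase_pct: float) -> float:
    """Performance per unit of money relative to the baseline card (baseline = 1.0)."""
    relative_perf = 1 + perf_gain_pct / 100
    relative_price = 1 + price_increase_pct / 100
    return relative_perf / relative_price


ratio = relative_value(52.5, 140.0)
print(f"Performance per unit of money vs. baseline: {ratio:.3f}")
print(f"That is {(1 - ratio) * 100:.1f}% less performance for the money")
```

With these inputs the ratio is 1.525 / 2.4, roughly 0.64, so each unit of money buys about 36% less 4K performance than on the card it is compared against.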