Just got a new monitor that supports DP 1.2, 1.3 and 1.4, versus the old one's 1.2, and I'm seeing the exact same readings

Wondering if GPU-Z could be incorrect, or if I've been ripped off by a shoddy cable from amazon that claims to be 1.4, but is not

Is your monitor 4K/60 10-bit? If so, the answer is rather simple. Your monitor does not need more bandwidth than DP 1.2 can provide, even if it has a DP 1.4 port. A monitor defaults to the lowest DP link rate it needs to convey the image, even if its IC and ports support a higher transmission rate.

- 4K/60 8-bit RGB image needs ~12.5 Gbps - DP 1.2 can do this

- 4K/60 10-bit RGB image needs ~16.5 Gbps - DP 1.2 can do this too
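As a rough check of the two figures above, here is a minimal sketch that multiplies a pixel clock by the bits per pixel and compares the result with the payload of a four-lane HBR2 (DP 1.2) link after 8b/10b encoding. The 533.25 MHz pixel clock is an assumed reduced-blanking timing for 3840x2160 at 60 Hz; the exact figure depends on the blanking your monitor uses, so the results only land in the same ballpark as the numbers quoted. The function names are made up for illustration.

```python
# Per-lane line rate of HBR2 (DP 1.2), number of lanes, and 8b/10b efficiency.
HBR2_LANE_GBPS = 5.4
LANES = 4
ENCODING_EFFICIENCY = 0.8  # 8 payload bits for every 10 bits on the wire


def required_gbps(pixel_clock_mhz, bits_per_component, components=3):
    """Uncompressed video data rate in Gbps for an RGB (3-component) signal."""
    return pixel_clock_mhz * bits_per_component * components / 1000


def hbr2_payload_gbps():
    """Usable payload of a 4-lane HBR2 link in Gbps."""
    return HBR2_LANE_GBPS * LANES * ENCODING_EFFICIENCY


# Assumption: reduced-blanking timing for 3840x2160 @ 60 Hz.
PIXEL_CLOCK_MHZ = 533.25

for depth in (8, 10):
    need = required_gbps(PIXEL_CLOCK_MHZ, depth)
    fits = need <= hbr2_payload_gbps()
    print(f"{depth}-bit RGB: {need:.2f} Gbps, fits HBR2: {fits}")
```

Both bit depths come out below the HBR2 payload under these assumptions, which is the point being made: a 4K/60 10-bit panel has no need for HBR3.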

DisplayPort versions do not lock features to a particular link rate. For example, HDR was defined in the DP 1.4 standard, but it can also run over a DP 1.2 link if the monitor does not need more bandwidth and the vendor implemented the feature on a DP 1.2 link.

Extract from the DisplayPort article on Wikipedia to illustrate this point:

"HDR extensions were defined in version 1.4 of the DisplayPort standard. Some displays support these HDR extensions, but may only implement HBR2 transmission mode if the extra bandwidth of HBR3 is unnecessary (for example, on 4K 60 Hz HDR displays). Since there is no definition of what constitutes a "DisplayPort 1.4" device, some manufacturers may choose to label these as "DP 1.2" devices despite their support for DP 1.4 HDR extensions. As a result, DisplayPort "version numbers" should not be used as an indicator of HDR support. "

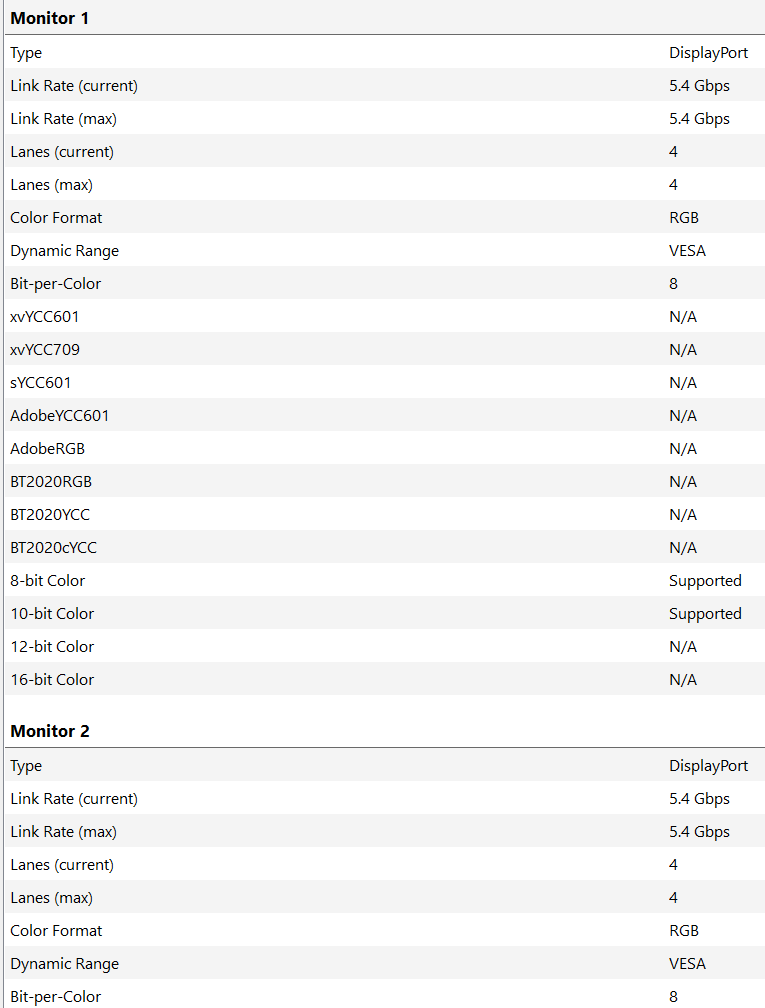

DP specs also include clock rates, so it's hard to tell what DP version this is running at without seeing those clock rates or HBR versions listed

In AMD's Adrenalin software you can see the pixel clock and other information. Strangely, this is not displayed in GPU-Z.

You can easily calculate pixel clock for specific image on a monitor.
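A minimal sketch of that calculation: the pixel clock is the total number of pixels per frame (active plus blanking) times the refresh rate. The function name is hypothetical, and the horizontal and vertical totals below are assumptions for illustration: 4400 x 2250 is the CTA-861 timing commonly used for 3840x2160 at 60 Hz, and 4000 x 2222 is a reduced-blanking timing. The real totals for your display are the ones shown in a tool such as CRU.

```python
def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock in MHz from total (active + blanking) pixels and lines."""
    return h_total * v_total * refresh_hz / 1_000_000


# Assumed CTA-861 style totals for 3840x2160 @ 60 Hz.
print(pixel_clock_mhz(4400, 2250, 60))

# Assumed reduced-blanking totals for the same active resolution.
print(pixel_clock_mhz(4000, 2222, 60))
```

The active resolution is the same in both cases; only the blanking differs, and that alone moves the pixel clock by roughly 60 MHz.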

Is it because the link is still 5.4 Gbps, but with compression, so GPU-Z can't report the higher link speed?

Link speed and compression are separate things. If DSC is used, data packets would still run over the same link speed and this link speed would be visible.

Some monitors with a DP 1.2 IC can run DSC 1.1 at up to a 3:1 ratio; DSC 1.1 was introduced before DP 1.4(a) brought DSC 1.2(a). DSC 1.1 was not an integrated feature of the DP 1.2 spec, but monitor vendors could include it if the monitor needed more bandwidth than its ports could provide.

Using CRU the displayport and HDMI timings are very, very different on this display. That's weird.

The reason the link speed went down to DP 1.1, i.e. 2.7 Gbps per lane, is that Nvidia's software changed the output from RGB to 4:2:2 chroma subsampling. Or did you change it? Chroma 4:2:2 needs even less bandwidth on a 4K/60 display, roughly 8 Gbps at a roughly 520 MHz pixel clock, so the display link defaults to DP 1.1 with HBR, as it does not need HBR2 for such an image.
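To make the arithmetic concrete, here is a small sketch that picks the lowest DisplayPort transmission mode whose four-lane payload covers a given pixel clock and bits per pixel. It follows the premise in this thread that the link settles on the lowest sufficient rate, and it ignores everything else that real link training takes into account (audio, protocol overhead, lane count negotiation), so treat it as a back-of-the-envelope model. The function names are hypothetical.

```python
# Per-lane line rates in Mbps for the DisplayPort transmission modes.
LINK_RATES = [("RBR", 1620), ("HBR", 2700), ("HBR2", 5400), ("HBR3", 8100)]


def payload_mbps(lane_mbps, lanes=4):
    """Usable payload in Mbps after 8b/10b encoding (8 of every 10 bits)."""
    return lane_mbps * lanes * 8 // 10


def lowest_sufficient_mode(pixel_clock_mhz, bits_per_pixel, lanes=4):
    """Return the first mode whose payload fits the video data rate, or None."""
    required = pixel_clock_mhz * bits_per_pixel  # MHz x bits = Mbps
    for name, lane_mbps in LINK_RATES:
        if required <= payload_mbps(lane_mbps, lanes):
            return name
    return None


# 4K/60 at an assumed 520 MHz pixel clock:
print(lowest_sufficient_mode(520, 16))  # 8-bit 4:2:2 averages 16 bits per pixel
print(lowest_sufficient_mode(520, 24))  # 8-bit RGB
print(lowest_sufficient_mode(520, 30))  # 10-bit RGB
```

With these inputs the 4:2:2 signal needs 8320 Mbps, which fits in the 8640 Mbps payload of a four-lane HBR link, while both RGB cases need HBR2.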

Tried another DP cable this one rated for 8K, same result - bandwidth doesn't go past DP 1.2 settings

I hope you understand now, from my explanations, why this was not necessary.

Also, DP and HDMI have slightly different timings because of fine-tuning by the vendor. An 18 Gbps HDMI 2.0 link defaults to a 594 MHz pixel clock, whereas DP leaves more flexibility for tuning the pixel clock (520-540 MHz) and the blanking intervals.

My HDMI 1.2 display has a single HDMI 2.0 port, and using a DP 1.4 to HDMI 2.0 adaptor, i see the higher link speeds

This is necessary because HDMI 2.0 sends 6 Gbps on each of its three data lanes (18 Gbps in total), so the adapter must use HBR3 at 8.1 Gbps per lane to accommodate the HDMI bandwidth. DP 1.2 at 5.4 Gbps per lane would not be able to fit the 6 Gbps per lane of the HDMI signal. Any DP-to-HDMI 2.0 adapter must support HBR3. If not, the adapter could support only an HDMI 1.4b signal.

Why offer DP 1.4 if it doesn't get used?!?

Good question. It's because the IC in this monitor model is more advanced, due to its support for 10-bit and HDR. Such ICs come with DP 1.4 support by default, in anticipation of displays that need more bandwidth. That's why DP 1.4 is there. I am sure LG used this IC in 4K displays with higher refresh rates that can use the provided bandwidth. For them, using one IC across a range of different 4K monitors saves a lot of cost. You simply have a monitor with a faster port than it needs, but the main reason you have this IC is 10-bit with HDR inside the monitor. Resolution is secondary here. They can install the same IC in 60 Hz, 120 Hz or 144 Hz monitors.

The monitor and the GPU use the DP 1.4 standard, but that doesn't mean they need to use the best transmission mode within that standard. If a lower transmission mode is good enough for the resolution and refresh rate the monitor is using, it doesn't need anything more.

This.

This seems to be the issue with my DP "1.4" display, as it doesn't use HBR3 or DSC at all, but may support one of the other features like more audio channels

Yes, I explained this above. It's about the IC's capability for 10-bit panels. 60 Hz monitors simply do not need more bandwidth. Even 4K/75 Hz can run on a 5.4 Gbps-per-lane link.

As this goes back to my original question of whether GPU-Z was inaccurate or my monitor dishonest: having tried three brands and four VESA-certified "8K" cables, I'm going to say the monitor is the problem

I don't think there was a problem here anywhere.

DP 1.4 enables a higher compression mode - that may not be supported or used - new audio standards, and in theory uses less bandwidth for the same settings

As said, the IC in your monitor needs to deal with a 10-bit signal and HDR. That's the most significant advancement in silicon routinely enabled with the DP 1.4 standard, alongside other features your monitor simply does not need.

DP 1.4 is required to enable HDR

As said above, HDR was defined for DP 1.4, but HDR can also be used by displays running on an HBR2 link, like yours.

And here we go with an active DP to HDMI cable, which is showing HBR3 as supported but not active

HBR3 must be supported in cables that convert the signal to HDMI 2.0, as explained above. 6 Gbps per lane on HDMI cannot run on an HBR2 link.

All in all, I cannot see anything wrong with your display. Does it run as intended now?