
| System Name | THU |

|---|---|

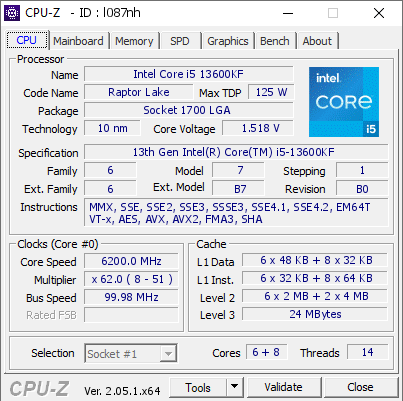

| Processor | Intel Core i5-13600KF |

| Motherboard | ASUS PRIME Z790-P D4 |

| Cooling | SilentiumPC Fortis 3 v2 + Arctic Cooling MX-2 |

| Memory | Crucial Ballistix 2x16 GB DDR4-3600 CL16 (dual rank) |

| Video Card(s) | MSI GeForce RTX 4070 Ventus 3X OC 12 GB GDDR6X (2610/21000 @ 0.91 V) |

| Storage | Lexar NM790 2 TB + Corsair MP510 960 GB + PNY XLR8 CS3030 500 GB + Toshiba E300 3 TB |

| Display(s) | LG OLED C8 55" + ASUS VP229Q |

| Case | Fractal Design Define R6 |

| Audio Device(s) | Yamaha RX-V4A + Monitor Audio Bronze 6 + Bronze FX | FiiO E10K-TC + Koss Porta Pro |

| Power Supply | Corsair RM650 |

| Mouse | Logitech M705 Marathon |

| Keyboard | Corsair K55 RGB PRO |

| Software | Windows 10 Home |

| Benchmark Scores | Benchmarks in 2025? |

If you only need gaming performance, there is no reason to switch. But it is just a simple CPU swap.

> Best for gaming, and it also has good app performance. I'm not sure whether I should switch from the 12600K. It is more logical to wait for the 14th generation.

14th gen will require a new motherboard. We do not know much about Meteor Lake right now, except that it will use multiple dies in a single package. Rumors say, however, that it will not be able to clock as high as Alder Lake and Raptor Lake, which are built on a very mature process. 14th gen will be the first one to use the new Intel 4 process. I would not be surprised if gaming performance were actually lower because of that.

I do not think it is a major architectural change for the CPU cores themselves. I think the main purpose is to switch to the multi-chip approach. It should bring much better efficiency, but not higher peak performance. Mobile is the main focus here.