- Joined: Jun 8, 2011
- Messages: 17,884 (3.47/day)
- Location: Somerset, UK

| System Name | Not so complete or overkill - There are others!! Just no room to put! :D |
|---|---|
| Processor | Ryzen Threadripper 3970X |
| Motherboard | Asus Zenith 2 Extreme Alpha |
| Cooling | Lots!! Dual GTX 560 rads with D5 pumps for each rad. One rad for each component |
| Memory | Viper Steel 4 x 16GB DDR4 3600MHz not sure on the timings... Probably still at 2667!! :( |
| Video Card(s) | Asus Strix 3090 with front and rear active full cover water blocks |
| Storage | I'm bound to forget something here - 250GB OS, 2 x 1TB NVME, 2 x 1TB SSD, 4TB SSD, 2 x 8TB HD etc... |
| Display(s) | 3 x Dell 27" S2721DGFA @ 7680 x 1440P @ 144Hz or 165Hz - working on it!! |
| Case | The big Thermaltake that looks like a Case Mods |
| Audio Device(s) | Onboard |
| Power Supply | EVGA 1600W T2 |
| Mouse | Corsair thingy |
| Keyboard | Razer something or other.... |
| VR HMD | No headset yet |
| Software | Windows 11 OS... Not a fan!! |
| Benchmark Scores | I've actually never benched it!! Too busy with WCG and FAH and not gaming! :( :( Not OC'd it!! :( |

Hey guys, I'm hoping there's still a chance that someone in this amazing forum can help me fix a RAID 10 array.

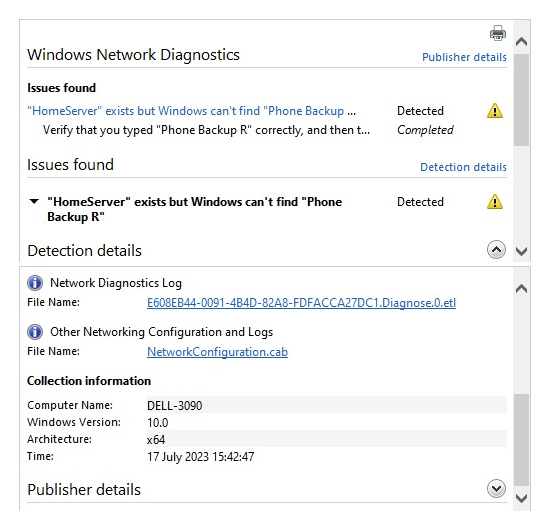

On Sunday morning, while I was going through the backup of my Synology box and acting too quickly, I lost access to my Synology. When I try to access it, it errors but still shows the shared folders I have made on the system. Like so....

I did manage to get this to work by installing Ubuntu and running a few commands that I had picked up from the Synology help links -

Like so..

But while I was trying to copy across some other folders, I believe two of the drives dropped out, and then even more fun array stuff started happening... i.e. it stopped working.

When it stopped working, I was slightly panicked and stupidly removed the drive data cables rather than the power, then tested each drive to make sure they worked, which they did.

I've been trying to find a command or something to help repair the array. I've even tried swapping the drives around so that the array matches the first picture, but without success. When I try to be clever and test one drive at a time, Ubuntu puts the same label on the drive, so whenever there's just one drive in the system, it will go to SBB3.... If there was a way of reading the information so I could find a serial number for each drive letter, I'd gladly go through and try to put it back how it was, but it seems that would be too easy, and I hardly know Ubuntu well enough to do something like that.
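For the serial-number question, one possible approach is sketched below. It assumes a typical Ubuntu install where udev creates symlinks under `/dev/disk/by-id` whose names usually embed the drive model and serial number; the function name `map_devices_to_ids` is purely illustrative. It only reads symlinks, so it should not touch the array itself.

```python
import os
from collections import defaultdict


def map_devices_to_ids(by_id_dir="/dev/disk/by-id"):
    """Return {kernel device name: [by-id link names pointing at it]}.

    Assumption: by_id_dir contains udev-style symlinks whose names
    include the drive model and serial number.
    """
    mapping = defaultdict(list)
    if not os.path.isdir(by_id_dir):
        # Directory missing (e.g. not a Linux box): nothing to report.
        return {}
    for link_name in sorted(os.listdir(by_id_dir)):
        link_path = os.path.join(by_id_dir, link_name)
        if not os.path.islink(link_path):
            continue
        # Resolve the symlink to the device node it points at.
        target = os.path.realpath(link_path)
        mapping[os.path.basename(target)].append(link_name)
    return dict(mapping)


if __name__ == "__main__":
    for device, ids in sorted(map_devices_to_ids().items()):
        print(device, "->", ", ".join(ids))
```

Running this with all drives connected and noting which serial belongs to which device name would give a record that survives the drives being re-plugged in a different order, since the kernel device names can change between boots but the serial numbers do not.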

I've tried re-enabling the array, but I'm not sure what to really do and I don't want to risk damaging things even further. I have been able to gather information from each of the drives in the array -
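In case it helps anyone comparing the per-drive information, here is a rough sketch for pulling the key fields out of saved `mdadm --examine` text. It assumes the output uses `Key : value` lines with field names such as `Array UUID`, `Events`, `Device Role` and `Array State`, as version 1.x metadata normally prints them; the helper names `parse_examine` and `summarize` are made up for illustration. It works on text only and never touches the drives.

```python
def parse_examine(text):
    """Parse text printed by `mdadm --examine <partition>` into a dict.

    Assumption: each field is printed as 'Key : value' on its own line.
    Lines without ' : ' (such as the '/dev/...:' header) are skipped.
    """
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(" : ")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def summarize(fields):
    """Keep only the fields most useful for comparing array members."""
    wanted = ("Array UUID", "Raid Level", "Events", "Device Role", "Array State")
    return {name: fields.get(name) for name in wanted}
```

Comparing the summaries side by side should show whether every member reports the same `Array UUID` and how far the `Events` counts have drifted apart, which is usually the first thing people ask for before suggesting any assemble command.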

I would dearly love to get the remaining data from the array. There's quite a bit on there, but I believe most of it has now been backed up, so I don't think I'd be losing masses. I could, however, lose more of my time regaining the data and re-sorting the few thousand photos I was very luckily able to get back while it was copying nicely from Ubuntu the first time, before it went silly. I'm not 100% sure what happened, but I believe I might have slightly tapped a cable or something that caused the issue.

Is there anyone out there who can save me from this mess?? Is there anything else you might require? I've got the drives installed on a separate machine now, with the latest Ubuntu install, 22.04 I believe it is?? I can't wait to hear from you.

Massive thanks in advance.
