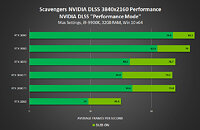

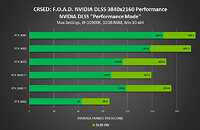

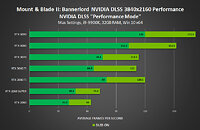

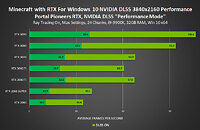

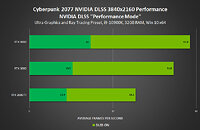

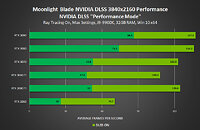

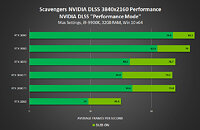

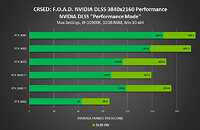

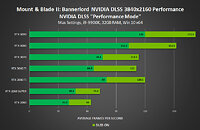

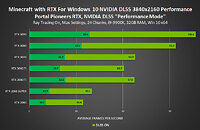

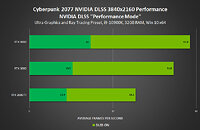

NVIDIA's Deep Learning Super Sampling (DLSS) technology uses advanced methods to offload sampling in games to the Tensor Cores, the dedicated AI processors present on all GeForce RTX cards, from the prior Turing generation to the current Ampere. NVIDIA claims that enabling DLSS can boost performance by up to 40%, and in some cases even more. Today, the company announced that DLSS support is coming to Cyberpunk 2077, Minecraft RTX, Mount & Blade II: Bannerlord, CRSED: F.O.A.D., Scavengers, and Moonlight Blade. These additions bring the number of titles supporting DLSS to 32, which is no small feat for a new technology.

Below, you can see the company-provided charts showing DLSS performance in the new titles, except for Cyberpunk 2077.

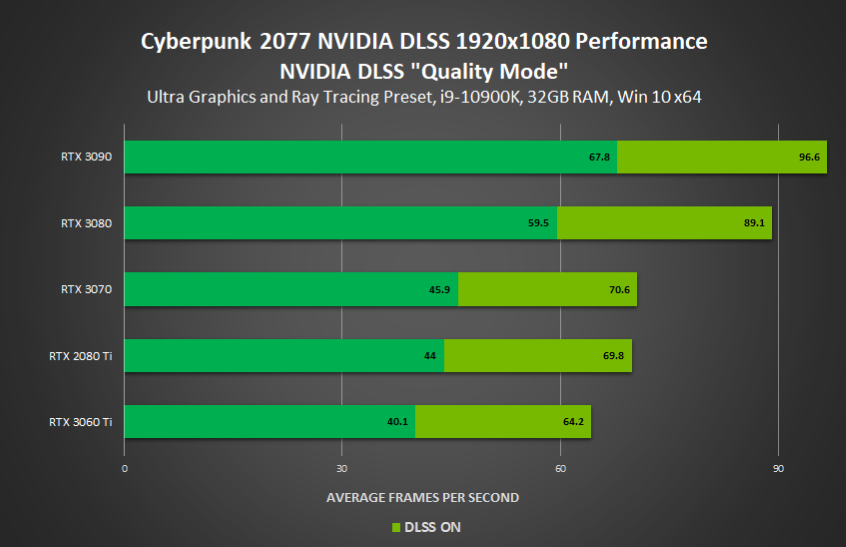

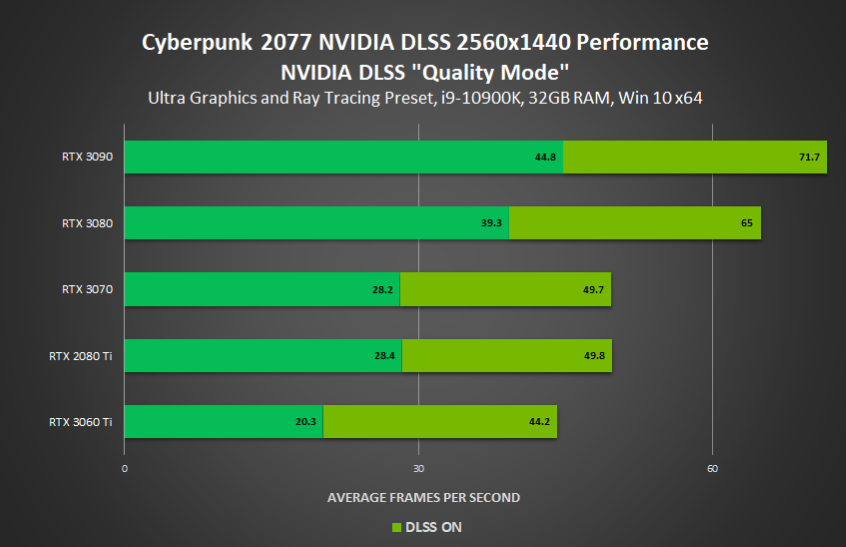

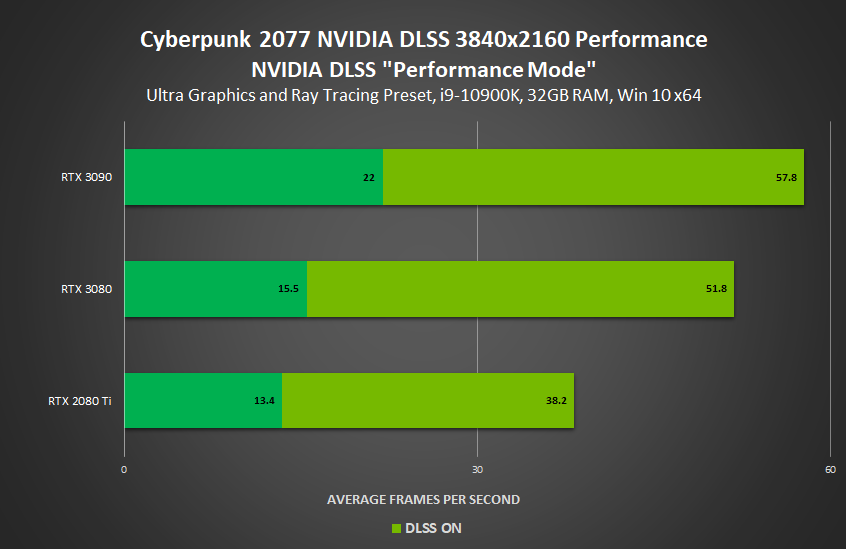

Update: The Cyberpunk 2077 performance numbers were leaked (thanks to kayjay010101 on TechPowerUp Forums), and you can check them out as well.

View at TechPowerUp Main Site
