Ruru

S.T.A.R.S.

- Joined

- Dec 16, 2012

- Messages

- 14,102 (3.06/day)

- Location

- Jyväskylä, Finland

| System Name | 4K-gaming / console |
|---|---|
| Processor | 5800X @ PBO +200 / i5-8600K @ 4.7GHz |
| Motherboard | ROG Crosshair VII Hero / ROG Strix Z370-F |
| Cooling | Custom loop CPU+GPU / Custom loop CPU |
| Memory | 32GB DDR4-3466 / 16GB DDR4-3600 |
| Video Card(s) | Asus RTX 3080 TUF / Powercolor RX 6700 XT |
| Storage | 3TB SSDs + 3TB / 372GB SSDs + 750GB |
| Display(s) | 4K120 IPS + 4K60 IPS / 1080p projector @ 90" |
| Case | Corsair 4000D AF White / DeepCool CC560 WH |
| Audio Device(s) | Sony WH-CH720N / Hecate G1500 |
| Power Supply | EVGA G2 750W / Seasonic FX-750 |
| Mouse | MX518 remake / Ajazz i303 Pro |
| Keyboard | Roccat Vulcan 121 AIMO / Obinslab Anne 2 Pro |
| VR HMD | Oculus Rift CV1 |
| Software | Windows 11 Pro / Windows 11 Pro |
| Benchmark Scores | They run Crysis |

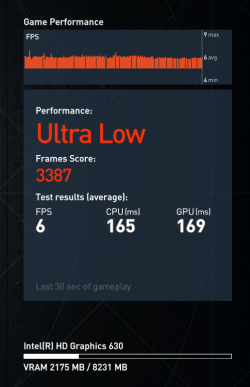

Well, what can I say. Completed FEAR with a GF4 Ti 4200 64MB and Crysis with a 6800 GS. That was truly years ago. IIRC FEAR was at about the minimum settings, and Crysis was at 1024x768 low (effects high), though I had to drop to 800x600 in the last level.

Still finished both tho. I kinda miss those days when a constant 60fps wasn't that necessary.
