@Vayra86

Even if Nvidia says FreeSync wins, they will still provide backward-compatibility support for G-Sync. You only have two options for graphics cards anyway. It's one or the other. It's not like you are losing out on a breadth of options. The reason Nvidia dominates the market is that most of its user base is loyal and will keep buying Nvidia cards. This was a thing before G-Sync and will most likely still be a thing. So being locked into Nvidia isn't as big of a negative to many (read: not all) of them.

While the price premium does suck (not arguing this) at least you get something for that premium - superior tech. Many people are quite ok with paying a premium for better technology. I am. I supported SSDs when they were new and expensive. If I get something for my money it's not as evil as many make it out to be. To be clear, since people are analogy happy, I am not comparing G-Sync to SSDs. Only saying I personally don't mind paying a price premium for better tech.

@FordGT90Concept

The Betamax player analogy is really pointless. You should not need me to tell you Betamax Cassettes != a friggin monitor. The similarities exist only in your head. Stop with the analogies. We get it - you hate G-Sync and Nvidia. Instead of trying to think of analogies, focus on differences in the technology.

Now if you go back and read my posts you will see that I agree with you that AMD has Nvidia by the short ones and that Nvidia has a track record of bad habits. Where I don't agree with you is that it's not as simple as you make it out to be. That is a very clear and distinct difference.

First you make it sound like all of a sudden some recent information came out that sounded the death knell for G-Sync - using DisplayPort 1.3 as your argument. Truth is what you are referring to is old news, at least by computer industry standards. VESA created Adaptive Sync in 2009 but it was not implemented. Nvidia took the opportunity to develop and release G-Sync. In response to that AMD announced FreeSync, which was VESA's Adaptive Sync. So all of a sudden a technology that wasn't pushed at all was used to combat Nvidia's release of G-Sync. Adaptive Sync was actually supported by DisplayPort 1.2a - so no... 1.3 is not when Adaptive Sync was first supported. It should be noted that 1.2a was released in 2014... and so was the spec for 1.3.

Even with that you still need a FreeSync-enabled monitor as it needs the chip. FreeSync monitors win on price. G-Sync monitors win on tech. Since Nvidia is not supporting Adaptive Sync we are back at square one... you need an AMD card for FreeSync and you need an Nvidia card for G-Sync. So we can discuss DisplayPort all we want but in the end very little has changed in that regard. In spite of the DisplayPort changes guess what... monitor manufacturers are still releasing G-Sync monitors.

A noteworthy change is Intel's integrated graphics will now support AdaptiveSync, but how many people running integrated graphics will be buying a gaming monitor?

You kind of have AMD's triumph on DisplayPort 1.3 a little off. The big win on DisplayPort 1.3 is the fact that it enables 5120×2880 displays at a 60Hz refresh rate, 1080p monitors at up to 240Hz, HDR 1440p screens at 170Hz and 3840×2160 at 144Hz. The upper-end displays will most likely have an announcement date toward the end of the year - in case anyone is curious.
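For anyone who wants to sanity-check those numbers, here is a rough back-of-the-envelope sketch in Python. It assumes DisplayPort 1.3 runs four lanes at HBR3 (8.1 Gbit/s per lane) with 8b/10b encoding, and it ignores blanking intervals, so real requirements are somewhat higher. The function names and the bits-per-pixel values (24 for SDR, 30 for 10-bit HDR) are my own assumptions for illustration.

```python
# Rough estimate only: uncompressed pixel data rate vs. DisplayPort 1.3 payload rate.
# Assumptions: 4 lanes at HBR3, 8b/10b encoding, blanking intervals ignored.

HBR3_LANE_GBPS = 8.1          # raw link rate per lane, in Gbit/s
LANES = 4
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 payload bits per 10 transmitted bits


def dp13_payload_gbps():
    """Usable payload rate of a 4-lane HBR3 link, in Gbit/s."""
    return HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY


def mode_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Pixel data rate of a video mode in Gbit/s (active pixels only)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(width, height, refresh_hz, bits_per_pixel=24):
    """True if the mode's pixel data rate is within the link's payload rate."""
    return mode_gbps(width, height, refresh_hz, bits_per_pixel) <= dp13_payload_gbps()


if __name__ == "__main__":
    modes = [
        ("5120x2880 @ 60Hz, 24 bpp", 5120, 2880, 60, 24),
        ("1920x1080 @ 240Hz, 24 bpp", 1920, 1080, 240, 24),
        ("2560x1440 @ 170Hz, 30 bpp", 2560, 1440, 170, 30),
        ("3840x2160 @ 144Hz, 24 bpp", 3840, 2160, 144, 24),
    ]
    for name, w, h, hz, bpp in modes:
        print(f"{name}: {mode_gbps(w, h, hz, bpp):.2f} Gbit/s, fits={fits(w, h, hz, bpp)}")
```

With these assumptions, 5120×2880 at 60Hz, 1080p at 240Hz and 10-bit 1440p at 170Hz all come in under the payload rate. 3840×2160 at 144Hz with 24 bits per pixel does not, so that mode would presumably need chroma subsampling or some other reduction to fit.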

The above is great but honestly what I feel is the biggest win for AMD is getting FreeSync to work over HDMI 2.0, since not all monitors support DisplayPort. This will definitely increase the number of monitors that are FreeSync certified. Granted, this is a low-cost solution since HDMI doesn't have the bandwidth DisplayPort does, so all serious gamers will still go for a DisplayPort monitor. But it does open up options in the lower price tier of monitors. So now low-cost game systems can enjoy variable refresh rates on low-cost monitors. AMD always kind of dominated on low-cost GPUs but now there is even more reason for people looking for a low-cost GPU to invest in AMD instead of Nvidia.

As far as your clarification of FreeSync and AdaptiveSync, you should realize that the two, while built on the same technology, are not interchangeable. A FreeSync certified monitor is always based on AdaptiveSync but not every AdaptiveSync monitor is FreeSync certified. Your same paragraph makes it sound like FreeSync is completely plug-n-play with DisplayPort 1.3. Again, you need an AMD graphics card for FreeSync to work just like you need an Nvidia graphics card for G-Sync. See how we came full circle again? Now when Intel releases its first line of CPUs that are AdaptiveSync enabled, then your statement becomes true, but DisplayPort 1.3 does not magically enable FreeSync on its own.

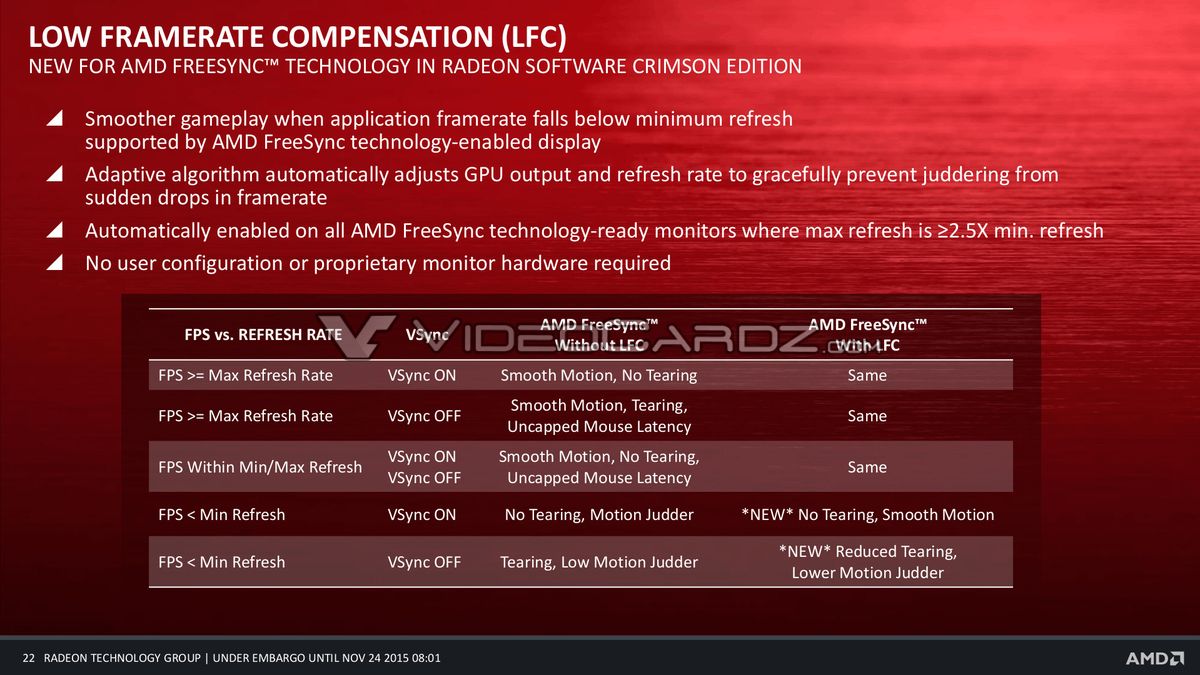

Also you should not assume every monitor's min refresh rate is 30Hz because some are actually 40Hz and above. It's not hard to dip below 40 FPS on a recent title. If you are going to buy a FreeSync monitor this is one of the most important things you should look up.
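To make that concrete, here is a tiny sketch of the check worth doing before you buy. The function name and the example ranges (30-144Hz and 40-144Hz) are hypothetical, not the specs of any particular monitor.

```python
def in_vrr_range(fps, min_hz, max_hz):
    """Return True if the frame rate falls inside the monitor's variable refresh window."""
    return min_hz <= fps <= max_hz


if __name__ == "__main__":
    # A game dipping to 35 FPS on two hypothetical monitors
    print(in_vrr_range(35, 30, 144))  # True: still inside a 30-144Hz window
    print(in_vrr_range(35, 40, 144))  # False: below a 40Hz minimum
```

Same game, same frame rate, but only the monitor with the lower minimum keeps variable refresh active during the dip.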

That list of G-Sync monitors you posted is out of date. AMD still has the advantage but there are more than 9 G-Sync monitors:

- List of FreeSync monitors
- List of G-Sync monitors

Again, the important distinction between your POV and mine is I don't think it is clear cut like you do. As I said, AMD has the upper hand and Nvidia has a bad track record with the tech it likes to push. In fact, I think I provided more examples of how AMD has the upper hand. But the analogies in this thread are severely flawed. Your information is a bit off and you paint this picture that if someone invests in a G-Sync monitor they're screwed - not true.

0 frames is acceptable? Additionally, there are some indications that eDP does take minimum refresh rate into consideration, but manufacturers are forced to provide a minimum refresh rate via EDID, so they give a higher number than the eDP can handle.


the next amd venture be getting another hsa member to make gpu's and push out nv.. poor nv needs to wear a helmet
