I've just bought this card:

https://www.techpowerup.com/gpudb/b3654/msi-gtx-1070-armor-oc

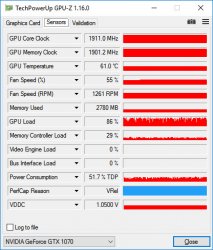

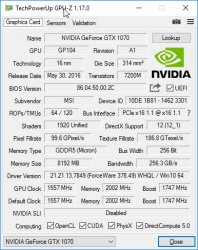

GPU-Z seems to report different clock speeds from the card's specifications: a much higher GPU clock and a lower memory clock.