- Joined

- Aug 6, 2017

- Messages

- 7,412 (2.55/day)

- Location

- Poland

| System Name | Purple rain |
|---|---|
| Processor | 10500 4.2G 1.1v |
| Motherboard | Z490 Aorus Elite |
| Cooling | Noctua D15S |
| Memory | 16GB 4133 CL16-16-16-31 Viper Steel |
| Video Card(s) | RTX 2070 Super Gaming X Trio |
| Storage | SU900 128, 8200Pro 1TB, 850 Pro 512+256+256, 860 Evo 500, XPG950 480, Skyhawk 2TB |
| Display(s) | Acer XB241YU + Dell S2716DG |
| Case | P600S Silent w. Alpenfohn wing boost 3 ARGBT+ fans |
| Audio Device(s) | K612 Pro w. FiiO E10k DAC, W830BT wireless |
| Power Supply | Superflower Leadex Gold 850W |
| Mouse | G903 lightspeed + powerplay, G403 wireless + Steelseries DeX + Roccat rest |
| Keyboard | HyperX Alloy SilverSpeed (w. HyperX wrist rest), Razer Deathstalker |
| Software | Windows 10 |
| Benchmark Scores | A LOT |

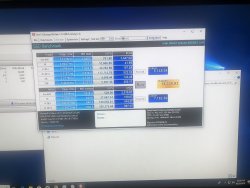

I've been looking for something to add to my SSD storage, but found that reviews are still missing for a lot of the drives currently available. So let's all chip in and build a solid base of our own benchmark scores. The rules are simple: post your Anvil's Storage Utilities score. Why Anvil? Of all the simple, fast benchmarks, it has the best range of small-file tests and is easy to read. Please specify whether the drive is full or empty and whether it's an OS or a storage drive.

Anvil download (free)

http://anvils-storage-utilities.en.lo4d.com/

Latest Intel RST driver

https://downloadcenter.intel.com/do...d-Storage-Technology-Intel-RST-?product=55005

That's my RAID0 array of two 256GB 850 Pro SSDs: 90% used, OS drive.

And my almost empty 850 Pro 512GB for comparison.

It would be no problem to store and work with a 6 TB RAW video on 4 x 2TB SSDs in RAID0. Funny thought.
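For anyone who wants to check the numbers on that, here is a rough sketch of the capacity math. It assumes the usual RAID0 behavior where usable space is the number of drives times the smallest drive, and it ignores filesystem overhead and the TB vs. TiB difference. The function name `raid0_usable_tb` is just made up for illustration.

```python
def raid0_usable_tb(drive_sizes_tb):
    """Usable capacity of a RAID0 stripe set in TB.

    Assumption: every member contributes only as much as the smallest
    drive, so capacity = number of drives * smallest drive size.
    """
    if not drive_sizes_tb:
        raise ValueError("need at least one drive")
    return len(drive_sizes_tb) * min(drive_sizes_tb)


# The case from the post: four 2 TB SSDs and a 6 TB RAW video file.
array_tb = raid0_usable_tb([2, 2, 2, 2])
file_tb = 6
print(f"{array_tb} TB array, {array_tb - file_tb} TB left after a {file_tb} TB file")
```

So the file fits with some room to spare, but keep in mind that RAID0 has no redundancy: lose one drive and the whole array is gone.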
