FordGT90Concept

"I go fast!1!11!1!"

- Joined: Oct 13, 2008
- Messages: 26,262 (4.28/day)
- Location: IA, USA

| System Name | BY-2021 |
|---|---|
| Processor | AMD Ryzen 7 5800X (65w eco profile) |
| Motherboard | MSI B550 Gaming Plus |
| Cooling | Scythe Mugen (rev 5) |
| Memory | 2 x Kingston HyperX DDR4-3200 32 GiB |
| Video Card(s) | AMD Radeon RX 7900 XT |
| Storage | Samsung 980 Pro, Seagate Exos X20 TB 7200 RPM |
| Display(s) | Nixeus NX-EDG274K (3840x2160@144 DP) + Samsung SyncMaster 906BW (1440x900@60 HDMI-DVI) |
| Case | Coolermaster HAF 932 w/ USB 3.0 5.25" bay + USB 3.2 (A+C) 3.5" bay |
| Audio Device(s) | Realtek ALC1150, Micca OriGen+ |
| Power Supply | Enermax Platimax 850w |
| Mouse | Nixeus REVEL-X |
| Keyboard | Tesoro Excalibur |
| Software | Windows 10 Home 64-bit |
| Benchmark Scores | Faster than the tortoise; slower than the hare. |

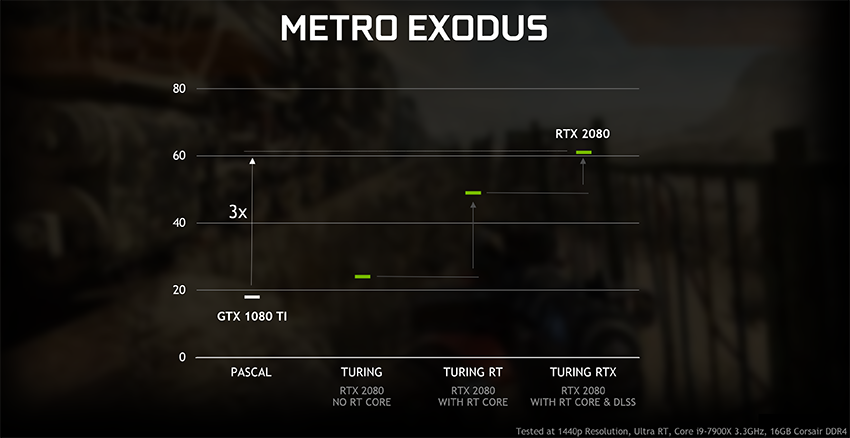

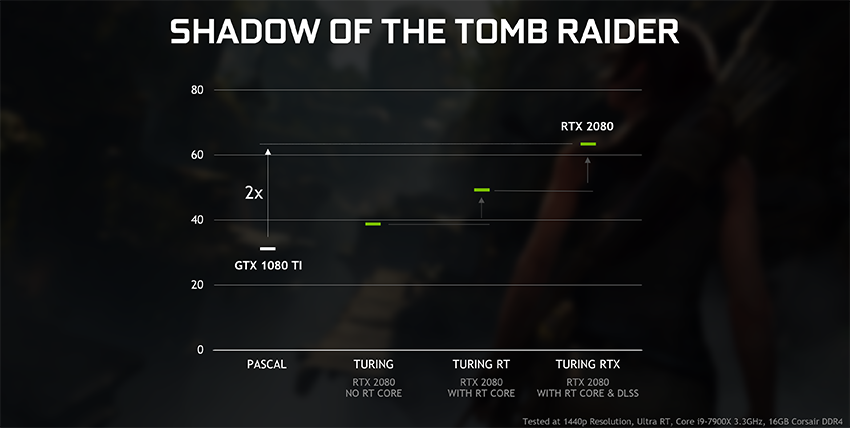

My bad, it's roughly a 40% performance drop (I was thinking of Shadow of the Tomb Raider, which I guess was just a press-only tech demo). Still, the question remains the same. DXR comes at a high price, not just in hardware but also in lost framerate and added visual noise.

)))) RTX stuff that absolutely had to use RTX cards because, for those too stupid to get it, it has that R in it! It stands for "raytracing". Unlike GTX, where G stands for something else (gravity, perhaps).
