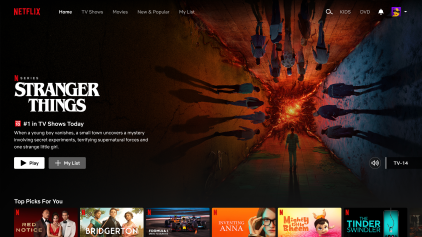

AMD's RDNA2 graphics architecture features hardware-accelerated decoding of the AV1 video format, according to a Microsoft blog post announcing the format's integration with Windows 10. The post lists the three latest graphics architectures among those that support accelerated decoding of the format: Intel Gen12 Iris Xe, NVIDIA RTX 30-series "Ampere," and AMD RX 6000-series "RDNA2." Major hardware vendors are actively promoting AV1 to online streaming content providers, as it offers 50% better compression than the prevalent H.264 (translating into a comparable bandwidth saving), and 20% better compression than VP9. You don't need one of these GPUs to use AV1; anyone on Windows 10 (version 1909 or later) can use it by installing the AV1 Video Extension from the Microsoft Store. In the absence of hardware acceleration, the codec falls back to software (CPU) decoding.
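
To put the compression figures in perspective, here is a rough back-of-the-envelope sketch. It assumes that "50% better compression" means half the bitrate at similar quality, which is one common reading and not something the blog post spells out. The function name and the example bitrates are made up for illustration and are not measured values.

```python
def estimated_bitrate(reference_kbps: float, savings: float) -> float:
    """Estimate the bitrate needed after a fractional saving over a reference codec.

    Assumption: "X% better compression" is read as "X% lower bitrate at
    similar quality". Real-world savings vary with content and encoder settings.
    """
    if not 0.0 <= savings < 1.0:
        raise ValueError("savings must be in the range [0, 1)")
    return reference_kbps * (1.0 - savings)


def gigabytes_per_hour(kbps: float) -> float:
    """Convert a bitrate in kilobits per second to gigabytes per hour (decimal GB)."""
    bits_per_hour = kbps * 1000 * 3600
    return bits_per_hour / 8 / 1e9


# Hypothetical streams, chosen only to make the arithmetic easy to follow.
h264_kbps = 8000.0  # illustrative H.264 stream
vp9_kbps = 6000.0   # illustrative VP9 stream

av1_vs_h264 = estimated_bitrate(h264_kbps, 0.50)  # 50% saving claimed over H.264
av1_vs_vp9 = estimated_bitrate(vp9_kbps, 0.20)    # 20% saving claimed over VP9

print(f"H.264 {h264_kbps:.0f} kbps -> AV1 about {av1_vs_h264:.0f} kbps")
print(f"VP9   {vp9_kbps:.0f} kbps -> AV1 about {av1_vs_vp9:.0f} kbps")
print(f"Hourly data, H.264: {gigabytes_per_hour(h264_kbps):.2f} GB, "
      f"AV1: {gigabytes_per_hour(av1_vs_h264):.2f} GB")
```

Under that reading, an hour of the hypothetical H.264 stream and an hour of its AV1 equivalent differ by half the data transferred, which is the saving streaming providers are interested in.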

View at TechPowerUp Main Site
