| System Name | ❶ Oooh (2024) ❷ Aaaah (2021) ❸ Ahemm (2017) |
|---|---|
| Processor | ❶ 5800X3D ❷ i7-9700K ❸ i7-7700K |
| Motherboard | ❶ X570-F ❷ Z390-E ❸ Z270-E |
| Cooling | ❶ ALFIII 360 ❷ X62 + X72 (GPU mod) ❸ X62 |
| Memory | ❶ 32-3600/16 ❷ 32-3200/16 ❸ 16-3200/16 |
| Video Card(s) | ❶ 3080 X Trio ❷ 2080TI (AIOmod) ❸ 1080TI |
| Storage | ❶ NVME/SSD/HDD ❷ <SAME ❸ SSD/HDD |
| Display(s) | ❶ 1440/165/IPS ❷ 1440/144/IPS ❸ 1080/144/IPS |
| Case | ❶ BQ Silent 601 ❷ Cors 465X ❸ Frac Mesh C |
| Audio Device(s) | ❶ HyperX C2 ❷ HyperX C2 ❸ Logi G432 |
| Power Supply | ❶ HX1200 Plat ❷ RM750X ❸ EVGA 650W G2 |
| Mouse | ❶ Logi G Pro ❷ Razer Bas V3 ❸ Logi G502 |
| Keyboard | ❶ Logi G915 TKL ❷ Anne P2 ❸ Logi G610 |
| Software | ❶ Win 11 ❷ 10 ❸ 10 |
| Benchmark Scores | I have wrestled bandwidths, Tussled with voltages, Handcuffed Overclocks, Thrown Gigahertz in Jail |

AMD Ryzen 5 5600X Review

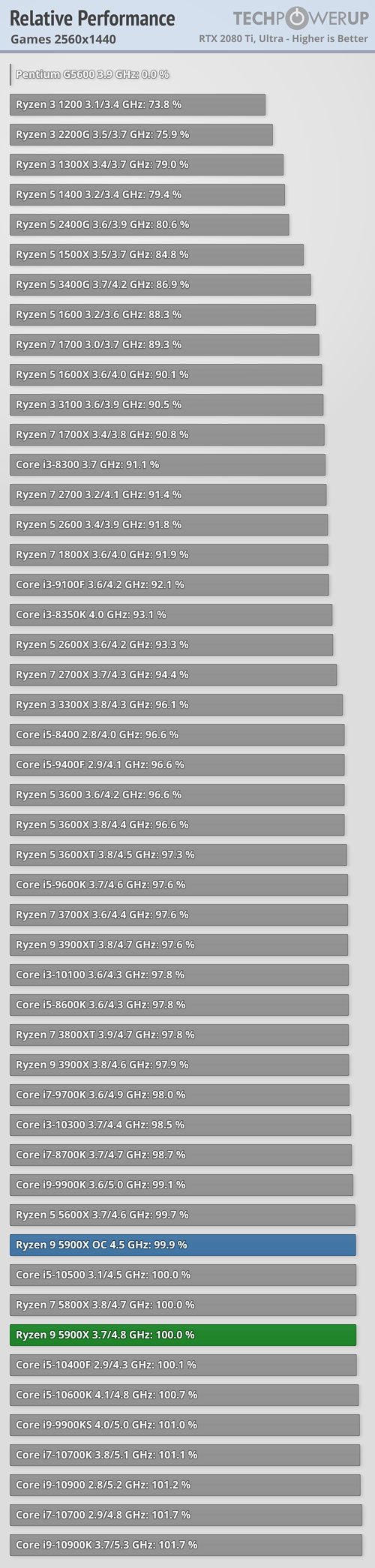

Six Zen 3 cores beating eight Zen 2 cores? That's exactly what's happening with the Ryzen 5 5600X. AMD's massive IPC gain helped it overcome a two-core deficit, even in productivity tests. The Ryzen 5 5600X redefines what you really need for a high-end gaming PC.

According to the 5600X review above, the non-K 10700 comes out ahead at 1080p in most games. It even beats the 10700K and 10900K (stock).

I'm just trying to wrap my head around how this is possible. I've always been led to believe that K-series CPUs are "marginally faster" out of the box, with the added benefit of overclocking. My Kaby Lake 7700K sees pretty wide single-threaded perf gains over the non-K 7700... "so vat da hell iz goin on with 10th Gen?"

Is the 10700 result in these reviews based on a premium Z-series board, more expensive lower-latency memory, and overclocking (as I understand it, some OC is possible on non-K parts)? Or was it based on a stock configuration with a B/H-series board and less expensive RAM? Also, I'd appreciate your thoughts on how the non-K is able to beat Intel's flagship i9 SKU.