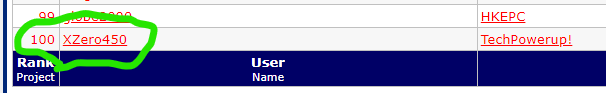

I see you ranked up into the Top 100, 99th place, so well done!

How much of this comes from moving to Linux?

TPU has a good team!

Moving to Linux was a big piece of the changes I've made, especially with ALL my GPUs being Nvidia and the CUDA core released at the end of Sept.

I still have a couple of Win10 systems, for desktop work.

Also, I've moved to Supermicro server-class hardware for the dedicated folders.

The desktop mobos just don't have enough PCIe lanes to work with.

And it was cheaper to buy & operate! Turns out the power efficiency is much better too.

This guy (photo attached) is putting out ~8M PPD. The mobo, CPU & RAM (with a heatsink I replaced) were US$350.

Just checked... he's currently running at 8.19M.

I've attached my Linux FAHclient Install Guide for Ubuntu 20.04. I also run Linux Mint and like the interface much more than Ubuntu Mate.

Bus-wise, I could attach two additional GPUs to the system in the photo, but where would they go? Powering them would be a challenge too.

If anyone has an old system around, they should try out the Linux install.

I did a scatterplot of the September transition to Linux & CUDA from OpenCL. The more powerful cards like the 3070, 2070s & 2060s really benefitted from the switch.

It's a crude plot, but communicates the effects of both CUDA & Linux. You can see the three different atom count levels in the groupings.

To show how simple it is to build a dedicated Linux server-class folder, here is the primary server that I'm working on.

Dual Xeon E5-2630v3, Supermicro X9DR3, 8GB DDR3 1066 RAM (which I upgraded later). Cost was US$170, free shipping.

This is how it looks now... 4 PCIe x16 slots that can be configured to bifurcate!

I have the pieces to complete the fan bar & it'll be working soon.

I should point out that I don't fold on CPUs. A system like this isn't power efficient for CPU folding, but it does have 80 PCIe3 lanes to manage GPUs.
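For anyone who wants to sanity-check the lane math, here is a rough Python sketch. The 4 x16 slots and the 80-lane total come from the posts above; the x8 and x4 split widths are assumed, commonly seen bifurcation modes, and whether this particular board's BIOS offers them is not confirmed here. The function names are made up for illustration.

```python
# Rough lane budget for a bifurcated multi-GPU folding rig.
# From the thread: 4 physical x16 slots, 80 PCIe 3.0 lanes in total.
# ASSUMPTION: slots can be split into equal x8 or x4 links; check the BIOS.

SLOTS = 4
LANES_PER_SLOT = 16
TOTAL_LANES = 80


def gpus_per_slot(link_width: int) -> int:
    """Number of devices one x16 slot can host when split into equal links."""
    if link_width <= 0 or LANES_PER_SLOT % link_width != 0:
        raise ValueError(f"x{link_width} does not divide an x16 slot evenly")
    return LANES_PER_SLOT // link_width


def max_gpus(link_width: int) -> int:
    """Upper bound on GPUs across all slots at the given link width."""
    return SLOTS * gpus_per_slot(link_width)


if __name__ == "__main__":
    slot_lanes = SLOTS * LANES_PER_SLOT  # lanes routed to the x16 slots
    print(f"Slots use {slot_lanes} of {TOTAL_LANES} lanes")
    for width in (16, 8, 4):
        print(f"x{width} links: up to {max_gpus(width)} GPUs")
```

This only counts lanes. Physical space, risers and power delivery are separate limits, and usually the ones that bite first.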