Mussels

Freshwater Moderator

- Joined: Oct 6, 2004
- Messages: 58,411 (7.69/day)
- Location: Oystralia

| System Name | Rainbow Sparkles (Power efficient, <350W gaming load) |
|---|---|
| Processor | Ryzen R7 5800x3D (Undervolted, 4.45GHz all core) |
| Motherboard | Asus x570-F (BIOS Modded) |
| Cooling | Alphacool Apex UV - Alphacool Eisblock XPX Aurora + EK Quantum ARGB 3090 w/ active backplate |
| Memory | 2x32GB DDR4 3600 Corsair Vengeance RGB @3866 C18-22-22-22-42 TRFC704 (1.4V Hynix MJR - SoC 1.15V) |
| Video Card(s) | Galax RTX 3090 SG 24GB: Underclocked to 1700MHz 0.750V (375W down to 250W) |
| Storage | 2TB WD SN850 NVME + 1TB Samsung 970 Pro NVME + 1TB Intel 6000P NVME USB 3.2 |
| Display(s) | Philips 32 32M1N5800A (4k144), LG 32" (4K60), Gigabyte G32QC (2k165), Philips 328m6fjrmb (2K144) |
| Case | Fractal Design R6 |
| Audio Device(s) | Logitech G560, Corsair Void pro RGB, Blue Yeti mic |
| Power Supply | Fractal Ion+ 2 860W (Platinum) (This thing is God-tier. Silent and TINY) |
| Mouse | Logitech G Pro wireless + Steelseries Prisma XL |
| Keyboard | Razer Huntsman TE (Sexy white keycaps) |
| VR HMD | Oculus Rift S + Quest 2 |
| Software | Windows 11 pro x64 (Yes, it's genuinely a good OS) OpenRGB - ditch the branded bloatware! |
| Benchmark Scores | Nyooom. |

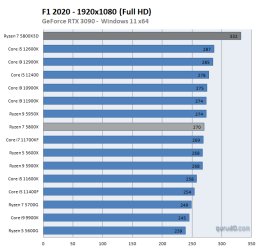

The 12700K has better performance per dollar in productivity and gaming (at both low and high resolution).

Also, keep in mind that turning E-cores off is going to improve gaming performance. Alder Lake was not tested at its full potential for gaming.

The 5800X3D is more expensive than the 12700K, so turning E-cores off will not put the 12700K at any disadvantage anyway.

So you're paying 450 dollars for a 5800X3D when you can get a 12600KF for 280 with 4.4% slower gaming performance at 1080p?

To both of you: pricing varies per country, let alone per state in the USA.

And as always... stop looking at just the CPU prices.

You can pair the 5800X3D with an A320 board, 16GB of DDR4-3200 and a 120mm air cooler, versus Alder Lake needing a top-end DDR5 board and a 360mm AIO.
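To put rough numbers on that, here is a minimal sketch of a whole-platform comparison. The two CPU prices are the ones quoted above; the board, RAM and cooler prices are made-up placeholders (not real quotes from any store), and the helper names `platform_cost` and `cheaper_build` are hypothetical, so swap in whatever your local prices actually are.

```python
def platform_cost(cpu, board, ram, cooler):
    """Sum the parts that differ between two builds (same currency for all)."""
    return cpu + board + ram + cooler


def cheaper_build(cost_a, cost_b):
    """Return 'a', 'b' or 'tie' depending on which total is lower."""
    if cost_a == cost_b:
        return "tie"
    return "a" if cost_a < cost_b else "b"


# CPU prices are the ones quoted in the thread (USD).
CPU_5800X3D = 450
CPU_12600KF = 280

# CPU-only view: how much more the AMD chip costs on its own.
cpu_gap = CPU_5800X3D - CPU_12600KF

# PLACEHOLDER prices for board, RAM and cooler: not real quotes,
# replace them with prices from your own country or store.
amd_total = platform_cost(CPU_5800X3D, board=70, ram=60, cooler=30)
intel_total = platform_cost(CPU_12600KF, board=250, ram=150, cooler=120)

print(f"CPU-only gap: {cpu_gap}")
print(f"AMD build: {amd_total}, Intel build: {intel_total}")
print(f"Cheaper overall: {cheaper_build(amd_total, intel_total)}")
```

The result depends entirely on the placeholder prices you plug in, which is the point: the CPU-only gap says little until the rest of the platform is priced in.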