- Joined: Jun 29, 2019
- Messages: 136 (0.06/day)

| Processor | AMD Ryzen 5 5600X @ 4.8 GHz PBO |
|---|---|
| Motherboard | MSI B550-A PRO |
| Cooling | Hyper 212 Black Edition |
| Memory | 4x8GB Crucial Ballistix 3800 MHz CL16 |
| Video Card(s) | Gigabyte RTX 3070 Gaming OC |
| Storage | 980 PRO 500GB, 860 EVO 500GB, 850 EVO 500GB |
| Display(s) | LG 24GN600-B |
| Case | CM 690 III |
| Audio Device(s) | Creative Sound Blaster Z |
| Power Supply | EVGA SuperNOVA G2 650 W |
| Mouse | Cooler Master MM711 Matte Black |
| Keyboard | Corsair K95 Platinum - Cherry MX Brown |
| Software | Windows 11 |

Hi,

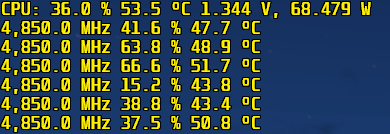

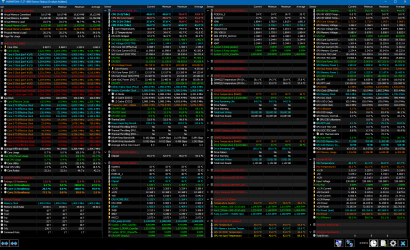

I want to run my 5600X at 4.85 GHz for single-threaded (1T) tasks, and at whatever can be achieved for multi-threaded (nT) loads, with a reasonable long-term voltage that won't noticeably degrade the CPU over the next 2-3 years.

I have PBO2 enabled with curve optimizer. Currently I have the following setup:

- PPT: 125 W

- TDC: 75 A

- EDC: 100 A

- Scalar: 1X

- Curve Optimizer: -20 on cores 1, 2, 4 and 5; -16 on core 3; -12 on core 6

- Fmax override: +200 MHz
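
As a quick sanity check on the frequency target, the sketch below adds the Fmax override to an assumed stock boost ceiling. The 4650 MHz stock figure is an assumption that is not stated in this post, and `boost_ceiling_mhz` is a hypothetical helper; the real boost still depends on temperature, power limits and per-core stability.

```python
# Values taken from the settings list above.
FMAX_OVERRIDE_MHZ = 200
TARGET_1T_MHZ = 4850

# Assumption: stock single-core boost ceiling of the 5600X (not stated in the post).
ASSUMED_STOCK_FMAX_MHZ = 4650


def boost_ceiling_mhz(stock_fmax_mhz: int, override_mhz: int) -> int:
    """Return the highest frequency the boost algorithm is allowed to request."""
    return stock_fmax_mhz + override_mhz


ceiling = boost_ceiling_mhz(ASSUMED_STOCK_FMAX_MHZ, FMAX_OVERRIDE_MHZ)
# True only means the target is within the allowed ceiling, not that it is sustained.
print(ceiling, ceiling >= TARGET_1T_MHZ)
```

Under that assumption, the +200 MHz override is what makes the 4.85 GHz single-threaded target reachable at all.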

Example:

Idle voltage is between 1.269 V and 1.288 V.

It seems stable so far. Cinebench, CPU-Z, AIDA64 and Linpack all pass in short runs (a couple of minutes at most; I refuse to use Prime95).

My question: is this safe for long-term use (for the use case in the screenshot)? Can I optimize it a bit more? I'd appreciate any tips from people who know better.

Another question: cores 3 and 6 have higher (less negative) Curve Optimizer values, which means higher voltage. Does that mean they will degrade faster than the rest? Core 6 is the fastest according to Ryzen Master, followed by core 3. I can only check the individual VIDs, and they seem to be the same. How can that be when cores 3 and 6 have different Curve Optimizer values? (They are unstable otherwise.)
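
To put rough numbers on the per-core difference, here is a minimal sketch. It assumes each Curve Optimizer count is worth roughly 3 to 5 mV, which is a commonly quoted approximation and not something measured on this system. The helper names are hypothetical.

```python
# Curve Optimizer counts per core, taken from the settings list in this post.
CURVE_OPTIMIZER = {1: -20, 2: -20, 3: -16, 4: -20, 5: -20, 6: -12}

# Assumption: one count shifts the voltage curve by roughly 3 to 5 mV.
ASSUMED_MV_PER_COUNT = (3, 5)


def offset_range_mv(counts: int) -> tuple[int, int]:
    """Approximate (min, max) voltage offset in mV for a number of counts."""
    low, high = ASSUMED_MV_PER_COUNT
    a, b = counts * low, counts * high
    return (min(a, b), max(a, b))


def extra_vs_baseline_mv(core: int, baseline_core: int = 1) -> tuple[int, int]:
    """Approximate extra voltage a core requests compared to a baseline core."""
    delta = CURVE_OPTIMIZER[core] - CURVE_OPTIMIZER[baseline_core]
    return offset_range_mv(delta)


print(offset_range_mv(-20))      # offset for the -20 cores
print(extra_vs_baseline_mv(3))   # core 3 vs. a -20 core
print(extra_vs_baseline_mv(6))   # core 6 vs. a -20 core
```

If the assumption holds, core 6 would request only a few tens of millivolts more than the -20 cores at the same point on the curve, and core 3 about half of that.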

Thanks.

He can probably tell you more about longevity.

4600 MHz is OK for me at ~1.325 V, and I think that's good for all loads. It's somewhere around there anyway; it could be 1.35 V.