- Joined: Apr 30, 2011
- Messages: 2,786 (0.54/day)
- Location: Greece

| Component | Details |
|---|---|
| Processor | AMD Ryzen 5 5600@80W |
| Motherboard | MSI B550 Tomahawk |
| Cooling | ZALMAN CNPS9X OPTIMA |
| Memory | 2*8GB PATRIOT PVS416G400C9K@3733MT_C16 |
| Video Card(s) | Sapphire Radeon RX 6750 XT Pulse 12GB |
| Storage | Sandisk SSD 128GB, Kingston A2000 NVMe 1TB, Samsung F1 1TB, WD Black 10TB |
| Display(s) | AOC 27G2U/BK IPS 144Hz |
| Case | SHARKOON M25-W 7.1 BLACK |
| Audio Device(s) | Realtek 7.1 onboard |
| Power Supply | Seasonic Core GC 500W |
| Mouse | Sharkoon SHARK Force Black |
| Keyboard | Trust GXT280 |
| Software | Win 7 Ultimate 64bit/Win 10 pro 64bit/Manjaro Linux |

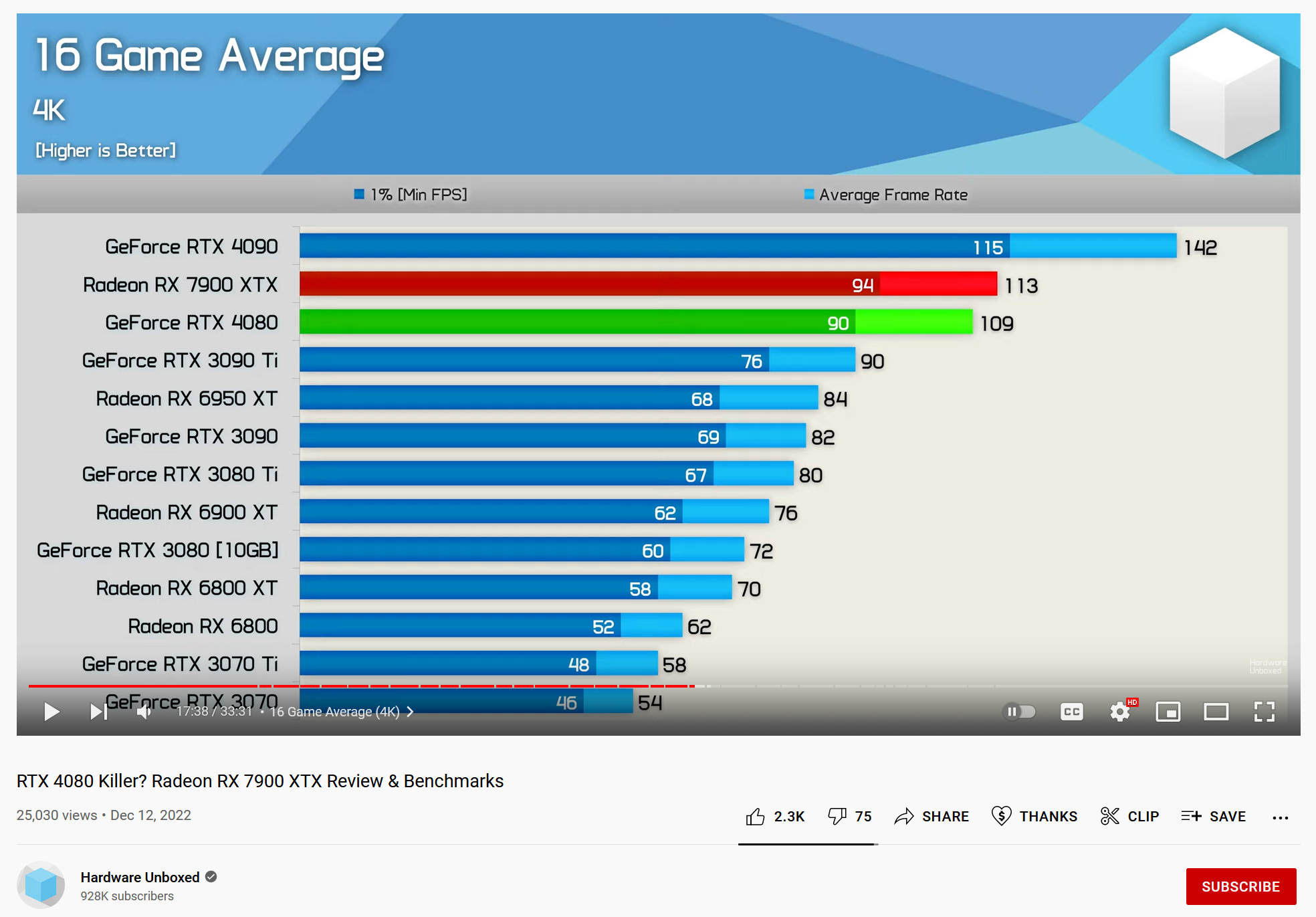

The CPU bottlenecks very hard in some games, so the ~45% uplift vs the 6900 XT at 4K is pessimistic. In any game that utilises the GPU properly the uplift gets closer to 60%, and in some games it even reaches 80% over the 6900 XT (going by other online reviews). So the "up to 54% higher efficiency" claim is true.
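To put rough numbers on that, here is a quick back-of-the-envelope sketch of how a performance uplift translates into a perf-per-watt gain. The power figures are my assumption, not measurements: I am plugging in the official board power ratings (300 W for the 6900 XT, 355 W for the 7900 XTX), and real gaming power draw will differ per title. The function name is just made up for illustration.

```python
def perf_per_watt_gain(perf_uplift, old_watts, new_watts):
    """Relative perf/W gain of the new card over the old one.

    perf_uplift is a fraction, e.g. 0.45 for "45% faster".
    """
    perf_ratio = 1.0 + perf_uplift          # new performance / old performance
    power_ratio = new_watts / old_watts     # new power / old power
    return perf_ratio / power_ratio - 1.0


# Assumption: rated board power, not measured gaming power draw.
OLD_TBP_W = 300.0  # 6900 XT
NEW_TBP_W = 355.0  # 7900 XTX

# The three uplift figures mentioned in the post.
for uplift in (0.45, 0.60, 0.80):
    gain = perf_per_watt_gain(uplift, OLD_TBP_W, NEW_TBP_W)
    print(f"{uplift:.0%} faster -> {gain:.1%} better perf/W")
```

Under those assumed wattages, only the ~80% cases land near the 50%+ efficiency mark, which fits an "up to" figure; the CPU-limited ~45% average comes out much lower.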

I guess I will see them at around 1100 (XT) and 1200 (XTX).
