The Witcher 3: Wild Hunt: FSR 2.1 vs. DLSS 2 vs. DLSS 3 Comparison

- Thread starter maxus24

- Start date

wolf

Better Than Native

- Joined

- May 7, 2007

- Messages

- 8,865 (1.33/day)

| System Name | MightyX |

|---|---|

| Processor | Ryzen 9800X3D |

| Motherboard | Gigabyte B650I AX |

| Cooling | Scythe Fuma 2 |

| Memory | 32GB DDR5 6000 CL30 tuned |

| Video Card(s) | Palit Gamerock RTX 5080 oc |

| Storage | WD Black SN850X 2TB |

| Display(s) | LG 42C2 4K OLED |

| Case | Coolermaster NR200P |

| Audio Device(s) | LG SN5Y / Focal Clear |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Corsair Dark Core RBG Pro SE |

| Keyboard | Glorious GMMK Compact w/pudding |

| VR HMD | Meta Quest 3 |

| Software | case populated with Artic P12's |

| Benchmark Scores | 4k120 OLED Gsync bliss |

DLSS Ultra Performance mode with the 2.5 DLL looks better than I've ever seen that mode look. It's absolutely incredible what they can manage pushing 720p up to 4K, or put another way, a 9x area upscale. Truly impressive. Beyond that, Performance mode is already delivering a very '4K-like' presentation too; keep that up, please.
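As a quick check of the 9x figure, here is a minimal sketch, assuming "720p" means 1280x720 and "4K" means 3840x2160. The helper name `area_scale` is made up for illustration and is not part of any DLSS tooling.

```python
def area_scale(render, output):
    """Return (per-axis scale, pixel-count scale) between two resolutions."""
    render_w, render_h = render
    output_w, output_h = output
    per_axis = output_w / render_w
    pixels = (output_w * output_h) / (render_w * render_h)
    return per_axis, pixels


# Assumed resolutions: 1280x720 internal render, 3840x2160 output.
axis, area = area_scale((1280, 720), (3840, 2160))
print(f"{axis:.0f}x per axis, {area:.0f}x the pixel count")
```

Each axis is scaled 3x, so the upscaler has to produce 9 output pixels for every rendered pixel.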

- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> A bit confused on why FSR 2.1 looks so bad. This might be the worst I've ever seen it look in any game on this site. Quality looks as bad as Performance does in any other game; it's just a smeary mess. It's even worse than FXAA. I actually have a hard time believing FSR 2.1 has any sort of sharpening filter applied to it in its current state. It's also not selectable when you choose FSR, so you can't adjust the sharpening for it; however, I noticed in the video that you can for DLSS. I don't know what they did to make it so bad, so I'll assume that they didn't implement FSR 2.1 correctly.
>
> As for DLSS 3.0 Frame Generation... I... kind of have an issue calling the FPS it puts out frames per second. Sure, it throws out frames, but they're... only kind of real? If I understand frame generation correctly, in simple terms they're predictions of what the frames are going to be. I just don't see the usefulness of simply jamming predictive frames into gameplay besides saying "Look, higher number better!" in benchmarks. It's a technology that would actually get worse the lower your base FPS is, because there won't be enough good frames for the predictive frames to be obscured. I expect that this may actually make the experience worse for people who actually need to use DLSS. But hey, maybe I'm wrong and it's amazing. I don't expect I'll know for another few years when the next-gen GPUs come out.

So far it's actually pretty amazing. I've tried it in Flight Simulator, Portal and Witcher 3, and it works really well. The fake frames are indistinguishable from the real ones. The control response time stays about the same as if you were running 50 fps, since the fake frames aren't taking in input. So you're not getting the reduced latency of high fps, but in a game like Witcher/Portal/etc. you go from 50 fps to 80-90 with that one setting, and it evens out some of the chop.

- Joined

- Apr 30, 2008

- Messages

- 4,939 (0.78/day)

- Location

- Multidimensional

| System Name | Intel NUC 12 Extreme |

|---|---|

| Processor | Intel Core i7 12700 12 Core 20 Thread CPU |

| Motherboard | Intel NUC Module Motherboard |

| Cooling | NUC Blower Cooler + 3 x 92mm Fans |

| Memory | 64GB RAM Corsair 3200Mhz CL22 |

| Video Card(s) | PowerColor RX 9070 16GB Reaper |

| Storage | Silicon 500GB M.2 + WD 2TB External HDD |

| Display(s) | Sony 4K Bravia X85J 43Inch TV 120Hz |

| Case | Intel NUC 12 Extreme Mini ITX Case |

| Audio Device(s) | Realtek Audio + Dolby Atmos |

| Power Supply | SFX FSP 650 Gold Rated PSU |

| Mouse | Logitech G203 Lightsync Mouse |

| Keyboard | Red Dragon K552W RGB White KB. |

| VR HMD | ( ◔ ʖ̯ ◔ ) |

| Software | Windows 10 Home 64bit |

| Benchmark Scores | None. I also own a Apple Macbook Air M2 |

I can't even test the game long enough cause it keeps crashing to desktop lol, tested both on my RTX 3060 & newly acquired RX 7900 XT DX12 mode, and like yall have said, it stutters here & there, this update is broken.

> So far it's actually pretty amazing. I've tried it in Flight Simulator, Portal and Witcher 3, and it works really well. The fake frames are indistinguishable from the real ones. The control response time stays about the same as if you were running 50 fps, since the fake frames aren't taking in input. So you're not getting the reduced latency of high fps, but in a game like Witcher/Portal/etc. you go from 50 fps to 80-90 with that one setting, and it evens out some of the chop.

They are not indistinguishable, but our monkey brains are too slow to notice them. If you look at them frame by frame, you can see many artefacts. As long as those artefacts only show up in every other frame, and the rest of the frame is very similar to the frames before and after the FG frame, our brains won't notice them.

But if they happen repeatedly, you can see those errors. That is the problem DLSS/FSR have sometimes.

At the release of the 4090, the press versions with DLSS-FG had some of those repeated artefact issues with overlay icons, like the quest marker in CP2077.

So far I have only played Darktide with DLSS-FG, and I didn't notice any artefacts while gaming. I DID notice the input-lag difference, but only in direct on/off comparisons.

After playing Darktide with FG for a few hours, I forgot the difference.

- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> So, the developer pushes a garbage patch that miraculously makes AMD GPUs look bad (pun intended) and instead of calling the devs out,
>
> At this point, I wouldn't blame AMD for exiting the GPU market and leaving all of us in dear leader Jensen's hands. (quote edited)

It's more that it makes anything that isn't a 4xxx series look like trash.


- Joined

- Dec 6, 2022

- Messages

- 703 (0.73/day)

- Location

- NYC

| System Name | GameStation |

|---|---|

| Processor | AMD R5 5600X |

| Motherboard | Gigabyte B550 |

| Cooling | Artic Freezer II 120 |

| Memory | 16 GB |

| Video Card(s) | Sapphire Pulse 7900 XTX |

| Storage | 2 TB SSD |

| Case | Cooler Master Elite 120 |

> It's more that it makes anything that isn't a 4xxx series look like trash.

So you reaffirm my post: it's the devs' fault, not AMD's.

Frame generation doesn't improve latency, but it also doesn't make it worse. The generated frame uses previous-frame movement and data from DLSS to estimate what the next frame would be. I think right now, in the best conditions, you would have 1 normal frame, 1 generated frame, 1 normal, 1 generated, etc. I don't think there are cases where there is more than 1 generated frame between 2 normal frames.

If you could look at every frame, you could clearly identify which one is real and which one is not. But in motion, not really; it smooths the stream of images, making it more fluid. The best range for the tech is probably what is achieved in Witcher 3: between 30 and 60 normal frames to get between 60 and 120 fps.

It's probably more useful on fast screens like OLED, where sub-40 fps is really atrocious.


- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> So you reaffirm my post: it's the devs' fault, not AMD's.

I wouldn't be surprised if Nvidia paid them to optimize for the 4xxx series on purpose. There's no reason why FSR looks so bad, and no reason why anyone without a 4 series card gets an absolute mess of a game while the 4090 and 4080 run at a smooth 100-130 fps with the same settings.

Right now they need the money, and CDPR has been pretty close with Nvidia PR for a while. Not many other games out there with such bouncy hair; Nvidia is making those Pantene Pro-V commercials come to life.

It's pretty obvious optimizing for anything other than Lovelace wasn't a priority.


D

Deleted member 177333

Guest

> I wouldn't be surprised if Nvidia paid them to optimize for the 4xxx series on purpose. There's no reason why FSR looks so bad, and no reason why anyone without a 4 series card gets an absolute mess of a game while the 4090 and 4080 run at a smooth 100-130 fps with the same settings.
>
> Right now they need the money, and CDPR has been pretty close with Nvidia PR for a while.

+1 I used to really like CD Projekt Red. Not anymore. When this patch launched, their moderation (see: censorship) squad was running all over Steam zapping threads and posts (not to mention banning people) due to the high number of legitimate complaints and negativity.

- Joined

- Dec 26, 2006

- Messages

- 4,309 (0.64/day)

- Location

- Northern Ontario Canada

| Processor | Ryzen 5700x |

|---|---|

| Motherboard | Gigabyte X570S Aero G R1.1 Bios F7g |

| Cooling | Noctua NH-C12P SE14 w/ NF-A15 HS-PWM Fan 1500rpm |

| Memory | Micron DDR4-3200 2x32GB D.S. D.R. (CT2K32G4DFD832A) |

| Video Card(s) | AMD RX 6800 - Asus Tuf |

| Storage | Kingston KC3000 1TB & 2TB & 4TB Corsair MP600 Pro LPX |

| Display(s) | LG 27UL550-W (27" 4k) |

| Case | Be Quiet Pure Base 600 (no window) |

| Audio Device(s) | Realtek ALC1220-VB |

| Power Supply | SuperFlower Leadex V Gold Pro 850W ATX Ver2.52 |

| Mouse | Mionix Naos Pro |

| Keyboard | Corsair Strafe with browns |

| Software | W10 22H2 Pro x64 |

“The Witcher 3: Wild Hunt is a very CPU intensive game in DirectX 12”

Is there an option to run the game in DX11?

- Joined

- Apr 30, 2011

- Messages

- 2,786 (0.54/day)

- Location

- Greece

| Processor | AMD Ryzen 5 5600@80W |

|---|---|

| Motherboard | MSI B550 Tomahawk |

| Cooling | ZALMAN CNPS9X OPTIMA |

| Memory | 2*8GB PATRIOT PVS416G400C9K@3733MT_C16 |

| Video Card(s) | Sapphire Radeon RX 6750 XT Pulse 12GB |

| Storage | Sandisk SSD 128GB, Kingston A2000 NVMe 1TB, Samsung F1 1TB, WD Black 10TB |

| Display(s) | AOC 27G2U/BK IPS 144Hz |

| Case | SHARKOON M25-W 7.1 BLACK |

| Audio Device(s) | Realtek 7.1 onboard |

| Power Supply | Seasonic Core GC 500W |

| Mouse | Sharkoon SHARK Force Black |

| Keyboard | Trust GXT280 |

| Software | Win 7 Ultimate 64bit/Win 10 pro 64bit/Manjaro Linux |

> “The Witcher 3: Wild Hunt is a very CPU intensive game in DirectX 12”
>
> Is there an option to run the game in DX11?

Just choose the non-DX12 exe in the bin\x64 folder (X:\Program Files\The Witcher 3 Wild Hunt GOTY\bin\x64). That solves all the issues for anyone not interested in RT.

> Frame generation doesn't improve latency, but it also doesn't make it worse. The generated frame uses previous-frame movement and data from DLSS to estimate what the next frame would be. I think right now, in the best conditions, you would have 1 normal frame, 1 generated frame, 1 normal, 1 generated, etc. I don't think there are cases where there is more than 1 generated frame between 2 normal frames.
>
> If you could look at every frame, you could clearly identify which one is real and which one is not. But in motion, not really; it smooths the stream of images, making it more fluid. The best range for the tech is probably what is achieved in Witcher 3: between 30 and 60 normal frames to get between 60 and 120 fps.
>
> It's probably more useful on fast screens like OLED, where sub-40 fps is really atrocious.

That is not how it works. It estimates frames in between already-calculated frames. That means it always increases latency, by around (1/fps)/2. Tests made by different parties confirm this.

In a 100 fps game, your theoretical minimum latency is around 10 ms. When using DLSS 3, your fps would be 200, but the theoretical minimum latency would be around 15 ms. The latency to see the next true (not AI-generated) frame is 20 ms, double that of non-DLSS 3.

Does the 5ms matter in this example? Maybe not for most.
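The "(1/fps)/2" rule of thumb above can be worked through in a few lines. This is only a sketch of that back-of-the-envelope estimate, not a measurement or anything from Nvidia; the function name `fg_latency_estimate` is hypothetical, and generation overhead is assumed to be zero.

```python
def fg_latency_estimate(base_fps):
    """Apply the rule of thumb: frame generation adds about half a base frame time.

    Returns (base_frame_time_ms, added_ms, estimated_total_ms).
    Assumes zero overhead for generating the in-between frame.
    """
    base_ms = 1000.0 / base_fps   # time between real frames
    added_ms = base_ms / 2        # the (1/fps)/2 term, in milliseconds
    return base_ms, added_ms, base_ms + added_ms


for fps in (100, 50):
    base, added, total = fg_latency_estimate(fps)
    print(f"{fps} fps base: {base:.0f} ms -> about {total:.0f} ms (+{added:.0f} ms)")
```

At 100 fps this gives the 10 ms and 15 ms figures from the post; at a 50 fps base the same rule adds about 10 ms, which is why the penalty grows as the base frame rate drops.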

- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> That is not how it works. It estimates frames in between already-calculated frames. That means it always increases latency, by around (1/fps)/2. Tests made by different parties confirm this.
>
> In a 100 fps game, your theoretical minimum latency is around 10 ms. When using DLSS 3, your fps would be 200, but the theoretical minimum latency would be around 15 ms. The latency to see the next true (not AI-generated) frame is 20 ms, double that of non-DLSS 3.
>
> Does the 5ms matter in this example? Maybe not for most.

Not following - your non-AI-generated frames are still at 100 fps, so why would your latency to see the next non-AI frame be 20 ms (50 fps)?

It would do this if, say, you were comparing native 100 fps at med/low settings to DLSS 3 100 fps at RT ultra; then yes, your DLSS 3 latency would be as if you were running 50 fps. But 100 fps native to 200 fps DLSS 3 should be roughly the same latency, barring any excessive overhead.

I can see what you're saying in the case where you are forcing 0 pre-calculated frames, in which case you will be adding a frame of pre-baking plus the fake frame. But afaik most default driver settings pre-bake a frame anyway, even in low latency mode?

For instance, here you can see that native non-Reflex is 39 ms; DLSS 2 actually increases frames, so that does decrease latency; DLSS 3 adds a fake frame, so that does nothing for latency but also doesn't materially increase it.


> How much time does it really save to copy and paste the vast majority of the conclusions in every one of these articles?

I feel the same sometimes reading the end of these articles lmao.

> Not following - your non-AI-generated frames are still at 100 fps, so why would your latency to see the next non-AI frame be 20 ms (50 fps)?
>
> It would do this if, say, you were comparing native 100 fps at med/low settings to DLSS 3 100 fps at RT ultra; then yes, your DLSS 3 latency would be as if you were running 50 fps. But 100 fps native to 200 fps DLSS 3 should be roughly the same latency, barring any excessive overhead.
>
> I can see what you're saying in the case where you are forcing 0 pre-calculated frames, in which case you will be adding a frame of pre-baking plus the fake frame. But afaik most default driver settings pre-bake a frame anyway, even in low latency mode?
>
> View attachment 275242
>
> For instance, here you can see that native non-Reflex is 39 ms; DLSS 2 actually increases frames, so that does decrease latency; DLSS 3 adds a fake frame, so that does nothing for latency but also doesn't materially increase it.

You need to go watch the Hardware Unboxed video on it. It's more than just latency, and it depends on the game. I myself don't see the need for it.

> Not following - your non-AI-generated frames are still at 100 fps, so why would your latency to see the next non-AI frame be 20 ms (50 fps)?
>
> It would do this if, say, you were comparing native 100 fps at med/low settings to DLSS 3 100 fps at RT ultra; then yes, your DLSS 3 latency would be as if you were running 50 fps. But 100 fps native to 200 fps DLSS 3 should be roughly the same latency, barring any excessive overhead.
>
> I can see what you're saying in the case where you are forcing 0 pre-calculated frames, in which case you will be adding a frame of pre-baking plus the fake frame. But afaik most default driver settings pre-bake a frame anyway, even in low latency mode?
>
> View attachment 275242
>
> For instance, here you can see that native non-Reflex is 39 ms; DLSS 2 actually increases frames, so that does decrease latency; DLSS 3 adds a fake frame, so that does nothing for latency but also doesn't materially increase it.

In the picture you posted, DLSS 3 has 15 ms worse latency compared to not using it.

Reflex is a completely different tech, and can be used independently of DLSS 3.

The delay comes from having to wait for the t+2 frame to be ready before the t+1 frame can be 'generated' and then displayed. Without DLSS 3, you can display a frame immediately after it has been calculated. There is no need to wait for the AI generation, then display the AI frame, then wait a bit more (because of frame pacing), and then finally display the true frame.
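The ordering described above can be laid out as a small timeline. This is a simplified sketch, not how the driver actually schedules frames: it assumes a constant render time, a generation cost you pass in (`gen_cost_ms`, zero by default), and perfectly even pacing. `display_times` is a made-up helper, and its frames are numbered 1, 2, 3 with the generated ones at .5 rather than with the t+1/t+2 labels used in the post.

```python
def display_times(base_fps, n_frames, frame_gen=False, gen_cost_ms=0.0):
    """Return (label, display_time_ms) pairs under a simple interpolation model."""
    frame_ms = 1000.0 / base_fps
    shown = []
    for k in range(1, n_frames + 1):
        ready = k * frame_ms  # real frame k finishes rendering here
        if not frame_gen:
            # No frame generation: show the frame as soon as it is done.
            shown.append((f"real {k}", ready))
            continue
        if k > 1:
            # The in-between frame needs real frame k as input,
            # so it cannot be shown before that frame is ready.
            shown.append((f"generated {k - 1}.5", ready + gen_cost_ms))
        # The real frame is held back half an interval for even pacing.
        shown.append((f"real {k}", ready + gen_cost_ms + frame_ms / 2))
    return shown


for label, t in display_times(100, 3, frame_gen=True):
    print(f"{t:5.1f} ms  {label}")
```

In this model every real frame reaches the screen half a base frame time later than it would without frame generation, plus whatever the generation step costs.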

- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> In the picture you posted, DLSS 3 has 15 ms worse latency compared to not using it.
>
> Reflex is a completely different tech, and can be used independently of DLSS 3.
>
> The delay comes from having to wait for the t+2 frame to be ready before the t+1 frame can be 'generated' and then displayed. Without DLSS 3, you can display a frame immediately after it has been calculated. There is no need to wait for the AI generation, then display the AI frame, then wait a bit more (because of frame pacing), and then finally display the true frame.

Ah I see what you're saying now - thanks, that's helpful.

End result vs. native, it's about the same, but DLSS 3 is also using DLSS 2 upscaling and eating up basically all of the latency benefit of upscaling to generate that extra frame (in that instance).

> Ah I see what you're saying now - thanks, that's helpful.

Yeah, there is a reason Nvidia does not want to run frame generation without other tech in place (DLSS 2 & Reflex).

- Joined

- Oct 19, 2022

- Messages

- 50 (0.05/day)

I am excited to get home and try FSR 2.1 on my 1080Ti.

I will let you guys know how it went


- Joined

- Sep 10, 2007

- Messages

- 249 (0.04/day)

- Location

- Zagreb, Croatia

| System Name | My main PC - C2D |

|---|---|

| Processor | Intel Core 2 Duo E4400 @ 320x10 (3200MHz) w/ Scythe Ninja rev.B + 120mm fan |

| Motherboard | Gigabyte GA-P35-DS3R (Intel P35 + ICH9R chipset, socket 775) |

| Cooling | Scythe Ninja rev.B + 120mm fan | 250mm case fan on side | 120mm PSU fan |

| Memory | 4x 1GB Kingmax MARS DDR2 800 CL5 |

| Video Card(s) | Sapphire ATi Radeon HD4890 |

| Storage | Seagate Barracuda 7200.11 250GB SATAII, 16MB cache, 7200 rpm |

| Display(s) | Samsung SyncMaster 757DFX, 17“ CRT, max: 1920x1440 @64Hz |

| Case | Aplus CS-188AF case with 250mm side fan |

| Audio Device(s) | Realtek ALC889A onboard 7.1, with Logitech X-540 5.1 speakers |

| Power Supply | Chieftec 450W (GPS450AA-101A) /w 120mm fan |

| Software | Windows XP Professional SP3 32bit / Windows 7 Beta1 64bit (dual boot) |

| Benchmark Scores | none |

I really just want to hear what the actual frame increase is. Saying "double" means nothing: if it's 100 to 200 FPS, I don't care, as 100 is already enough; if it's 10 to 20, I don't care because it's still too low to be playable. So any FSR/DLSS article should have actual frame numbers in it. And even with the comments posted so far, I still need to go and try it myself to get any useful information. At least I know that DX11 is probably the way to go for this update; thanks for that comment.

- Joined

- Nov 13, 2007

- Messages

- 11,377 (1.76/day)

- Location

- Austin Texas

| System Name | Arrow in the Knee |

|---|---|

| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |

| Motherboard | ASUS PRIME Z890-M |

| Cooling | Thermalright Phantom Spirit EVO (Intake) |

| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |

| Video Card(s) | RTX 4090 FE |

| Storage | 2TB WD SN850, 4TB WD SN850X |

| Display(s) | Alienware 32" 4k 240hz OLED |

| Case | Jonsbo Z20 |

| Audio Device(s) | Yes |

| Power Supply | Corsair SF750 |

| Mouse | DeathadderV2 X Hyperspeed |

| Keyboard | Aula F75 cream switches |

| Software | Windows 11 |

| Benchmark Scores | They're pretty good, nothing crazy. |

> I really just want to hear what the actual frame increase is. Saying "double" means nothing: if it's 100 to 200 FPS, I don't care, as 100 is already enough; if it's 10 to 20, I don't care because it's still too low to be playable. So any FSR/DLSS article should have actual frame numbers in it. And even with the comments posted so far, I still need to go and try it myself to get any useful information. At least I know that DX11 is probably the way to go for this update; thanks for that comment.

What if it's 30 to 60? Or 45 to 90?

For me, Witcher 3 goes from 55 to 110, and Portal RTX from about 45 to 90 (native to 3.0), roughly speaking.

It's definitely cool tech -- I'm actually excited about AMD's implementation since it can really breathe new life into some of the older gen cards. 3080/6900xt running at 40FPS? now it's 80...

You won't need it for games like apex or mw2/ multiplayer games that run at huge FPS anyways - but for Plague Tale/Starfield etc. I think it will be useful.

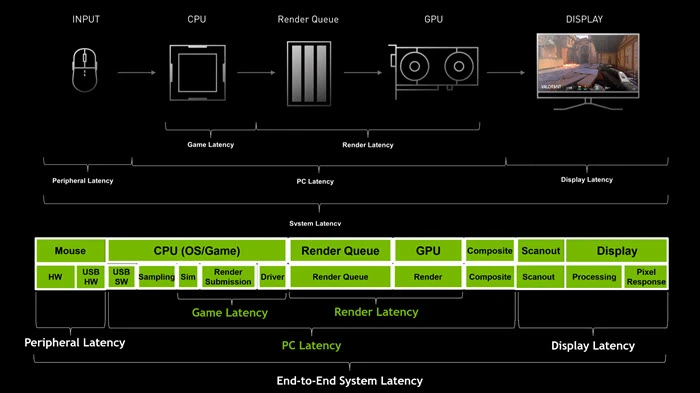

Nvidia states that DLSS 3 Frame Generation only affects PC latency, not peripheral or display latency.

I have seen reports that DLSS uses 2 frames to generate a third, but I have seen no official word yet on where that generated frame would go (in between or after). If you have an official source on it, I would like to read it.

Frame generation is probably only useful when the CPU is the bottleneck (game latency). But that is still a useful thing.


> I have seen reports that DLSS uses 2 frames to generate a third, but I have seen no official word yet on where that generated frame would go (in between or after). If you have an official source on it, I would like to read it.

The quality would be complete shit if it predicted a future frame, so it generates a frame in between. I do recall that info being in some slide deck as well. The latency hit also points to this.

- Joined

- Dec 10, 2022

- Messages

- 487 (0.51/day)

| System Name | The Phantom in the Black Tower |

|---|---|

| Processor | AMD Ryzen 7 5800X3D |

| Motherboard | ASRock X570 Pro4 AM4 |

| Cooling | AMD Wraith Prism, 5 x Cooler Master Sickleflow 120mm |

| Memory | 64GB Team Vulcan DDR4-3600 CL18 (4×16GB) |

| Video Card(s) | ASRock Radeon RX 7900 XTX Phantom Gaming OC 24GB |

| Storage | WDS500G3X0E (OS), WDS100T2B0C, TM8FP6002T0C101 (x2) and ~40TB of total HDD space |

| Display(s) | Haier 55E5500U 55" 2160p60Hz |

| Case | Ultra U12-40670 Super Tower |

| Audio Device(s) | Logitech Z200 |

| Power Supply | EVGA 1000 G2 Supernova 1kW 80+Gold-Certified |

| Mouse | Logitech MK320 |

| Keyboard | Logitech MK320 |

| VR HMD | None |

| Software | Windows 10 Professional |

| Benchmark Scores | Fire Strike Ultra: 19484 Time Spy Extreme: 11006 Port Royal: 16545 SuperPosition 4K Optimised: 23439 |

Moving the slider from left to right showed me that while DLSS and FSR do look ever so slightly different (not really seeing that one is better than the other), if only one of them was on my screen, I would be just fine either way. I can't imagine that most people wouldn't have the same reaction if they're really being honest.

It's like having a new TV. If it's the only one in the room, it looks great, no matter how superior another model may be.


- Joined

- Jan 17, 2018

- Messages

- 500 (0.18/day)

| Processor | Ryzen 7 5800X3D |

|---|---|

| Motherboard | MSI B550 Tomahawk |

| Cooling | Noctua U12S |

| Memory | 32GB @ 3600 CL18 |

| Video Card(s) | AMD 6800XT |

| Storage | WD Black SN850(1TB), WD Black NVMe 2018(500GB), WD Blue SATA(2TB) |

| Display(s) | Samsung Odyssey G9 |

| Case | Be Quiet! Silent Base 802 |

| Power Supply | Seasonic PRIME-GX-1000 |

> Moving the slider from left to right showed me that while DLSS and FSR do look ever so slightly different (not really seeing that one is better than the other), if only one of them was on my screen, I would be just fine either way. I can't imagine that most people wouldn't have the same reaction if they're really being honest.
>
> It's like having a new TV. If it's the only one in the room, it looks great, no matter how superior another model may be.

The chosen scene wasn't the best one to show the quality differences between TAA, DLSS & FSR. As someone who can test FSR, the quality drop is extremely noticeable. Texture quality with FSR goes down to well below even the 'low' texture preset. These two screenshots are comparisons of TAA & FSR at the exact same settings.

The Witcher TAA/FSR Comparison