
Intel Compares Arc A750 with RTX 3060 With Latest Driver Update

- Thread starter GFreeman


If you focus only on rasterization, you are right, but the current video card takes over a lot of the processor's tasks, and here nVidia shines. Beyond games, nVidia offers a package of good to very good performance, while the competition's performance is inconsistent, ranging from very good to disastrous. Even in games, with RT enabled, the competition is at least a generation behind.

Intel should have learned from AMD that better value does not guarantee sales...Nvidia's mindshare cannot be broken with a better product because the vast majority of consumers don't make purchasing decisions based on rational, objective, empirical data...they make those decisions based on their feelings, emotions, and perceptions regardless of how divorced they are from reality.

- Joined

- May 3, 2018

- Messages

- 2,351 (1.07/day)

I would still get the A770, but it's good to see Intel making something out of Alchemist and giving us some good value. I hope Battlemage is the real deal, though, and that by then they have a good driver team. Launching on time will also be a must if they are to succeed.

- Joined

- Aug 26, 2021

- Messages

- 290 (0.29/day)

It's impressive seeing how much performance they are getting out of these cards compared to release.

- Joined

- Feb 18, 2013

- Messages

- 2,180 (0.53/day)

- Location

- Deez Nutz, bozo!

| System Name | Rainbow Puke Machine :D |
|---|---|
| Processor | Intel Core i5-11400 (MCE enabled, PL removed) |
| Motherboard | ASUS STRIX B560-G GAMING WIFI mATX |
| Cooling | Corsair H60i RGB PRO XT AIO + HD120 RGB (x3) + SP120 RGB PRO (x3) + Commander PRO |
| Memory | Corsair Vengeance RGB RT 2 x 8GB 3200MHz DDR4 C16 |
| Video Card(s) | Zotac RTX2060 Twin Fan 6GB GDDR6 (Stock) |
| Storage | Corsair MP600 PRO 1TB M.2 PCIe Gen4 x4 SSD |
| Display(s) | LG 29WK600-W Ultrawide 1080p IPS Monitor (primary display) |
| Case | Corsair iCUE 220T RGB Airflow (White) w/Lighting Node CORE + Lighting Node PRO RGB LED Strips (x4). |
| Audio Device(s) | ASUS ROG Supreme FX S1220A w/ Savitech SV3H712 AMP + Sonic Studio 3 suite |
| Power Supply | Corsair RM750x 80 Plus Gold Fully Modular |
| Mouse | Corsair M65 RGB FPS Gaming (White) |
| Keyboard | Corsair K60 PRO RGB Mechanical w/ Cherry VIOLA Switches |
| Software | Windows 11 Professional x64 (Update 23H2) |

How many f**ks do I have for games with RT? None. If a competitor GPU has better performance/$ and is cheaper than what NoVideo is offering, that's a win for consumers. Besides, NoVideo is harping so hard on AI accelerators and AI BS that they don't mind losing common end-users and consumers like us. AMD is one brand I will be keeping an eye on, but I will never buy from them because of how crappy their drivers are. No amount of price cuts will make me buy their products. As for Intel, if they're willing to sell the Arc LE cards and not some crappy AIB cards, then I might consider getting one just to spite both Team Green and Team Red.

- Joined

- Sep 17, 2014

- Messages

- 21,042 (5.96/day)

- Location

- The Washing Machine

| Processor | i7 8700k 4.6Ghz @ 1.24V |
|---|---|
| Motherboard | AsRock Fatal1ty K6 Z370 |
| Cooling | beQuiet! Dark Rock Pro 3 |
| Memory | 16GB Corsair Vengeance LPX 3200/C16 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Samsung 850 EVO 1TB + Samsung 830 256GB + Crucial BX100 250GB + Toshiba 1TB HDD |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Fractal Design Define R5 |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | XTRFY M42 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| Software | W10 x64 |

Except today's Nvidia is a different beast. Ampere is absolute crap. Ada is overpriced. Second-hand, the majority, especially midrange, isn't really what it used to be. And its RT advantage won't amount to much either...

Price competitiveness with pretty much feature parity (where it matters, FSR for example) can definitely work. AMD used to pair feature deficits with lower prices, and for some buyers it still does, if they value RT highly.


"Ampere is absolute crap." Change dealer.

- Joined

- Jun 27, 2019

- Messages

- 1,884 (1.06/day)

- Location

- Hungary

| System Name | I don't name my systems. |
|---|---|
| Processor | i5-12600KF 'stock power limits/-115mV undervolt+contact frame' |
| Motherboard | Asus Prime B660-PLUS D4 |
| Cooling | ID-Cooling SE 224 XT ARGB V3 'CPU', 4x Be Quiet! Light Wings + 2x Arctic P12 black case fans. |
| Memory | 4x8GB G.SKILL Ripjaws V DDR4 3200MHz |
| Video Card(s) | Asus TuF V2 RTX 3060 Ti @1920 MHz Core/950mV Undervolt |
| Storage | 4 TB WD Red, 1 TB Silicon Power A55 Sata, 1 TB Kingston A2000 NVMe, 256 GB Adata Spectrix s40g NVMe |
| Display(s) | 29" 2560x1080 75 Hz / LG 29WK600-W |
| Case | Be Quiet! Pure Base 500 FX Black |
| Audio Device(s) | Onboard + Hama uRage SoundZ 900+USB DAC |
| Power Supply | Seasonic CORE GM 500W 80+ Gold |
| Mouse | Canyon Puncher GM-20 |
| Keyboard | SPC Gear GK630K Tournament 'Kailh Brown' |
| Software | Windows 10 Pro |

If they keep this up, I might be open to the idea of an Intel card in a few years if the price is right.

My biggest issue with first-gen Arc was how badly some older games ran on the release/early drivers, and since I'm a variety gamer who plays both older and new games, that matters to me.

As for the bad AMD drivers mentioned before, well, I dunno about that, but when I owned an RX 570 for almost 3 years it was totally fine from a gaming/casual everyday use perspective.


I feel the driver should have been in this state at launch, not 6 months after launch, because the impression of poor drivers will always linger. And considering that AMD has been cutting GPU prices, the attractiveness of a last-gen Intel GPU is not going to improve dramatically; you can see that trend in Intel itself aggressively cutting prices. In my opinion, comparing the A750 against one of the weaker cards from Nvidia's last-gen lineup is not a compliment. The worst part is that current-gen games eat VRAM like nobody's business, and the 8GB of VRAM on the A750 is going to be its Achilles' heel against the 12GB of the RTX 3060. There is no way around the VRAM limitation unless game makers start optimising their games properly.

- Joined

- Aug 19, 2014

- Messages

- 1,451 (0.41/day)

Yeah, it is called optimization.


cerulliber

New Member

- Joined

- Jul 9, 2021

- Messages

- 24 (0.02/day)

Wondering which card the A770 16 GB could compete with. Perhaps the RTX 3060 12 GB?

At this point gamers have to download memory leak patches from Nexus Mods (Hogwarts L being an example).

A new Intel model would be interesting, but driver availability and compatibility with AM4 CPUs, given their market share in the gaming segment, would be decisive for a success story. Period.

The same goes for collaboration with the biggest game studios on new games.


- Joined

- Feb 20, 2019

- Messages

- 7,426 (3.88/day)

| System Name | Bragging Rights |
|---|---|
| Processor | Atom Z3735F 1.33GHz |
| Motherboard | It has no markings but it's green |
| Cooling | No, it's a 2.2W processor |
| Memory | 2GB DDR3L-1333 |
| Video Card(s) | Gen7 Intel HD (4EU @ 311MHz) |
| Storage | 32GB eMMC and 128GB Sandisk Extreme U3 |
| Display(s) | 10" IPS 1280x800 60Hz |
| Case | Veddha T2 |
| Audio Device(s) | Apparently, yes |
| Power Supply | Samsung 18W 5V fast-charger |
| Mouse | MX Anywhere 2 |
| Keyboard | Logitech MX Keys (not Cherry MX at all) |
| VR HMD | Samsung Odyssey, not that I'd plug it into this though.... |
| Software | W10 21H1, barely |
| Benchmark Scores | I once clocked a Celeron-300A to 564MHz on an Abit BE6 and it scored over 9000. |

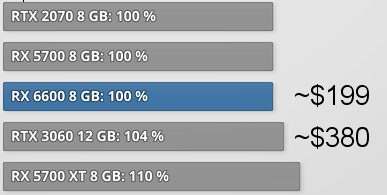

Beating the 3060 isn't exactly difficult; it's abysmal value. The RX 6600, which is half the price of the 3060, is a close match for it.

Clearly, Intel are tone-deaf, because AMD have been beating Nvidia on price for several generations and that hasn't really won them market share.

We desperately need the competition, but what they're doing isn't really working yet. Matching an existing competitor like AMD and doing so with some serious caveats still isn't enough to change the tide.


- Joined

- Nov 11, 2013

- Messages

- 41 (0.01/day)

- Location

- Canada

| System Name | Lovelace |
|---|---|
| Processor | 13th Gen Intel Core i7-13700K |
| Motherboard | ASUS ROG STRIX Z790-E GAMING WiFi @ BIOS 2102 |
| Cooling | Noctua NH-D15 chromax.black w/ 5 fans |
| Memory | 32GB G.Skill Trident Z5 RGB Series RAM @ 7200 MHz |
| Video Card(s) | ASUS TUF Gaming Radeon™ RX 7900 XTX OC Edition |
| Storage | WD_BLACK SN850 1 TB, SN850X 2 TB, SN850X 4 TB |
| Display(s) | TCL 55R617 (2018) |
| Case | Fractal Design Torrent (White) |
| Audio Device(s) | Schiit Magni Heretic & Modi+ / Philips Fidelio X2HR + Sennheiser HD 600 & HD 650 |
| Power Supply | Corsair RM850x Power Supply (2021) |
| Mouse | Razer Quartz Viper Ultimate |
| Keyboard | Razer Quartz Blackwidow V3 |
| Software | Windows 11 Professional 64-bit |

AMD’s drivers aren’t crappy. They have been improving their drivers for a decent while now. Aside from a Windows quirk where window shadows glitch out, and Windows trying to activate HDR when it’s disabled (which it did on my 1080 too, stupid OS), AMD’s drivers have been rock solid on my 6700 XT. I imagine they’re better on the 7000 series too.

There is no way to accurately judge their drivers unless you’ve used them recently, and AMD, Intel, and NVIDIA all have driver issues, it just depends on the scope of what they are.

- Joined

- Sep 17, 2014

- Messages

- 21,042 (5.96/day)

- Location

- The Washing Machine

| Processor | i7 8700k 4.6Ghz @ 1.24V |
|---|---|
| Motherboard | AsRock Fatal1ty K6 Z370 |
| Cooling | beQuiet! Dark Rock Pro 3 |
| Memory | 16GB Corsair Vengeance LPX 3200/C16 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Samsung 850 EVO 1TB + Samsung 830 256GB + Crucial BX100 250GB + Toshiba 1TB HDD |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Fractal Design Define R5 |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | XTRFY M42 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| Software | W10 x64 |

Yesterday I installed my 7900XT and it has been a flawless experience; I was also pleasantly surprised by the GUI and options within the Adrenalin software. Shit just works. It was plug, install, play. Games run the same way.


Coming from 10+ years of Nvidia wasn't an issue either. I removed the old device in Device Manager, deleted the drivers, and installed the new device. Done.

The discussion slides towards an AMD ode. When AMD RX and Intel Arc are at nVidia's level in all aspects, not only in rasterization, the prices will be similar.

When AMD had a competitive product that surpassed the competition, prices were no longer low at all. On the contrary. Examples: the 5600X, the 5800X3D, and the lack of any offering under 200 dollars for Zen 3 and Zen 4. For Zen 3 those came at the sunset of the series; for Zen 4 they are still missing.

Even now, nVidia has launched four new models, mainstream and high-end, while AMD has only two, both high-end.


- Joined

- Sep 17, 2014

- Messages

- 21,042 (5.96/day)

- Location

- The Washing Machine

| Processor | i7 8700k 4.6Ghz @ 1.24V |
|---|---|
| Motherboard | AsRock Fatal1ty K6 Z370 |
| Cooling | beQuiet! Dark Rock Pro 3 |
| Memory | 16GB Corsair Vengeance LPX 3200/C16 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Samsung 850 EVO 1TB + Samsung 830 256GB + Crucial BX100 250GB + Toshiba 1TB HDD |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Fractal Design Define R5 |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | XTRFY M42 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| Software | W10 x64 |

A 5800X3D plus a board puts you at 400,-, and that's pretty competitive for a top-end gaming CPU. And it'll max out performance on a simple air cooler too. Though in general, you're absolutely correct: AMD's 'past gen' definitely sees bigger price cuts.


- Joined

- Feb 20, 2019

- Messages

- 7,426 (3.88/day)

| System Name | Bragging Rights |
|---|---|
| Processor | Atom Z3735F 1.33GHz |
| Motherboard | It has no markings but it's green |
| Cooling | No, it's a 2.2W processor |
| Memory | 2GB DDR3L-1333 |
| Video Card(s) | Gen7 Intel HD (4EU @ 311MHz) |
| Storage | 32GB eMMC and 128GB Sandisk Extreme U3 |
| Display(s) | 10" IPS 1280x800 60Hz |
| Case | Veddha T2 |
| Audio Device(s) | Apparently, yes |
| Power Supply | Samsung 18W 5V fast-charger |
| Mouse | MX Anywhere 2 |
| Keyboard | Logitech MX Keys (not Cherry MX at all) |
| VR HMD | Samsung Odyssey, not that I'd plug it into this though.... |
| Software | W10 21H1, barely |
| Benchmark Scores | I once clocked a Celeron-300A to 564MHz on an Abit BE6 and it scored over 9000. |

While I mostly agree with you, I wouldn't call a $600 GPU "mainstream" yet. Market data still shows that a very small percentage of people spend more than $400 on a GPU, and the Steam hardware survey seems to indicate that the $149 reigning champion of the mainstream (GTX 1650) has only recently been dethroned.

There's also mounting evidence from manufacturing dates on 4070 GPUs that Nvidia has had these cards ready for a very, very long time and has been intentionally holding them back until the inventory of old stock gets sold. Laptops with the 4070 have been reviewed and on sale since January, and it takes much longer to get a laptop to market than a dGPU, so that correlates with the rumours that desktop 4070 cards have been held back from a 2022 launch to ~~screw over the mainstream market~~ shift unsold, overpriced 30-series inventory first.

Hmm, $600 is clearly too much for the mainstream:

This guy cites a ton of sites, channels, sources - the 4070 is a serious sales flop with nobody willing to spend the asking price.


- Joined

- May 15, 2007

- Messages

- 773 (0.12/day)

| System Name | Daedalus / O'Neill / ZPM Hive |
|---|---|
| Processor | M3 Pro (11/14) / Epyc 7402p / i5 12400F |
| Motherboard | Apple M3 Pro / SM H11SSL-i / TUF B660M-E D4 |
| Cooling | Pure Silence / Noctua NH-U12S TR4 / Noctua NH-D14 |
| Memory | 18GB Unified / 128GB DDR4 / 16GB DDR4 |
| Video Card(s) | M3 Pro / RTX 4070FE (VM) / ARC A750 LE |
| Storage | 512GB NVME / ALOT of SSD's / 1TB NVME |
| Display(s) | 14" 3024x1964 / IPMI / 1440p UW |
| Case | Macbook Pro 14" / NZXT H510 Flow / BC1 Test Bench |
| Audio Device(s) | Onboard / None / Onboard |
| Power Supply | ~ 77w Magsafe / EVGA 750w G3 / HX1000i |
| Mouse | Razer Basilisk |
| Keyboard | Logitech G915 TKL |
| Software | MacOS Sonoma / Proxmox 8 / Win 11 x64 |

I would wait. I bought the Acer A770 Predator thinking I would benefit from PCIe 4.0 over my older RTX 2080 FE (PCIe 3.0) and was so disappointed that I'm back with Nvidia. Sluggish, mouse lag, weird scrolling. Simple programs like IrfanView would shut down with a huge delay, often after a short(ish) freeze. I could go on. It is a beautiful card, but there are just too many things. Also, the Intel updates may not sit well with 3rd-party cards.

I have both an A750 LE and an A770 BiFrost (and have owned an A770 Phantom Gaming, so basically all of them), and I can say I have not experienced any of this. The system outside of gaming felt exactly the same as with either an AMD or Nvidia card (and with either Intel or AMD). Something else was going on to cause that issue.

The "3rd party cards" all run perfectly fine with the Intel drivers, either Beta or WHQL releases. No idea where the idea that they wouldn't came from, as it's not something I have experienced or read about anywhere.


Arc is bad for old games because it only has the latest DirectX; older DirectX versions are emulated.

Yes, AMD is trash: random reboots on my computer when I turn my Smart TV or my receiver off/on, limited to 8-bit (my old 1050 Ti had 10-bit), and no VRR on my Smart TV.

Actually, they are getting crappier with new releases. Hardware acceleration in Kodi was working until about 3 or 4 drivers ago; then they broke it and haven't fixed it since.


Adrenalin is simplified. The UI is nice, but I prefer Nvidia because you can fine-tune settings per game or application.


Yes, Nvidia is not perfect, but the reason they are still top of the line, even with their higher prices, is that they offer the best drivers of all GPU manufacturers.

- Joined

- Nov 11, 2013

- Messages

- 41 (0.01/day)

- Location

- Canada

| System Name | Lovelace |
|---|---|
| Processor | 13th Gen Intel Core i7-13700K |
| Motherboard | ASUS ROG STRIX Z790-E GAMING WiFi @ BIOS 2102 |
| Cooling | Noctua NH-D15 chromax.black w/ 5 fans |
| Memory | 32GB G.Skill Trident Z5 RGB Series RAM @ 7200 MHz |
| Video Card(s) | ASUS TUF Gaming Radeon™ RX 7900 XTX OC Edition |
| Storage | WD_BLACK SN850 1 TB, SN850X 2 TB, SN850X 4 TB |
| Display(s) | TCL 55R617 (2018) |
| Case | Fractal Design Torrent (White) |
| Audio Device(s) | Schiit Magni Heretic & Modi+ / Philips Fidelio X2HR + Sennheiser HD 600 & HD 650 |
| Power Supply | Corsair RM850x Power Supply (2021) |
| Mouse | Razer Quartz Viper Ultimate |
| Keyboard | Razer Quartz Blackwidow V3 |
| Software | Windows 11 Professional 64-bit |

What? AMD’s graphics cards do support 10-bit or even 12-bit color (as shown in one of the screenshots). It depends on a) the display and b) which graphics card you have. And VRR is supported as well… you seem to be out of the loop in terms of what is supported and what isn’t. It just depends on the GPU and monitor as well. Some monitors don’t support FreeSync and instead only support G-Sync. And typically, 10-bit color is best taken advantage of on HDR displays.


And when you encounter driver issues, it’s your responsibility to report them with AMD’s bug report tool, tbh. Same if anyone encounters a driver issue on NVIDIA or Intel: they can't fix these issues if they're not reported, and even then there are higher-priority issues that probably take precedence over HW acceleration in a program like Kodi not working. Additionally, with Adrenalin you can fine-tune settings per game as well, as can be seen in another attached screenshot. So pretty much everything you can do in NVIDIA's control panel can be done in Radeon Software.


- Joined

- Jun 2, 2017

- Messages

- 8,030 (3.16/day)

| System Name | Best AMD Computer |
|---|---|
| Processor | AMD 7900X3D |
| Motherboard | Asus X670E E Strix |
| Cooling | In Win SR36 |
| Memory | GSKILL DDR5 32GB 5200 30 |
| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |
| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |
| Display(s) | GIGABYTE FV43U |
| Case | Corsair 7000D Airflow |
| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |
| Power Supply | Deepcool 1000M |
| Mouse | Logitech g7 gaming mouse |
| Keyboard | Logitech G510 |
| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |
| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

This. This is why I feel that when AMD releases a desktop APU that can do higher than 60FPS at 1080P in all games, it will sell like crazy for $299. Just look at the number of handhelds in the space and also the availability of the Steam Deck. That tells me AMD have already dedicated a certain number of their chips and chiplets to that part of the ecosystem, which is seeing a huge uptake in investment. Things like the Asus Ally and the just-announced PS5 handheld will both be powered by AMD's new APU offerings.


Nvidia has really killed the budget discrete GPU space that we all loved. Even when AMD released the 6500XT, a GPU that cost 18% of a 6800XT and gave great 1080P performance for $200 US, the Nvidia-focused social media army maligned that card because it did not have a built-in media encoder. The thing is, at the same time they all talked about the 1080P performance. Then you go on Amazon, Newegg or B&H, read the reviews, and see that people who have bought that card actually enjoy it. The narrative cannot last, though. It's not just the Hardware Unboxed video; Nvidia has also, with their product choices, done their best to kill the budget market. In 2021, for my 50th birthday, I bought myself a gaming laptop. It came with a mobile version of the 5800X and a 3060 with 6 GB of VRAM. I made sure I got a 1080P screen, though, so that I could enjoy high frame rates. In 2022 the same laptop was (basically) en masse changed to the 3050 and cost $100 more. Now we are going to see 8 GB cards for $400 US in a world where memory manufacturers have plenty of inventory, so I'm sure there are deals to be had.

Intel is actually trying to make inroads, which is a good thing, but the Arc cards are not yet ready to be put into handhelds. They are also at least 2 years behind Nvidia in RT and 3-4 years behind AMD in raw performance. $300 for a GPU with 16GB of VRAM is nice, but unless it also gives a proper hash rate (mining is a part of computing) it will not catch on.

Before people wax on about the importance of RT, let me ask one question: how many games support raw "rasterization" vs RT? I won't mention FSR, and therefore DLSS, because my 7900XT has no issue playing at 4K, and when you have a Mini LED or newer OLED (brightness) you don't need those accoutrements to marvel at high-res gaming.

There is also the giant ocean of fish, more important than gaming, that affect gaming. At the end of the day, PC gaming is a luxury item. Don't get me wrong, you could buy a $60 MB vs a $400 MB and enjoy the same experience, but GPUs have gone insane. The reason I give Nvidia no love is how they priced the 3090 like an enterprise card and put it in the consumer space, but a year later released a card and a narrative that suddenly your 3090 is garbage vs the new node with the same amount of VRAM.


With my Smart TV, my old 1050 Ti supports 10-bit; my crap 6400 doesn't.

My Smart TV is VRR, not FreeSync and not G-Sync. With Nvidia cards you can enable VRR; not with my crappy 6400.

I reported the bugs, and guess what: it has been a year since I reported those issues and they are still there.

By the way, I also reported those issues in their forum, which is pretty much dead; nobody from AMD reads it.

Keep your crappy AMD, I'll go back to Nvidia as soon as they release their 4050.

This. This is why I feel when AMD releases an APU that can do 1080P higher than 60FPS in all Games for the Desktop it will sell like crazy for $299. Just look at the number of Handhelds that are in the space and also the availability of the Steam Deck. That speaks to me that AMD have already dedicated a certain number of their chips and chiplets to that part of the ecosystem that is seeing a huge uptake in investment. Things like the Asus Ally and the just announced PS5 handheld will both be powered by AMD's new APU offerings.

Nvidia has really killed the budget Discrete GPU space that we all loved. Even when AMD released a GPU that cost 18% of a 6800XT called the 6500XT that gave great 1080P performance for $200 US the Nvidia focused social media army maligned that card because it did not have a built in media encoder. The thing about that is that at the same time they all talked about the 1080P performance. Then you go on Amazon, Newegg, B&H and read reviews and see that people who have bought that card actually enjoy them. The narrative cannot last though. It's not just the Hardware Unboxed video but also Nvidia has, with their product choices done their best to kill the budget market. In 2021 for my 50th Bday I bought myself a Gaming laptop. It came with a 5800X mobile version and a 3060 with 6 GB of VRAM. I made sure I got a 1080P screen though so that I could enjoy high frame rates. In 2022 the same laptop was (basically) en masse changed to the 3050 and were $100 more. Now we are going to see 8 GB cards for $400 US in a World where Memory manufacturers have plenty of inventory so I'm sure there are deals to be had.

Intel is actually trying to make inroads, which is a good thing, but Arc cards are not yet ready to be put into handhelds. They are also at least 2 years behind Nvidia in RT and 3-4 years behind AMD in raw performance. $300 for a GPU with 16GB of VRAM is nice, but unless it also delivers a proper hash rate (mining is a part of computing), it will not catch on.

Before people wax on about the importance of RT, let me ask one question: how many games support raw rasterization versus RT? I won't mention FSR, and therefore DLSS, because my 7900XT has no issue playing at 4K, and when you have a Mini LED or a newer, brighter OLED you don't need those accoutrements to marvel at high-res gaming.

There is also a giant ocean of bigger fish, more important than gaming, that affect gaming. At the end of the day, PC gaming is a luxury. Don't get me wrong, you could buy a $60 motherboard instead of a $400 one and enjoy the same experience, but GPU prices have gone insane. The reason I give Nvidia no love is that they priced the 3090 like an enterprise card and put it in the consumer space, then a year later released a new card along with a narrative that suddenly your 3090 is garbage compared to the new node with the same amount of VRAM.

Yes, Nvidia killed budget GPUs.

Now I hope Intel really fixes what AMD doesn't want to fix or can't fix, and if Intel can release a product to compete in the handheld market, that would be good news.


- Joined

- Feb 20, 2019

- Messages

- 7,426 (3.88/day)

| System Name | Bragging Rights |
|---|---|
| Processor | Atom Z3735F 1.33GHz |
| Motherboard | It has no markings but it's green |
| Cooling | No, it's a 2.2W processor |
| Memory | 2GB DDR3L-1333 |
| Video Card(s) | Gen7 Intel HD (4EU @ 311MHz) |
| Storage | 32GB eMMC and 128GB Sandisk Extreme U3 |
| Display(s) | 10" IPS 1280x800 60Hz |
| Case | Veddha T2 |
| Audio Device(s) | Apparently, yes |
| Power Supply | Samsung 18W 5V fast-charger |
| Mouse | MX Anywhere 2 |
| Keyboard | Logitech MX Keys (not Cherry MX at all) |
| VR HMD | Samsung Oddyssey, not that I'd plug it into this though.... |
| Software | W10 21H1, barely |
| Benchmark Scores | I once clocked a Celeron-300A to 564MHz on an Abit BE6 and it scored over 9000. |

I don't have any first-hand experience with the RX 6400, but my understanding is that it and the 6500 would never have existed outside of the pandemic/ETH-mining scalpocalypse.


They were never designed to be dGPUs. They are laptop chips designed to complement APUs and are lacking a lot of things we'd normally expect from a graphics card's display engine, because all of those things would be redundant duplicates alongside an APU.

@evelynmarie probably picked a bad example there because the 6400 and 6500 are possibly the two most cut-down, incomplete, actually half-baked cards AMD have released to market in a very long time. The 6600 was supposed to be the bottom of the product stack, and that's a fantastic example of a cheap, do-everything GPU that works amazingly. I dumped one in my HTPC briefly in 2022 and it was unbelievably capable for its price and power draw, the drivers were absolutely flawless while I was testing it, and the damn thing was both tiny and quiet.

- Joined

- Nov 11, 2013

- Messages

- 41 (0.01/day)

- Location

- Canada

| System Name | Lovelace |
|---|---|
| Processor | 13th Gen Intel Core i7-13700K |
| Motherboard | ASUS ROG STRIX Z790-E GAMING WiFi @ BIOS 2102 |
| Cooling | Noctua NH-D15 chromax.black w/ 5 fans |
| Memory | 32GB G.Skill Trident Z5 RGB Series RAM @ 7200 MHz |
| Video Card(s) | ASUS TUF Gaming Radeon™ RX 7900 XTX OC Edition |
| Storage | WD_BLACK SN850 1 TB, SN850X 2 TB, SN850X 4 TB |
| Display(s) | TCL 55R617 (2018) |
| Case | Fractal Design Torrent (White) |
| Audio Device(s) | Schiit Magni Heretic & Modi+ / Philips Fidelio X2HR + Sennheiser HD 600 & HD 650 |
| Power Supply | Corsair RM850x Power Supply (2021) |
| Mouse | Razer Quartz Viper Ultimate |
| Keyboard | Razer Quartz Blackwidow V3 |
| Software | Windows 11 Professional 64-bit |

The 6400 literally supports VRR according to its specifications. So yes, you CAN enable VRR. You just have to do it from within Windows, not from Adrenalin Software: go to Windows Settings -> System -> Display -> Graphics Settings -> Variable Refresh Rate and toggle it on.


Also, AMD is not crappy. I literally said this already. I had a GTX 1080 before I got the 6700 XT. My 1080 served me well, and my 6700 XT has served me well so far. I don't appreciate having my hardware insulted for no reason.

I didn't pick a bad example. AMD just relies on Windows' native controls to toggle features like Variable Refresh Rate, which the 6400 and 6500 both support. NVIDIA, however, likes to unnecessarily duplicate that functionality in their outdated-looking control panel.
