What's up, peeps?

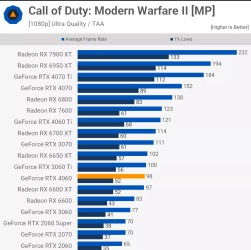

What's the best GPU that can run Warzone at 1080p on a mix of low/medium settings at 144 fps, and keep doing so for 4 years?

Specs:

Intel Core i5-13400F

ASUS Prime B660M-K D4

2×8 GB DDR5 5200 MHz

EVGA SuperNOVA 750 GT 80+ Gold
