Doffy

New Member

- Joined: Sep 6, 2023
- Messages: 13 (0.02/day)
- Location: Brasil, DF.

So I have an RTX 3050 mobile. It's actually a really tough card, capable of about 6.9 TFLOPS without an overclock and about 7.7 TFLOPS with both the memory and the chip overclocked (core: 1695 MHz to 1875 MHz, memory: 5500 MHz to 6600 MHz; that's +180 MHz and +1100 MHz respectively).

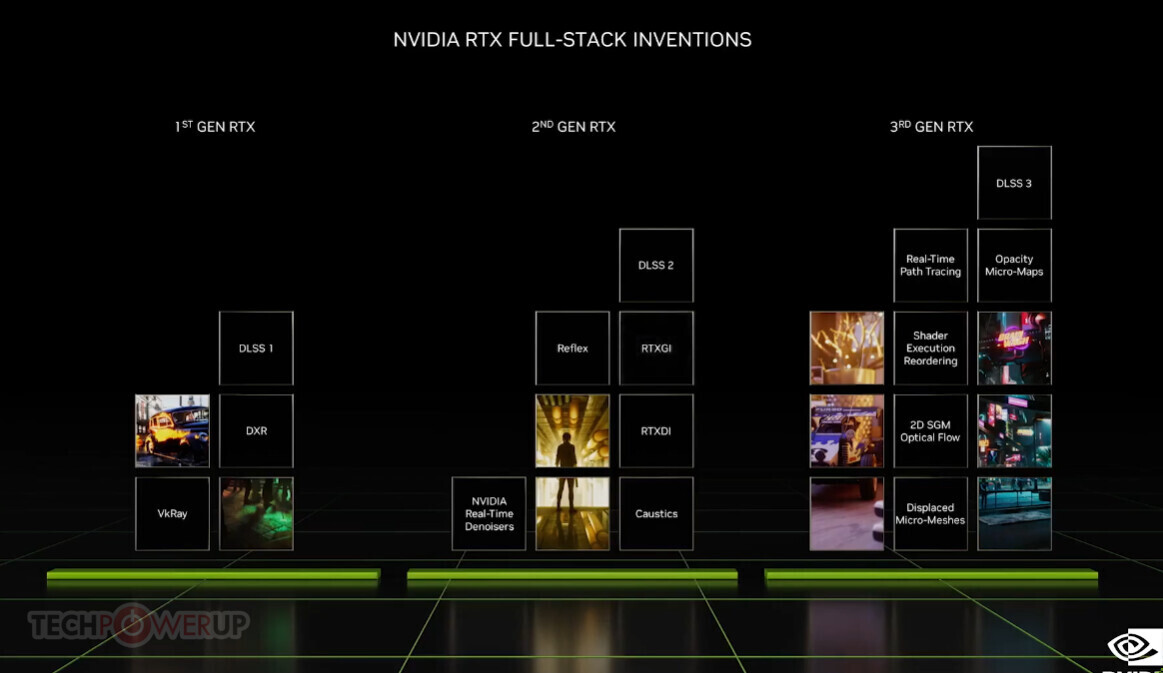

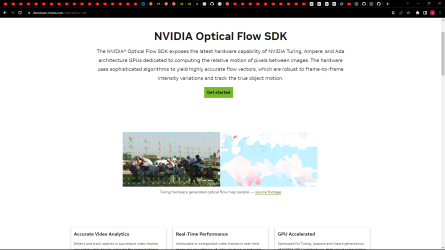
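For anyone who wants to check the TFLOPS numbers, here is a rough sketch of the usual theoretical FP32 estimate (shader cores x 2 FLOPs per clock x clock speed). The core count of 2048 is an assumption on my side for the RTX 3050 mobile, it is not read from the screenshots, and the function name is just made up for illustration. Real sustained clocks under load will vary, so treat this as a ballpark.

```python
def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each core retires one fused multiply-add per cycle,
    which is counted as 2 floating-point operations.
    """
    flops_per_second = cuda_cores * 2 * clock_mhz * 1e6
    return flops_per_second / 1e12


# Assumption: 2048 shader cores for the RTX 3050 mobile (not stated in the post).
CUDA_CORES = 2048

stock = fp32_tflops(CUDA_CORES, 1695)        # stock core clock from the post
overclocked = fp32_tflops(CUDA_CORES, 1875)  # +180 MHz core overclock

print(f"stock:       {stock:.2f} TFLOPS")
print(f"overclocked: {overclocked:.2f} TFLOPS")
print(f"gain:        {(overclocked / stock - 1) * 100:.1f}%")
```

Note that this estimate only depends on the core clock. The memory overclock helps bandwidth-bound workloads but does not show up in a TFLOPS figure.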

And in advance: yeah, I know the "You CaNt beCauSe yoU doNT hAvE oPtiCaL FloW on 3000s" line. That's bullshit. These cards have had an OFA (optical flow accelerator) since the 2000 series. It's just the usual "2X performance" bedtime story every 2 years.

But the 4000 series doesn't have anything really new, just a better node and a better L2 cache. They didn't even increase the performance per watt on the mobile cards, forcing the battery designers to use meth to deliver a new battery model with 200W+ capability that doesn't melt the whole room, and they didn't increase the bandwidth on the NEW cards either. Sounds like a lazy job to me.

As such, I PERSONALLY THINK that to prove a given piece of software cannot run on the LAST GEN cards, even one delivering a 30% performance increase there, an "ethical" company should release that specific software so consumers can test it themselves. (I CAN SWALLOW INTEL OR AMD REWORKING THINGS AND USING NEW NODES IN A TOTALLY NEW ARCHITECTURE, BUT WHEN YOU CHANGE A TRANSISTOR OR TWO AND CLAIM YOU REMADE A SINGLE COMPONENT SO MUCH THAT ANYTHING ELSE WILL RUN IT LIKE AN R3000 RUNNING CYBERPUNK, I DON'T!) So if anyone has any means to get it, please share your software/hardware mage knowledge.

I'm open to discussing the new lazy AD1xx architecture. I think making things smarter leads to evolution, but by no means do I think smarter = lazy. On the contrary, I think smarter work should work even harder than average work to deliver a smarter, massive output. I know the bonobos at the npimba office won't get a bonus for doing this, but if they do get one for the lazy route, then on both the personal and the corporate side that's not a flag you should carry around and be proud of.
