Mussels

Freshwater Moderator


| System Name | Rainbow Sparkles (power efficient, <350 W gaming load) |
|---|---|
| Processor | Ryzen 7 5800X3D (undervolted, 4.45 GHz all-core) |
| Motherboard | ASUS X570-F (BIOS modded) |
| Cooling | Alphacool Apex UV - Alphacool Eisblock XPX Aurora + EK Quantum ARGB 3090 w/ active backplate |
| Memory | 2x32GB DDR4 3600 Corsair Vengeance RGB @ 3866 C18-22-22-22-42 tRFC 704 (1.4 V Hynix MJR, SoC 1.15 V) |
| Video Card(s) | Galax RTX 3090 SG 24GB: underclocked to 1700 MHz at 0.750 V (375 W down to 250 W) |
| Storage | 2TB WD SN850 NVMe + 1TB Samsung 970 Pro NVMe + 1TB Intel 6000P NVMe USB 3.2 |
| Display(s) | Philips 32M1N5800A (4K144), LG 32" (4K60), Gigabyte G32QC (2K165), Philips 328M6FJRMB (2K144) |
| Case | Fractal Design R6 |
| Audio Device(s) | Logitech G560, Corsair Void Pro RGB, Blue Yeti mic |
| Power Supply | Fractal Ion+ 2 860W (Platinum) (This thing is God-tier. Silent and TINY) |
| Mouse | Logitech G Pro Wireless + SteelSeries Prisma XL |
| Keyboard | Razer Huntsman TE (sexy white keycaps) |
| VR HMD | Oculus Rift S + Quest 2 |
| Software | Windows 11 Pro x64 (yes, it's genuinely a good OS), OpenRGB - ditch the branded bloatware! |
| Benchmark Scores | Nyooom. |

It's already leaked out in other parts of the forum: I'm TPU's latest motherboard reviewer.

Part of the changes being brought in is to speed up the reviews - finding ways to streamline the process so we get reviews out faster without compromising the content.

Personally, I think there's a lot of duplicated CPU and GPU testing going on and I want to trim that down, but it's a fine line deciding where to stop.

We already have detailed 14900K and RTX 4090 reviews, so how much of that needs re-testing in every motherboard review?

Don't we need to just test enough to make sure they're not being throttled or held back in some way, instead of re-running every single benchmark and test again?

I'm after feedback and thoughts on this, so that my time is spent where it matters to the readers.

A lot of time goes into the photos and getting the article itself looking good.

Things take a lot longer there than you'd assume: those graphs and charts take manual time and effort, although I am working with W1zz on automating more of that.

He's a machine, but I personally don't speak HTML as a second language, so just assume that however long you think these things take, it's at least triple that.

CPU testing and redundancy:

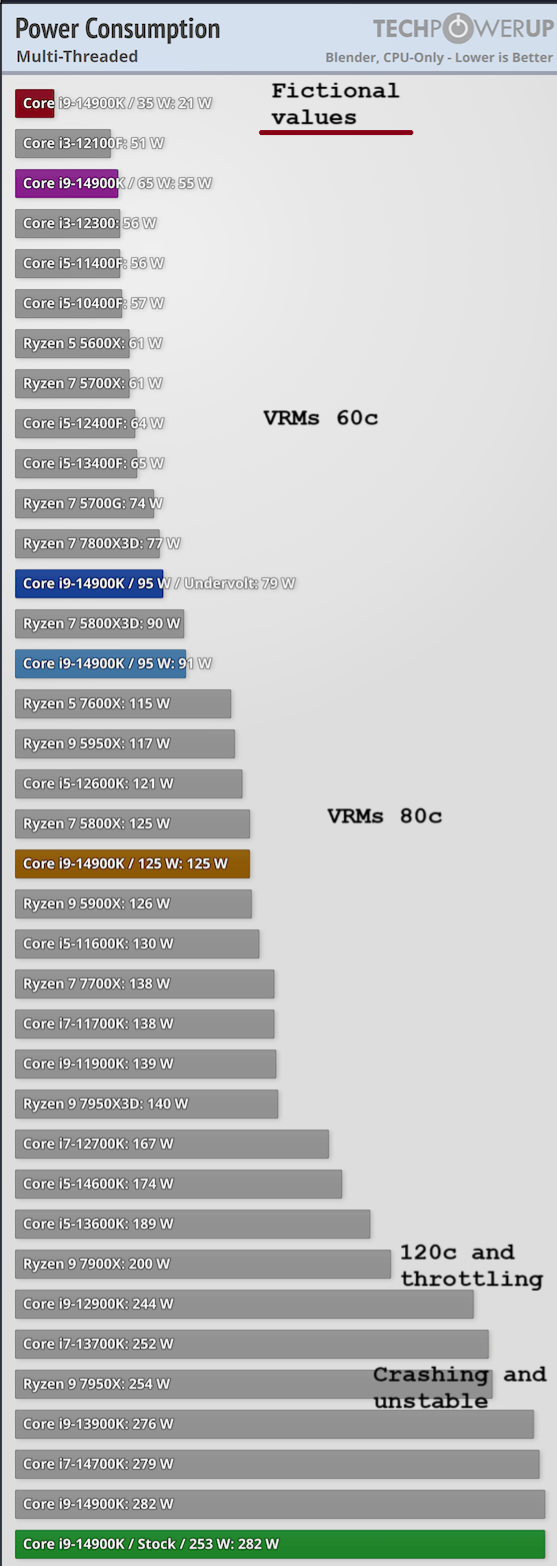

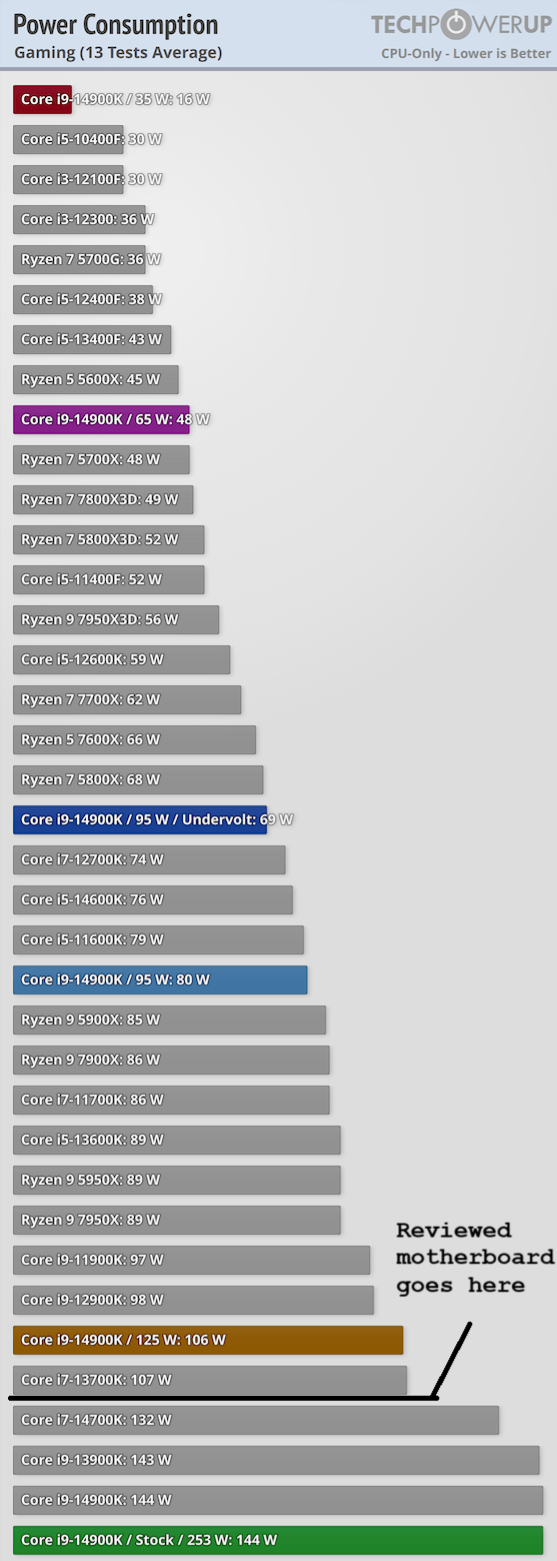

Stock BIOS settings versus changed/removed power limits is obviously an important comparison on both AMD and Intel platforms these days.

That said, we already have the performance values for this from the CPU reviews themselves.

Unless it's a heavy multi-core workload, it doesn't make any difference: if a board can handle 125 W, it can power a 14900K in a gaming-only system.

TechPowerUp: "Intel Core i9-14900K Raptor Lake Tested at Power Limits Down to 35 W" (Minimum FPS page)

Excuse the ugly MS Paint; it's only there to express the concept without a solid wall of text.

Once you know the wattage a motherboard's VRMs can sustain, is TPU's existing testing enough to compare against? If not, why not?

Knowing the wattage a board can sustain tells us which CPUs run on it problem-free, so can we simply compare that against the measured power consumption of compatible CPUs?

Fictional example below

Only Intel 12th/13th/14th gen would be included, as those are the compatible CPUs.

With the fictional example above, it'd be easy to judge that board as enough for any gaming system, even with a 14900K.

Anyone wanting unlocked power limits instead of stock for multi-threaded workloads would want to look elsewhere or actively cool those VRMs.
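To make the comparison concrete, here's a minimal Python sketch, assuming you already have a sustained wattage for the board from the VRM testing and a set of measured power draws for compatible CPUs. The function name `fits_board`, the `headroom` parameter and the CPU wattages below are hypothetical placeholders, not TPU data; only the 125 W board figure comes from the post above.

```python
# Hypothetical helper: which CPU/workload pairs fit under a board's sustained VRM wattage.
# The draw figures fed in below are placeholders, not TPU measurements.

def fits_board(sustained_watts: float, cpu_draw_watts: dict, headroom: float = 0.0) -> dict:
    """Return {cpu_or_workload: True/False} for draws at or under the usable limit.

    headroom reserves a fraction of the sustained limit, e.g. 0.1 keeps 10% spare.
    """
    if sustained_watts <= 0:
        raise ValueError("sustained_watts must be positive")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    usable = sustained_watts * (1.0 - headroom)
    return {name: draw <= usable for name, draw in cpu_draw_watts.items()}


# Fictional numbers in the spirit of the example: a board that sustains 125 W.
example = fits_board(125.0, {
    "CPU A, gaming (hypothetical)": 110.0,
    "CPU A, all-core unlocked (hypothetical)": 250.0,
})
print(example)
```

The `headroom` knob is there because a board running right at its sustained limit leaves nothing for transient spikes; whether to use it at all is a judgment call.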

VRM efficiency is part of the reviews. I've already found some VRMs that waste 20% and up as heat, and the higher the wattage, the worse they do. VRM efficiency is easy to rate and compare separately from temperatures.
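As a sketch of how an efficiency figure turns into heat, assuming "waste 20%" means 20% of the power going *into* the VRMs (an efficiency of 0.80). `vrm_heat` is a hypothetical helper, and the 125 W and 250 W loads are just example inputs.

```python
# Sketch: turn a VRM efficiency figure into input power and heat.
# Assumes "wastes 20% as heat" means 20% of the VRM's *input* power (efficiency = 0.80).

def vrm_heat(output_watts: float, efficiency: float) -> tuple:
    """Return (input_watts, heat_watts) for a VRM delivering output_watts to the CPU."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    input_watts = output_watts / efficiency   # efficiency = output / input
    heat_watts = input_watts - output_watts   # whatever isn't delivered ends up as heat
    return input_watts, heat_watts


for cpu_watts in (125.0, 250.0):
    drawn, heat = vrm_heat(cpu_watts, 0.80)
    print(f"{cpu_watts:.0f} W to the CPU -> {drawn:.2f} W drawn, {heat:.2f} W of VRM heat")
```

The sketch holds efficiency fixed; since efficiency drops as wattage rises, a real comparison would plug in the efficiency measured at each load step.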

Question 1 to you guys:

Is synthetic testing of the VRMs to find a motherboard's power limits enough to compare against the existing CPU benchmarks?

If not, what *exactly* do you think should be tested, and why? Please include program/game examples and a reason why they need re-testing.
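One possible way to boil a synthetic VRM run down to a single sustained wattage, sketched in Python under assumptions: the CPU is stepped through increasing power limits, and each step logs package power, peak VRM temperature and average all-core clock. The `LoadStep` record, the 100 °C cut-off, the 3% clock-drop rule and the logged values are all hypothetical choices, not an established TPU method.

```python
from dataclasses import dataclass


@dataclass
class LoadStep:
    package_watts: float  # CPU package power held during this step
    vrm_temp_c: float     # peak VRM temperature logged during the step
    clock_mhz: float      # average all-core clock logged during the step


def sustained_watts(steps, max_vrm_temp_c=100.0, max_clock_drop=0.03):
    """Highest power step passed before the VRMs overheat or clocks fall back.

    Steps are checked from lowest to highest power; the first failing step ends
    the search, so everything below the returned value also passed.
    Returns None if even the lowest step fails (or no steps were logged).
    """
    best = None
    prev_clock = None
    for step in sorted(steps, key=lambda s: s.package_watts):
        too_hot = step.vrm_temp_c > max_vrm_temp_c
        # More power should never mean lower clocks; a drop suggests throttling.
        throttled = prev_clock is not None and step.clock_mhz < prev_clock * (1.0 - max_clock_drop)
        if too_hot or throttled:
            break
        best = step.package_watts
        prev_clock = step.clock_mhz
    return best


# Made-up log for illustration only.
log = [LoadStep(125, 71, 5000), LoadStep(150, 80, 5200),
       LoadStep(200, 94, 5400), LoadStep(253, 104, 5500)]
print(sustained_watts(log))  # 200: the 253 W step breaks the 100 °C limit
```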

RAM testing is simple since it's not about overclocking.

This is all about the out-of-the-box experience, not manual RAM overclocking. I've got plenty of fast DDR5 to test their limits with.

Once those limits are found, however...

Question 2: AIDA64 is an obvious inclusion, but which RAM bandwidth/latency-sensitive games/programs matter the most to you?

Swapping RAM sticks and recovering from failed boots and such is manual work that wastes a ton of time, so simpler is better.
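For putting AIDA64 numbers into context, theoretical peak bandwidth and first-word CAS latency are simple arithmetic. This sketch assumes a typical desktop dual-channel setup (2 × 64-bit; a DDR5 DIMM's two 32-bit subchannels add up to the same 64 bits), and the helper names are hypothetical. Measured results land below the theoretical peak, so the ratio is better suited to comparing boards against each other than to a pass/fail.

```python
def peak_bandwidth_gbs(mt_per_s: float, channels: int = 2, bits_per_channel: int = 64) -> float:
    """Theoretical peak in GB/s (decimal): transfers/s x bytes per transfer x channels."""
    return mt_per_s * 1e6 * (bits_per_channel / 8) * channels / 1e9


def cas_latency_ns(cl: int, mt_per_s: float) -> float:
    """First-word CAS latency in ns. DDR moves data twice per clock, so clock MHz = MT/s / 2."""
    return cl * 2000.0 / mt_per_s


def fraction_of_peak(measured_gbs: float, mt_per_s: float, channels: int = 2) -> float:
    """How close a measured read result (e.g. from AIDA64) gets to the theoretical peak."""
    return measured_gbs / peak_bandwidth_gbs(mt_per_s, channels)


# Example inputs: DDR5-6000 CL30 in dual channel.
print(f"{peak_bandwidth_gbs(6000):.1f} GB/s peak, {cas_latency_ns(30, 6000):.1f} ns CAS")
```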

Question 3: What parts of the *chipset* matter to you for focused testing?

Intel and AMD both build more and more of the system into the CPU itself, from integrated graphics to SATA ports and PCI-E lanes that run directly from the CPU to M.2 slots; these are not part of the motherboard.

Modern chipsets are little more than a PCI-E switch that spreads bandwidth between lower-priority components, and it seems very few people care about things like networking and onboard audio, since it's easy to find reviews of those specific components elsewhere, or to replace them outright.
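To illustrate the "PCI-E switch" point: everything behind the chipset shares one uplink to the CPU (DMI on Intel boards), so it's easy to work out when the devices hanging off it can't all run flat out together. A sketch, assuming PCIe 3.0 or newer with 128b/130b encoding and ignoring packet overhead; the PCIe 4.0 x4 uplink and the two drives are a hypothetical board, and uplink widths vary between chipsets.

```python
# PCIe transfer rate per lane (GT/s) by generation; 3.0 and newer use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def link_gbs(gen: int, lanes: int) -> float:
    """One-direction link bandwidth in GB/s after line encoding (packet overhead ignored)."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


def uplink_oversubscription(uplink_gen: int, uplink_lanes: int, downstream_gbs) -> float:
    """Combined downstream demand divided by the chipset's uplink to the CPU.

    Above 1.0, devices behind the chipset can't all run flat out at the same time.
    """
    return sum(downstream_gbs) / link_gbs(uplink_gen, uplink_lanes)


# Hypothetical board: PCIe 4.0 x4 chipset uplink, two PCIe 4.0 x4 NVMe drives behind it.
drives = [link_gbs(4, 4), link_gbs(4, 4)]
print(f"uplink {link_gbs(4, 4):.2f} GB/s, oversubscription {uplink_oversubscription(4, 4, drives):.1f}x")
```

Oversubscription only matters when devices are busy at the same time, which is why a single drive in a chipset slot can still test fine.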

I do intend to test USB 3.2/4.0 performance with external NVMe drives where possible, but USB 4.0 is so new that devices are hard to get access to at this time.

NVMe testing will compare CPU lanes against chipset lanes to look for a bottleneck.
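For the CPU-lanes vs chipset comparison, a minimal sketch of how the results could be judged, assuming the same drive and the same benchmark are run several times in each slot. The function names, the use of the median, the 5% noise tolerance and the MB/s figures are all hypothetical placeholders.

```python
from statistics import median


def chipset_penalty(cpu_runs_mbs, chipset_runs_mbs) -> float:
    """Fractional slowdown of the chipset slot vs the CPU slot, using the median of repeated runs."""
    cpu = median(cpu_runs_mbs)
    chipset = median(chipset_runs_mbs)
    if cpu <= 0:
        raise ValueError("CPU-slot result must be positive")
    return (cpu - chipset) / cpu


def is_bottlenecked(cpu_runs_mbs, chipset_runs_mbs, tolerance=0.05) -> bool:
    """Flag a bottleneck when the chipset slot trails by more than the tolerance.

    The 5% default is an assumed allowance for run-to-run noise, not a TPU standard.
    """
    return chipset_penalty(cpu_runs_mbs, chipset_runs_mbs) > tolerance


# Made-up sequential read results (MB/s), same drive and benchmark moved between slots.
cpu_slot = [7000, 7050, 6980]
chipset_slot = [6300, 6250, 6350]
print(f"{chipset_penalty(cpu_slot, chipset_slot):.0%} slower, "
      f"bottleneck: {is_bottlenecked(cpu_slot, chipset_slot)}")
```

Using the median of a few runs keeps one outlier pass from flagging (or hiding) a bottleneck.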
