- Joined: Sep 10, 2018
- Messages: 8,276 (3.35/day)
- Location: California

| System Name | His & Hers |
|---|---|
| Processor | R7 5800X/ R7 7950X3D Stock |
| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |
| Cooling | Corsair h150 elite/ Corsair h115i Platinum |
| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |
| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |
| Storage | lots of SSD. |
| Display(s) | A whole bunch OLED, VA, IPS..... |
| Case | 011 Dynamic XL/ Phanteks Evolv X |
| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |
| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |
| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |
| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |

Yeah, 349 USD will be for the first 10 people (who will then spend all their time praising it online), and 400 USD or more for the rest.

The 5060 still has no competition at 300 USD.

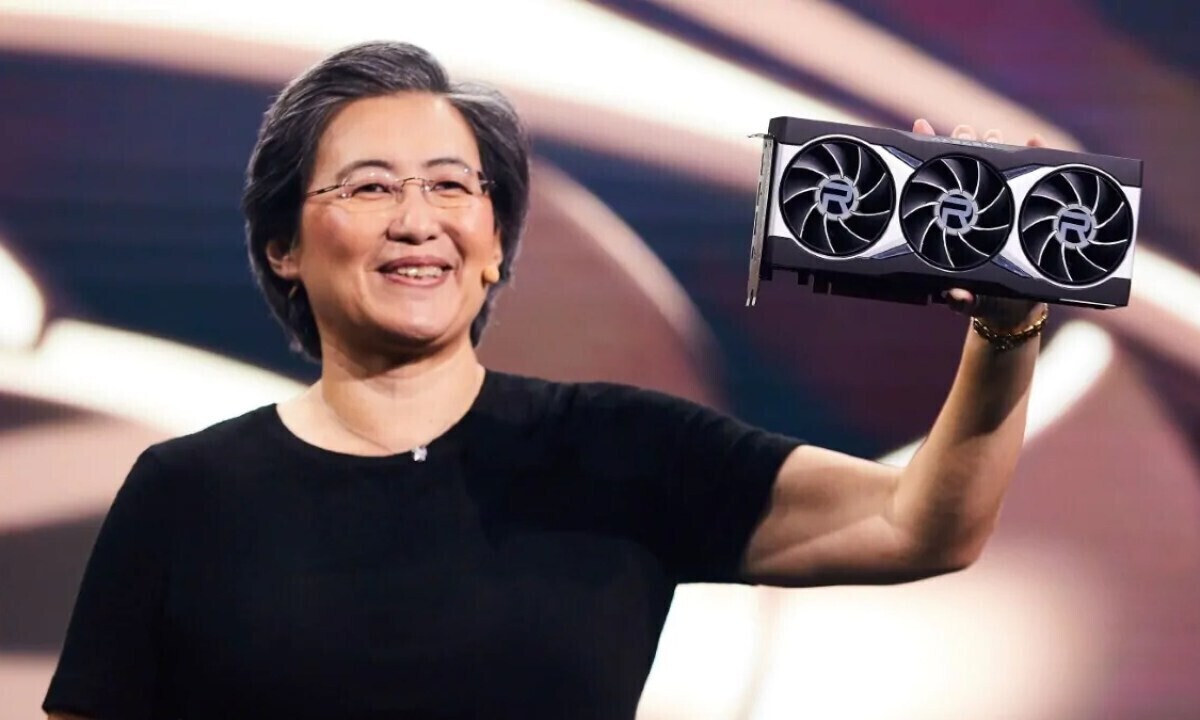

The 8GB AMD card will have the same core as the 350 USD one that's supposed to be faster than the 5060 Ti... They will likely be more desirable, so I doubt they will hit their MSRPs though.

8GB is OK, right... Right? lmao.