
WHAT? all the whining, crying, gnashing of teeth...

| System Name | Godrillamobile |

|---|---|

| Processor | 9800 x 3d @5.425 ghz |

| Motherboard | MSI x870e Tomahawk |

| Cooling | Lianli GA Trinity 2 x360 |

| Memory | Gskill 32 gig ddr5 (cl 28 oc from cl30 ) 6ghz |

| Video Card(s) | 5090 PNY stock 4 now |

| Storage | 990 pro 2 tera as prime and 970 evo plus 2 tera as secondary |

| Display(s) | 5 year cx 48 inches |

| Case | Motech |

| Audio Device(s) | SteelSeries 7.1 wired headset with dac |

| Power Supply | seasonic vertex 1000 atx 3.0 psu with single 12 volt dedicated gpu wire cable |

| Mouse | g502x wired |

| Keyboard | corsair rgb full |

| Software | wind 11 64 bit |

> WHAT? all the whining, crying, gnashing of teeth..

Delayed learning curve.

| Processor | Ryzen 7 9800X3D |

|---|---|

| Motherboard | MSI MAG X870 Tomahawk WIFI |

| Cooling | Custom watercooling loop with Watercool MO-RA3 420 |

| Memory | 96GB DDR5-6000 |

| Video Card(s) | Zotac RTX 4090 AIRO |

| Storage | Intel Optane DC P4800X 1.5TB + Micron 7400 PRO 3.84TB + KIOXIA CD6-R 7.68TB + Micron 9200 ECO 11TB |

| Display(s) | Dell UltraSharp U4025QW |

| Case | Fractal Design Vector RS |

| Audio Device(s) | Canton CT 800, beyerdynamic DT 990 Pro, Audio Technica AT3035 |

| Power Supply | Cooler Master X Silent Edge Platinum 1100 |

| Mouse | pwnage Stormbreaker |

| Keyboard | Wooting 80HE (zinc case version) |

> The RX 9070 is now 599€ new and faster than the RTX 3090, which is worth 400€; old and used is fine.

Where can you get a 3090 for 400€?

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

I don't know the AI benchmarks, but the RTX 3090 is really falling back due to lower clocks and high power consumption:

View attachment 405742

For 699 you are getting the RX 9070 XT, which is 46% faster, for example. No way should people be buying the RTX 3090 for 700€, it's insane. The better 7900 XTX 24GB is going for 899 to 999 brand new, with better AI capabilities than the old RTX 3090.

People are just overpaying for Nvidia, it's crazy.

| Processor | Ryzen 7 9800X3D |

|---|---|

| Motherboard | MSI MAG X870 Tomahawk WIFI |

| Cooling | Custom watercooling loop with Watercool MO-RA3 420 |

| Memory | 96GB DDR5-6000 |

| Video Card(s) | Zotac RTX 4090 AIRO |

| Storage | Intel Optane DC P4800X 1.5TB + Micron 7400 PRO 3.84TB + KIOXIA CD6-R 7.68TB + Micron 9200 ECO 11TB |

| Display(s) | Dell UltraSharp U4025QW |

| Case | Fractal Design Vector RS |

| Audio Device(s) | Canton CT 800, beyerdynamic DT 990 Pro, Audio Technica AT3035 |

| Power Supply | Cooler Master X Silent Edge Platinum 1100 |

| Mouse | pwnage Stormbreaker |

| Keyboard | Wooting 80HE (zinc case version) |

> I don't know the AI benchmarks

But I do know the AI benchmarks, and I'm telling you that AI is the reason the 3090 (Ti) is still so popular. The ratio of memory capacity and bandwidth to cost is unbeatable even at 700€.

> Where can you find a 5090 for 2000??

MSRP

Most 5080 are between 1500-2000.

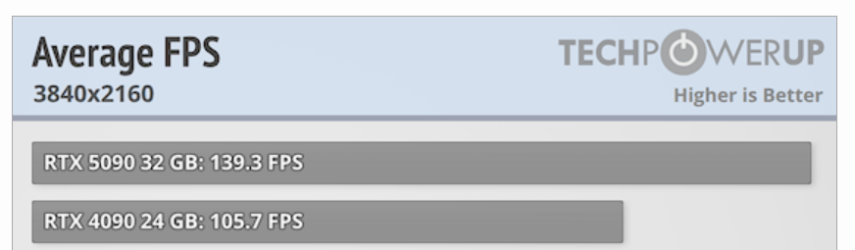

> It's a 25% price increase for an average of 16% more performance (assuming you are running at full wattage) while using 34% more power. The 4090 was overpriced yes but by comparison the 5090 is vastly more so.

16% isn’t quite accurate. Sure, it varies, so it really depends on what game you’re playing. And my Strix 4090 used to pull well over 450W at the time.
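To make the disputed figures concrete, here is a minimal sketch of the arithmetic behind the "25% more price, 16% more performance, 34% more power" claim. It only restates those three percentages as ratios; the function and variable names are made up for illustration, and the 16% figure itself is contested in this thread.

```python
def relative_change(benefit_gain: float, cost_gain: float) -> float:
    """Change in benefit-per-cost when benefit and cost both grow.

    Both arguments are fractional increases, e.g. 0.25 for +25%.
    """
    return (1 + benefit_gain) / (1 + cost_gain) - 1


# Figures taken from the quoted post (5090 relative to 4090).
PRICE_GAIN = 0.25   # +25% price
PERF_GAIN = 0.16    # +16% average performance (disputed in the thread)
POWER_GAIN = 0.34   # +34% power draw

perf_per_dollar = relative_change(PERF_GAIN, PRICE_GAIN)
perf_per_watt = relative_change(PERF_GAIN, POWER_GAIN)

print(f"Performance per dollar: {perf_per_dollar:+.1%}")
print(f"Performance per watt:   {perf_per_watt:+.1%}")
```

With those inputs, performance per dollar drops by roughly 7% and performance per watt by roughly 13%. Plugging in a higher performance gain (for example 0.30 for the 4K numbers mentioned elsewhere in the thread) flips the per-dollar result positive, which is why the choice of resolution matters so much to this argument.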

| System Name | Desktop |

|---|---|

| Processor | AMD Ryzen 9950X |

| Motherboard | MSI X670E MEG ACE |

| Cooling | Corsair XC7 Block / Corsair XG7 Block EK 360PE Radiator EK 120XE Radiator 8x EK Vadar Furious Fans |

| Memory | 64GB TeamGroup T-Create Expert DDR5-6000 |

| Video Card(s) | MSI RTX 4090 Gaming X Trio |

| Storage | 1TB WD Black SN850 / 4TB Inland Premium / 8TB WD Black HDD |

| Display(s) | Alienware AW3821DW / ASUS TUF VG279QM |

| Case | Lian-Li Dynamic 011 XL ROG |

| Audio Device(s) | Razer Nommo Pro Speakers / Razer Kraken Pro V4 |

| Power Supply | EVGA P2 1200W Platinum |

| Mouse | Razer Viper |

| Keyboard | Razer Huntsman Elite |

MSRP

Where can you find a 4090 for $1600?

What's your point??? You haven't shown where you can get a 5090 for MSRP. It's because you can't.

| System Name | Godrillamobile |

|---|---|

| Processor | 9800 x 3d @5.425 ghz |

| Motherboard | MSI x870e Tomahawk |

| Cooling | Lianli GA Trinity 2 x360 |

| Memory | Gskill 32 gig ddr5 (cl 28 oc from cl30 ) 6ghz |

| Video Card(s) | 5090 PNY stock 4 now |

| Storage | 990 pro 2 tera as prime and 970 evo plus 2 tera as secondary |

| Display(s) | 5 year cx 48 inches |

| Case | Motech |

| Audio Device(s) | SteelSeries 7.1 wired headset with dac |

| Power Supply | seasonic vertex 1000 atx 3.0 psu with single 12 volt dedicated gpu wire cable |

| Mouse | g502x wired |

| Keyboard | corsair rgb full |

| Software | wind 11 64 bit |

| Processor | 14700KF/12100 |

|---|---|

| Motherboard | Gigabyte B760 Aorus Elite Ax DDR5 |

| Cooling | ARCTIC Liquid Freezer II 240 + P12 Max Fans |

| Memory | 32GB Kingston Fury Beast DDR5 |

| Video Card(s) | Asus Tuf 4090 24GB |

| Storage | 4TB sn850x, 2TB sn850x, 2TB p5 plus, 2TB sn580, 2TB P400L, 4TB MX500 * 2 + dvd burner. |

| Display(s) | Dell 23.5" 1440P IPS panel |

| Case | Lian Li LANCOOL II MESH Performance Mid-Tower |

| Audio Device(s) | Logitech Z623 |

| Power Supply | Gigabyte ud850gm pg5 |

| Keyboard | msi gk30 |

> What’s yours? Mine was that, theoretically, the price of a new 5090 should be no more than 25% over what a new 4090 is, and that’s give or take the advantage you get in demanding games. For LLMs this looks much better for the 5090 due to the bandwidth increase. IMHO overclocking potential of the 5000 series is also better. The 4090 is a great card but the 5090 is better in every way except for its price.
>
> You can’t get a 4090 for MSRP at the moment. You can get a 5090 for 20% over MSRP but you can get a pre-built system with decent components (9800X3D based) for not much more. If you work out the cost of the GPU then it’s effectively MSRP. This is in Australia and seemingly every country is different. Supposedly they have 5090s below MSRP in Scandinavia.

I think you could make an argument that the 4090 is best for backwards compatibility reasons due to the dropping of 32-bit PhysX. I know it's not much, but it's a cool feature I wouldn't want to lose, especially for those old games where the source code may not be around anymore, or even the studio. The 5090 is also pretty dangerously close on the safety margin of its connector. And I mean, even the 4090 was; I think decreasing that margin even more was a mistake. Something like power delivery should be easy to get right for people who design silicon as complicated as Nvidia's GPUs.

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |

And yeah, the 5090 MSRP isn't bad relative to the 4090, but you could at least at one point buy the 4090 at MSRP. I did. I thought I was getting ripped off at the time and that I'd regret it when better, cheaper cards came out, but now I realize I was lucky, as the price ended up going up and staying up. And I know it's not like this in the US, or maybe it is now with the tariffs, idk, but I've never even seen an Nvidia Founders Edition card with my own two eyes, ever. That's how rare they are here. If you want a cheap(er) 5090 in my country, you have to buy one of the models with bad reviews, and usually there's a reason for a cheap price and bad reviews. The decent cards cost double what my 4090 cost when I bought it at MSRP. Though I admit, I got it at a good time.

A lot depends on where you live. AMD prices are even worse here, at least at the times I've been shopping.

| System Name | The de-ploughminator Mk-III |

|---|---|

| Processor | 9800X3D |

| Motherboard | Gigabyte X870E Aorus Master |

| Cooling | DeepCool AK620 |

| Memory | 2x32GB G.SKill 6400MT Cas32 |

| Video Card(s) | Asus Astral 5090 LC OC |

| Storage | 4TB Samsung 990 Pro |

| Display(s) | 48" LG OLED C4 |

| Case | Corsair 5000D Air |

| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |

| Power Supply | Corsair HX1200 |

| Mouse | Razor Death Adder v3 |

| Keyboard | Razor Huntsman V3 Pro TKL |

| Software | win11 |

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |

I put my Asus TUF 4090 back in the box and that's it; maybe in 20 years I can sell it as a collector's item.

| Processor | Ryzen 9800X3D |

|---|---|

| Motherboard | ASRock X670E Taichi |

| Cooling | Noctua NH-D15 Chromax |

| Memory | 64GB DDR5 6000 CL26 |

| Video Card(s) | MSI RTX 4090 Trio |

| Storage | P5800X 1.6TB 4x 15.36TB Micron 9300 Pro 4x WD Black 8TB M.2 |

| Display(s) | Acer Predator XB3 27" 240 Hz |

| Case | Thermaltake Core X9 |

| Audio Device(s) | JDS Element IV, DCA Aeon II |

| Power Supply | Seasonic Prime Titanium 850w |

| Mouse | PMM P-305 |

| Keyboard | Wooting HE60 |

| VR HMD | Valve Index |

| Software | Win 10 |


| Processor | Ryzen 7 9800X3D |

|---|---|

| Motherboard | MSI MAG X870 Tomahawk WIFI |

| Cooling | Custom watercooling loop with Watercool MO-RA3 420 |

| Memory | 96GB DDR5-6000 |

| Video Card(s) | Zotac RTX 4090 AIRO |

| Storage | Intel Optane DC P4800X 1.5TB + Micron 7400 PRO 3.84TB + KIOXIA CD6-R 7.68TB + Micron 9200 ECO 11TB |

| Display(s) | Dell UltraSharp U4025QW |

| Case | Fractal Design Vector RS |

| Audio Device(s) | Canton CT 800, beyerdynamic DT 990 Pro, Audio Technica AT3035 |

| Power Supply | Cooler Master X Silent Edge Platinum 1100 |

| Mouse | pwnage Stormbreaker |

| Keyboard | Wooting 80HE (zinc case version) |

> Nonsense, my number is the 1440p average that was pulled right from TPU's review:

Go get a better monitor -_-

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

> I remember thinking paying 1699 was stupid for a gpu, next generation is gonna be so much better..... Sucks to be wrong. I only did it because it was the last time I would be able to buy a stupidly priced gpu without factoring in a toddler lol, and I knew I would be retiring around the time the next generation came out.

I felt like a sucker paying 1900 for the 3090. For the 4090, on the other hand, I actually felt like I'm stealing from Jensen (based on the pricing of the other gpus on the market, both Nvidia's and the competition's).

> Nonsense, my number is the 1440p average that was pulled right from TPU's review:
>
> View attachment 405802

That's 20%, and most likely that's gpu bound. I've tested the cards, the difference is 30+ when cpu isn't slowing em down.

| System Name | Phenomenal1 |

|---|---|

| Processor | Ryzen 7 5800x3d |

| Motherboard | MSI X570 Gaming Plus |

| Cooling | Noctua NH-D15s with added NF-A12x25 fan on front |

| Memory | Predator Vesta 32GB (2 x 16GB) DDR4 3600 BL.9BWWR.300 B-Die @3733 CL14 |

| Video Card(s) | PNY XLR8 RTX 4090 VERTO EPIC-X Triple Fan |

| Storage | Boot SSD: SATA 500GB - 1tb + 4tb pcie3 nvme / Spinning Drives: 2tb + 6 tb |

| Display(s) | MSI MAG 271QPX E2 240hz oled and Gigabyte M27Q 170hz ips |

| Case | Fractal Torrent - Black |

| Audio Device(s) | Realtek ALC1220P |

| Power Supply | Super Flower Leadex VII XG 1000W |

| Mouse | Redragon Griffin |

| Keyboard | Razer Huntsman V2 Optical |

| Software | Windows 11 that I forced to stay at 23H2 with InControl |

| Benchmark Scores | Time Spy - 28594 https://www.3dmark.com/spy/51010148 |

> The 4090 is a great card but the 5090 is better in every way except for its price.

The 4090 doesn't crash and has PhysX.

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |


> Hardware Unboxed's 1440p results are even more dismal, with there being a 12% difference between the 4090 and 5090:

Sorry but no sane person would use 1440p to compare cards at that level. Who cares whether you get 120 or 140 fps unless it’s competitive gaming, and I’m sure even cheaper cards manage to get good fps at Counter-Strike.

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |

Sorry but no sane person would use 1440p to compare cards at that level. Who cares whether you get 120 or 140 fps unless it’s competitive gaming, and I’m sure even cheaper cards manage to get good fps at Counter-Strike.

I don’t know how we got here… The only point I was trying to make was that MSRP is up 25%, memory size is up 33%, memory bandwidth is up 80%, and those two significantly benefit AI usage. Gaming performance at 4k is up 20-30% and that’s always nice to have.
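As a rough check on those percentages, here is a small sketch that recomputes them from launch specifications. The spec values below are not from this thread; they are the commonly published US launch figures for the two cards and should be treated as assumptions.

```python
# Assumed launch specs as (RTX 4090, RTX 5090); not taken from the thread.
SPECS = {
    "MSRP (USD)": (1599, 1999),
    "Memory (GB)": (24, 32),
    "Memory bandwidth (GB/s)": (1008, 1792),
}


def percent_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new - old) / old * 100


increases = {name: percent_increase(old, new) for name, (old, new) in SPECS.items()}

for name, pct in increases.items():
    print(f"{name}: +{pct:.1f}%")
```

Under these assumed specs the MSRP and memory figures match the post (about 25% and 33%), while bandwidth comes out a little under 80%, at roughly 78%.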

> I don't think it's as bad in Europe and I'm not familiar with other territories like Australia etc.

MSRP for the 4090 was over $3k AU. We get shafted here ALL the time. I paid $3.7k for my Strix because that’s all I could get on the morning of release. The 4090 was then mostly available only for over MSRP for the first 6 months. NOT much different from the current situation. The MSRP for the 5090 was over $4k here, which is $2000 * exchange rate plus GST (our sales tax) plus another 20% of rip-me-off surcharge. What can I say, these things are definitely luxury items and luxury items have strange pricing.
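The "$2000 * exchange rate plus GST plus another 20%" breakdown can be sketched as follows. The exchange rate of 1.60 AUD per USD is purely a placeholder assumption (the post does not give one), GST is Australia's 10% rate, and stacking the three factors multiplicatively is one possible reading of the post.

```python
def local_price(usd_msrp: float, exchange_rate: float,
                sales_tax: float, surcharge: float) -> float:
    """Convert a USD MSRP to a local shelf price.

    Applies the exchange rate, then sales tax, then a retailer/importer
    surcharge, each as a multiplicative factor (an assumption).
    """
    return usd_msrp * exchange_rate * (1 + sales_tax) * (1 + surcharge)


price_aud = local_price(
    usd_msrp=2000,
    exchange_rate=1.60,  # hypothetical AUD per USD, not from the thread
    sales_tax=0.10,      # Australian GST
    surcharge=0.20,      # the "rip-me-off surcharge" from the post
)

print(f"Estimated local price: ${price_aud:.0f} AUD")
```

With the placeholder rate this lands a bit above $4,200 AUD, in line with the "over $4k" figure in the post; a different exchange rate shifts the result proportionally.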

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |


| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

> I agree, closer to 35% when not gpu limited in my very unscientific testing. Or 25% when both are limited to 350w/400w, although that could just be the 4 games I tried, and my +/- is probably 5% because I only do 4 runs and throw out the fastest and slowest run.
>
> 4K is really the only resolution a 1000+ gpu should be tested at; otherwise even the fastest cpus will limit them in some games at 1440p.
>
> W1z got 32% at 4k, I believe. I think that is probably about what one should expect.
>
> View attachment 405808
>
> Honestly, I only felt that way till I got it home, and in some scenarios it was almost double my 3080ti, 70-90% in heavy RT at 4k, and I was like damn, and that was without BSgen.

I've measured a ~22% difference with both locked to 400w. But yeah, your 25% seems on point.

> Sorry but no sane person would use 1440p to compare cards at that level. Who cares whether you get 120 or 140 fps unless it’s competitive gaming, and I’m sure even cheaper cards manage to get good fps at Counter-Strike.
>
> I don’t know how we got here… The only point I was trying to make was that MSRP is up 25%, memory size is up 33%, memory bandwidth is up 80%, and those two significantly benefit AI usage. Gaming performance at 4k is up 20-30% and that’s always nice to have.

The big downside - for me - and the reason I didn't keep the 5090 is the power draw. Limited to the power I'm using on the 4090, the performance difference just isn't there.

| System Name | His & Hers |

|---|---|

| Processor | R7 5800X/ R9 7950X3D Stock |

| Motherboard | X670E Aorus Pro X/ROG Crosshair VIII Hero |

| Cooling | Corsair h150 elite/ Corsair h115i Platinum |

| Memory | Trident Z5 Neo 6000/ 32 GB 3200 CL14 @3800 CL16 Team T Force Nighthawk |

| Video Card(s) | Evga FTW 3 Ultra 3080ti/ Gigabyte Gaming OC 4090 |

| Storage | lots of SSD. |

| Display(s) | A whole bunch OLED, VA, IPS..... |

| Case | 011 Dynamic XL/ Phanteks Evolv X |

| Audio Device(s) | Arctis Pro + gaming Dac/ Corsair sp 2500/ Logitech G560/Samsung Q990B |

| Power Supply | Seasonic Ultra Prime Titanium 1000w/850w |

| Mouse | Logitech G502 Lightspeed/ Logitech G Pro Hero. |

| Keyboard | Logitech - G915 LIGHTSPEED / Logitech G Pro |

I've seen up to 110% over a 3090. RDR2 completely maxed took me from 55-90 depending on location to 85-90 minimum and up to 160+ where I hit a cpu bottleneck. The problem with the 3090 was, well, the 3080, 3080s and 3080ti were very close in performance at half the price (ignoring the mining shenanigans).
