
NVIDIA GeForce RTX 5050 8 GB

- Thread starter W1zzard

- Start date

- Joined

- Apr 1, 2023

- Messages

- 71 (0.08/day)

| Processor | ryzen 7 5700x |

|---|---|

| Motherboard | ASUS TUF GAMING B550-PLUS WIFI II |

| Cooling | DeepCool AK400 |

| Memory | 2x16gb Kingston FURY renegade black 3600MHz cl16 |

| Video Card(s) | Asus dual RTX 2070 SUPER (EVO OC) |

| Storage | Crucial P3 Plus 1TB NVMe |

| Display(s) | MSI Optix G241 |

| Case | Fractal Design Focus 2 Black Solid |

| Audio Device(s) | Creative Sound BlasterX AE-5 Plus |

| Power Supply | EVGA 600 GD |

| Software | windows 10 PRO |

"I am not sure why we are talking about the RTX 3080. The 5050 is an entry-level card; part of its market will be people with older systems upgrading from 4 GB and 6 GB cards. Limited power supply capacity will probably be a factor. According to TPU, maximum power consumption of the 5050 is 131 W and the 3080 is 335 W."

Because brand new, at its current price and covered by warranty at a respectable retailer, it is the best price/performance gaming card in my region...

And power consumption is not much of a factor (electricity doesn't cost much here).

If you have a good power supply of 700 W or above, there is just nothing better at the moment.

The RTX 5060 and RTX 5060 Ti 8 GB are looking like bad jokes right now.

And paying a 100-120 bucks premium over the 80% slower RTX 5050 is certainly worth it, even more so when you realize this card also needs an external power connector.

The whole point of this card should have been a slot-powered, small and efficient video card for 1080p gaming on a budget, and it failed to do even that...


- Joined

- Mar 15, 2024

- Messages

- 11 (0.02/day)

"Upscaling lowers the VRAM usage, because the game is rendered at a lower resolution. Only FG increases VRAM usage.

"The 3060 12 GB is kind of similar to the B580's situation. You do have more VRAM, which will let you run bigger workloads, but your GPU horsepower is so limited that you will never get decent FPS in any of them, so you'll never run them in real life. This means you'll use upscaling for those resolutions, which brings the VRAM usage back down.

"Unlike the B580, the 3060 has MUCH less raw GPU perf, which amplifies the problem. For DLSS Transformer upscaling specifically, the performance hit is much smaller on Blackwell than on older architectures, so this will cost you even more FPS and the extra VRAM won't help you one bit. But the 3060 is $220; I'd still pick the 5050 for $250 (if I had to choose between those two cards)."

Thank you for the response! I guess I was thinking of higher resolution plus upscaling, but when rendering at 720p and upscaling to 1080p, the intermediate frames are small enough to offset the cost of the upscaling model. Lowering texture quality is probably still much more significant than upscaling for saving VRAM. In Germany the 3060 12 GB and the 5050 cost the same, but the 5060 is only 10% more expensive, making the 5050 just terrible value in-house.
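To put rough numbers on the render-resolution point, here is a minimal sketch comparing the memory taken by resolution-dependent render targets at native 1080p versus a 720p internal resolution. The bytes-per-pixel figure and the number of buffers are illustrative assumptions, not measurements from any game or from DLSS itself.

```python
# Rough footprint of resolution-dependent render targets.
BYTES_PER_PIXEL = 8   # assumption: one RGBA16F target per buffer
TARGET_COUNT = 6      # assumption: hypothetical number of full-size buffers


def render_target_mib(width, height,
                      bytes_per_pixel=BYTES_PER_PIXEL,
                      targets=TARGET_COUNT):
    """Return the combined size of all render targets in MiB."""
    return width * height * bytes_per_pixel * targets / 2**20


native_1080p = render_target_mib(1920, 1080)
internal_720p = render_target_mib(1280, 720)

print(f"1080p native:  {native_1080p:.1f} MiB")
print(f"720p internal: {internal_720p:.1f} MiB")
print(f"ratio: {internal_720p / native_1080p:.3f}")
```

Whatever the real buffer count is, the 720p figure stays at 4/9 of the 1080p one, so the upscaler only has to cost less memory than that saving for total usage to go down.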

- Joined

- Jul 9, 2015

- Messages

- 3,575 (0.98/day)

| System Name | M3401 notebook |

|---|---|

| Processor | 5600H |

| Motherboard | NA |

| Memory | 16GB |

| Video Card(s) | 3050 |

| Storage | 500GB SSD |

| Display(s) | 14" OLED screen of the laptop |

| Software | Windows 10 |

| Benchmark Scores | 3050 scores good 15-20% lower than average, despite ASUS's claims that it has uber cooling. |

"vs 9060XT 8GB" comparison would make sense, but was surprisingly missing.

- Joined

- Mar 15, 2024

- Messages

- 11 (0.02/day)

Not really for most markets, as the 9060 XT 8 GB is priced 20% higher, both at MSRP and in real life here in Germany. Unless AMD releases a $250 MSRP 9060 or a $200 MSRP 9050, the only sort of competition for the 5050 in that price range is the 7600/XT. I for one would love a slot-powered 9050 for under $200, even.

- Joined

- Feb 14, 2012

- Messages

- 2,388 (0.49/day)

| System Name | msdos |

|---|---|

| Processor | 8086 |

| Motherboard | mainboard |

| Cooling | passive |

| Memory | 640KB + 384KB extended |

| Video Card(s) | EGA |

| Storage | 5.25" |

| Display(s) | 80x25 |

| Case | plastic |

| Audio Device(s) | modchip |

| Power Supply | 45 watts |

| Mouse | serial |

| Keyboard | yes |

| Software | disk commander |

| Benchmark Scores | still running |

What I see: the 4060 8GB uses slightly less power, and is slightly faster.

- Joined

- Jan 20, 2019

- Messages

- 1,806 (0.76/day)

- Location

- London, UK

| System Name | ❶ Oooh (2024) ❷ Aaaah (2021) ❸ Ahemm (2017) |

|---|---|

| Processor | ❶ 5800X3D ❷ i7-9700K ❸ i7-7700K |

| Motherboard | ❶ X570-F ❷ Z390-E ❸ Z270-E |

| Cooling | ❶ ALFIII 360 ❷ X62 + X72 (GPU mod) ❸ X62 |

| Memory | ❶ 32-3600/16 ❷ 32-3200/16 ❸ 16-3200/16 |

| Video Card(s) | ❶ 3080 X Trio ❷ 2080TI (AIOmod) ❸ 1080TI |

| Storage | ❶ NVME/SATA-SSD/HDD ❷ <SAME ❸ <SAME |

| Display(s) | ❶ 1440/165/IPS ❷ 1440+4KTV ❸ 1080/144/IPS |

| Case | ❶ BQ Silent 601 ❷ Cors 465X ❸ S340-Elite |

| Audio Device(s) | ❶ HyperX C2 ❷ HyperX C2 ❸ Logi G432 |

| Power Supply | ❶ HX1200 Plat ❷ RM750X ❸ EVGA 650W G2 |

| Mouse | ❶ Logi G Pro ❷ Razer Bas V3 ❸ Razer Bas V3 |

| Keyboard | ❶ Logi G915 TKL ❷ Anne P2 ❸ Logi G610 |

| Software | ❶ Win 11 ❷ 10 ❸ 10 |

| Benchmark Scores | I have wrestled bandwidths, Tussled with voltages, Handcuffed Overclocks, Thrown Gigahertz in Jail |

From a business standpoint, AMD's RX 7600 still clings to that $275+ price range, and anything in this sort of performance territory (or class) is stuck with modest performance gains. With the 5050 barely pulling ahead in benchmarks, Nvidia isn't one to leave money on the table, and so the 5050 gets parked at $250+. If these exploits rake in more cash and people dive in dropping cash, I can't see why the duopoly wouldn't chase those margins. They're hungry, and it's only too apparent nowadays that the monopoly will prioritise margins and skimpery well above consumer satisfaction, reasonable value or ethics in general.

From a consumer perspective, the issue isn't just inflated pricing across all tiers, it's the widening performance gap between the top and bottom tiers. The fair value scaling across tiers is lost at sea, and poor ole suppressed budget gamers are left with premium prices for hardware that barely moves the needle, especially when modern games hit like a heavy sledgehammer, crushing weaker hardware without remorse. Let's face it, hardware manufacturers have grown too fat and comfortable exploiting gamers, and the vast majority of buyers don't even know they're being shafted, a problem which will see no resolution anytime soon.

In short, the RTX 5050 is realistically a $150-$200 card. The $250 mark is where the 5060 8GB should have landed, and that's bloody generous, considering it lacks 12GB, which should have been the standard for any 60-tier GPU by now.


Realistically, NVIDIA didn't create this die for gamers. They could sell it to workstation users for like $600 a card.

The 2816-CUDA-core RTX 2000 Ada is currently like $800 in a 128-bit stacked GDDR6 config (16 GB). A weaker SM variant of the 4060 more or less, just with more VRAM.

I still don't see Nvidia selling this under $200. They would be better off scrapping it. Die size is too close to GB206 (149 mm² vs 181 mm²), and modern PCIe 5.0 layer count requirements are contributing to inflated pricing. Cards have to hit a specific SNR, even with x8.
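As a rough illustration of how close those two die sizes are in silicon terms, the sketch below uses a common textbook approximation for gross dies per wafer. The 300 mm wafer diameter is an assumption, and the estimate ignores yield, scribe lines and edge exclusion, so treat it as a ballpark rather than NVIDIA's or the foundry's actual numbers.

```python
import math

WAFER_DIAMETER_MM = 300.0  # assumption: standard 300 mm wafer


def gross_dies_per_wafer(die_area_mm2, wafer_diameter_mm=WAFER_DIAMETER_MM):
    """Textbook estimate: wafer area / die area, minus an edge-loss term."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return wafer_area / die_area_mm2 - edge_loss


gb207 = gross_dies_per_wafer(149)  # die size quoted in the post
gb206 = gross_dies_per_wafer(181)  # die size quoted in the post

print(f"GB207: ~{gb207:.0f} gross dies per wafer")
print(f"GB206: ~{gb206:.0f} gross dies per wafer")
print(f"GB207 advantage: {gb207 / gb206 - 1:.0%}")
```

Under these assumptions the smaller die gives somewhere between a fifth and a quarter more candidates per wafer, which is a real but modest saving next to the board-level costs the post mentions.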

Unironically, the 5060 gives the most SM/$ this gen, throughout the entire Blackwell line.

Was looking at 8 GB 9060 XTs earlier. Seems AMD won't let Newegg sell them under $270 with gift card promotions. I guess this is the price floor. Full-die 32 CU card at 199 mm².

Again, I don't view it as these companies exploiting, but rather trying to keep established margins that go back decades. It's just perceived this way since raster improvements more or less tanked since GTX 10. Both companies have been tacking on SMs/CUs to compensate/scale RT.

Edit:

Not that there isn't any generational improvement per SM/CU, but it's been rather minuscule. The 50 series seems more significant than others since they were able to match a 56 SM 4070S with a 48 SM 5070. Upsell being DLSS 4 + MFG.


- Joined

- Jan 20, 2019

- Messages

- 1,806 (0.76/day)

- Location

- London, UK



Nah, the pricing is off for what the 5050 delivers.

Nvidia could sell that die for workstation use, but if it's showing up as a low-end gaming card, it should be priced like one. Die size/PCIe layer costs are worthy of note, but they don't fully explain the weak performance uplift and inflated prices, especially when both Nvidia and AMD are still posting strong profits. On paper SM/dollar looks good (skimping elsewhere), but real-world gains aren't that impressive, the performance tier gaps are widening, and it's just not enough to take the position that DLSS and frame gen compensate. These features push people towards newer hardware and make older cards feel worse. That's not real progress... just clever upselling with strategically deployed performance segmenting and skimping, which stinks.

Calling it "maintaining margins" is just a nice way of saying prices are staying high while value drops. Budget cards used to be solid, but now they're underpowered and overpriced. It's not just tech getting expensive... it's a strategy built around prioritizing high-margin supply, especially outside of gaming, and it doesn't do gamers any favours. You're essentially agreeing: 'they could sell it as a workstation card for much more', which is the reason why they get away with high prices for bottom-of-the-barrel, barely-a-foot-through-the-door gaming cards. Come on, this is textbook monopoly behaviour. You seriously think they're not exploiting the market? Gaming's still pulling in healthy profits, and it's dwarfed by AI/data profits shooting through the roof.

"Nah, the pricing is off for what the 5050 delivers."

Yet, if it were priced at $220, it would unironically hold the highest frames per dollar in the entire Blackwell line at 1080p. It's off, but not as significantly as you think.

AMD and Intel unfortunately wouldn't be beating it either. :\

Yes, and I actually agree that there's stagnation. I just factor in external cost. It's a bit higher in 2025.

I would love to see a 12GB 5060 around $300, but I doubt NVIDIA will follow through. They have leverage here since AMD can't use GDDR7 on RX9000.


Budget cards used to be cheaper to manufacture, and AIBs used to have higher margins. I don't think gaming is too much of a concern for NVIDIA.

Like I said before, they would probably scrap the 5050 before pricing it under $200, at least officially.


"The performance increase over the GeForce RTX 3050 is a respectable 56%"

This is a great improvement over a single generation. I do consider Lovelace and Blackwell as one big generation due to using the same node, and advances in such periods were always iffy like that. In the past, GPUs didn't become faster when Nvidia was stuck on the same node. The node itself became a lot cheaper, and Nvidia would simply start offering a lot more die for a cheaper price. Moore's Law is dead and nodes are not getting cheaper, hence we are stuck with only architectural improvements, which often aren't much.

This is one of the better Blackwell GPUs. I'm disappointed in people and how much they trash it for no reason. It works very well in modern titles. It is cheap. It is just not a product for you. All that negativity from supposed tech enthusiasts is grating. These days they are more like tech haters than enthusiasts. If they do not change their attitudes, in the future there will be more and more slow years like these. Gone are the times of massive annual improvements. The party is over. Technology has matured. Now you will have to learn to appreciate more nuanced and sane improvements in technology.


I think it's a twofold issue. People still expect massive generational jumps with linear pricing models from 10 years ago.

That party ended around the RTX 20 generation, where a card like the 2070 only gained a 20-30% jump vs the 1070 on a significantly bigger die (314 mm² > 455 mm²). The previous 970 > 1070 generation was literally 50-60% gains (398 mm² > 314 mm²; yes, they gained 50% performance while shrinking the die, with 13 > 15 SMs).
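The contrast between those two generations is easier to see as performance per unit of die area. This is a back-of-the-envelope sketch using the die sizes quoted above and the midpoints of the quoted gain ranges (an assumption on my part); the two generations sit on different nodes, so die area is only a loose stand-in for cost.

```python
def perf_density_change(perf_gain, old_area_mm2, new_area_mm2):
    """Return the new/old ratio of performance per mm² of die."""
    perf_ratio = 1 + perf_gain
    area_ratio = new_area_mm2 / old_area_mm2
    return perf_ratio / area_ratio


# 970 -> 1070: midpoint of the quoted 50-60% gain, 398 mm² -> 314 mm²
pascal = perf_density_change(0.55, 398, 314)
# 1070 -> 2070: midpoint of the quoted 20-30% gain, 314 mm² -> 455 mm²
turing = perf_density_change(0.25, 314, 455)

print(f"970 -> 1070:  {pascal:.2f}x performance per mm²")
print(f"1070 -> 2070: {turing:.2f}x performance per mm²")
```

With these inputs the first jump roughly doubles performance per mm², while the second one actually goes backwards, which is the stagnation the post is describing.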

In addition to that, NVIDIA started entering the market on their own, with FE models being priced significantly higher during GTX 10. IIRC, it was $380 "MSRP" vs $450 for the NVIDIA-built 1070 FE model. A lot of AIBs ended up matching FE pricing with premium coolers. This extended to RTX 20, where the $500 MSRP 2070 ended up at $600 for something like an ASUS STRIX.

This is also part of the reason NVIDIA ended up creating the TU116, for example: a Tensor-less 20 series on a smaller 284 mm² chip. It would trade blows with the previous 1070 depending on game and engine. CUDA was halved on 20 series, but it had more SM units.

The 30 series directly increased SM count further and went back to a 2x CUDA config similar to 10 series. This would have been an amazing generation (per MSRP) if mining hadn't completely destroyed the gaming market. It was so bad for gamers that NVIDIA ended up canceling the 16 GB 3070 and 20 GB+ 3080 models. People seem to forget about this.

I actually agree. 40/50 series are just extensions of each other, but they actually made decent raster jumps here regardless. Like I mentioned above, the 5070 at 48 SMs is matching the previous 4070S at 56 SMs.

Either way, the last small-die 50-series card prior to the 3050 was what? The 1050 Ti?

Let's look at it:

- 132 mm², Samsung 14 nm

- 6 total SM units

- $139 MSRP

- 75 W

- Entry-level high/low-side MOSFETs and PCIe 3.0 (fewer layers required)

I hate to state the obvious, but it's a smaller die on a MUCH cheaper node, has close to 1/4 the total SMs, and consumes almost half the power, which directly relates to the modern SPS config, and it is likely on a PCB with a few fewer layers (cheaper). It doesn't have to hit the same SNR levels.

Adjusted for inflation, a 1050 Ti is $188 USD. I legit want people to explain to me how NVIDIA would want to price the 5050 under $200. The margins of the 10 series had to be absolutely insane from that perspective.
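For anyone who wants to check the arithmetic, here is a small sketch built only from figures quoted in this thread: the 1050 Ti's $139 MSRP, its $188 inflation-adjusted price and 6 SMs, plus the 5050's $250 price and 20 SMs. The function names are made up for illustration, and price per SM is only a crude yardstick.

```python
def implied_inflation_factor(original_price, adjusted_price):
    """Cumulative inflation factor implied by an adjusted price."""
    return adjusted_price / original_price


def price_per_sm(price_usd, sm_count):
    """Dollars paid per streaming multiprocessor."""
    return price_usd / sm_count


factor = implied_inflation_factor(139, 188)   # 1050 Ti, figures from the post
gtx_1050ti = price_per_sm(188, 6)             # inflation-adjusted price, 6 SMs
rtx_5050 = price_per_sm(250, 20)              # $250 and 20 SMs as quoted in the thread

print(f"implied inflation factor: {factor:.2f}")
print(f"1050 Ti: ${gtx_1050ti:.2f} per SM (adjusted)")
print(f"5050:    ${rtx_5050:.2f} per SM")
```

On these numbers the 5050 costs well under half as much per SM as the 1050 Ti did in today's money, even though its sticker price is higher.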

3050 > 5050 is die-for-die equal in regards to SM count, TDP, VRAM, price etc. (x8 4.0 vs x8 5.0).

The 3050's die is bigger relative to the 5050's, but it's on the cheaper Samsung 8 nm node with only 20/30 units enabled. Order quantity was likely higher since GA106 was also used in the 3060. GB207 is 20/20 full die. Not sure about the logistics on that, but they usually charge more for non-defective dies. Adjusted for inflation, the 3050 is $275.

Either way. Is there stagnation? Yes. No one is defending NVIDIA in that area. I'll just argue on behalf of pricing. NVIDIA hasn't really changed their strategy. Most people are just naive.

The used market will adjust accordingly.


- Joined

- Jul 20, 2020

- Messages

- 1,339 (0.73/day)

| System Name | Gamey #2 / Office rescue |

|---|---|

| Processor | Ryzen 7 5800X3D / Core i5-7600 |

| Motherboard | Asrock B450M P4 / Dell Q270 |

| Cooling | IDCool SE-226-XT / Dell 65W |

| Memory | 32GB 3200 CL16 / 32GB 2400 CL17 |

| Video Card(s) | PNY 5070 / Pulse 6400 |

| Storage | 4TB Team MP34 / 1TB WD SN550 |

| Display(s) | LG 32GK650F 1440p 144Hz VA |

| Case | Corsair 4000Air / Opti 7050 SFF |

| Power Supply | EVGA 650 G3 / Dell 240W |

"Adjusted inflation on a 1050TI is $188 USD. I legit want people to explain to me how NVIDIA would want to price the 5050 under $200. The margins of 10 series had to be absolutely insane from that perspective."

Adding to this, the 3050 6 GB, which is the 70 W direct successor to the 1050 Ti/1650, has an MSRP of $180, right in line with inflation, and almost everyone complained about the price. People want inflation to magically evaporate and good 60-class GPUs to still be $250 like in 2017. Well, that $250 is now $380; buy yourself an 8 GB 5060 or 16 GB 9060 XT.


"GB207 is 20/20 full die"

I would be glad to see an RTX 5040 based on defective GB207 dies. There is a high chance that these dies will be gathered and repurposed. I have no doubt that tech YouTubers will be fuming at this GPU. I have no need for those GPUs myself, but I have noticed a need for them and a lack of any modern GPUs of this kind for long periods of time. My friend works with government PCs (buying, maintaining, writing them off) and they are truly crap. Later such PCs are sold off, and small businesses can make gaming PCs out of them for very low prices. And even their performance isn't bad. Even the RTX 3050 6 GB outperforms integrated graphics from Ryzen processors. I have seen them going for 400 euros as refurbished, and they are unbeatable value.

This is why I'm disappointed by all those YouTube reviewers. They are out of touch with their own audience. Just last year I was looking through refurbished laptops to get a replacement for a woman who just needed it for her small business. These PCs present the best value which you could get by buying 'new'. These things are often a lifeline to poorer families. I often hear of people buying the cheapest available stuff for their kids when study years start. These things are head and shoulders better than a cheap laptop. You actually have a GPU and upgradability.

- Joined

- Jul 20, 2020

- Messages

- 1,339 (0.73/day)


That's sandbagging the RTX 3050 6GB a bit, as it performs 60-80% better in raster than the integrated Radeon 780M and 150+% better when RT is baked in. And that's before adding the image quality advantage of DLSS Transformer Model vs FSR 2 and 3, which is IMO a big selling point at the lower end, where you need all the FPS help possible. All that before the flexibility to drop it into any old machine, unlike IG, and then drop in a faster one in 4 years' time.

Yeah, and tech YouTubers love the 780M while hating the RTX 3050 6 GB. Maybe it is part of the ever-growing rift between them and manufacturers. I wouldn't shed a tear if they didn't get courtesy GPUs for reviews anymore.

I had this video in mind. The FPS count is low, so it doesn't seem like much of a difference, but the percentage difference is huge.
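A quick sketch of why a small-looking FPS gap can still be a large relative gain at the low end. The two FPS values below are hypothetical, picked only so the result lands inside the 60-80% raster range mentioned earlier in the thread; they are not taken from the video.

```python
def percent_uplift(baseline_fps, faster_fps):
    """Relative gain of the faster result over the baseline, in percent."""
    return (faster_fps / baseline_fps - 1) * 100


igpu_fps = 20.0   # hypothetical integrated-graphics result
dgpu_fps = 34.0   # hypothetical RTX 3050 6 GB result

gap = dgpu_fps - igpu_fps
uplift = percent_uplift(igpu_fps, dgpu_fps)

print(f"absolute gap:    {gap:.0f} FPS")
print(f"relative uplift: {uplift:.0f}%")
```

The same 14 FPS gap on a 100 FPS baseline would only be a 14% uplift, which is why the percentage tells you more than the raw difference here.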

And the cheapest 780M laptops are going for around twice the price of this computer. This is a little off topic, but I just want to highlight why these low-end GPUs are important, and why having an even lower-spec RTX 5050 is very important for a niche audience. The RTX 3050 6 GB can be had from 180 euros. Such dirt-cheap GPUs make a massive difference in these office PCs, and they still somehow perform better than the much more expensive integrated graphics which everyone is so excited about. There was a huge gap in the market before the RTX 3050 6 GB came, and it was revolutionary. AMD doesn't serve this market; their offerings in that range are laughable. Having a new Blackwell replacement would be a big deal for this market segment.

So, not to get more off topic, that will be my last post about it.


"Adding to this, the 3050-6GB which is the 70W direct successor to the 1050 Ti/1650 has an MSRP of $180 right in line with inflation, and almost everyone complained about the price. People want inflation to magically evaporate and good 60-class GPUs to still be $250 like in 2017. Well that $250 is now $380, buy yourself an 8GB 5060 or 16GB 9060 XT."

Higher without kickbacks on the AMD side too... The 16 GB 9060 XT ASUS Prime is currently sitting at its original $440 price point (pre-incentive). The same card sold for $350 USD on release day.

You can get a dual-fan Sapphire for $360 USD off Newegg/Amazon right now, but it's technically "discounted" from $380+.

"This is why I'm disappointed by all those youtube reviewers. They are out of touch with their own audience. Just last year I was looking through refurbished laptops to get a replacement for a woman who just needed it for her small business. These PCs present the best value which you could get by buying 'new'. These things are often a lifeline to poorer families. I often hear people buying the cheapest available stuff for their kids when study years start. These things are head and shoulders better than a cheap laptop. You actually have a GPU and upgradability."

I see it from both angles. I think tech tube has no idea how much external cost is influencing the low end. They're just looking at price and performance relative to previous generations, ignoring everything else.

I.e., if you owned a $400 MSRP 3060 Ti, the current 5060/5060 Ti looks rather terrible and isn't exactly an upgrade. From the perspective of someone who doesn't already own a GPU (or is on legacy GTX), the 5060 ends up as a strong value, at least in terms of FPS/$ if buying new. It's just limited long term in regards to VRAM.

Going back to the 5050, I think it would be a solid $200-220 option and would incentivize a purchase over the B580 and/or the 9060 XT/5060 8 GB at $300. $250 is just pushing it a bit.
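The $200-220 suggestion can be sanity-checked with a simple performance-per-dollar comparison. This sketch assumes the 5060 costs $300 as stated above and is 29% faster at 1080p, the figure cited from the review later in this thread; real street prices and other resolutions would shift the result.

```python
def perf_per_dollar(relative_perf, price_usd):
    """Relative performance bought per dollar spent."""
    return relative_perf / price_usd


def break_even_price(base_perf, rival_perf, rival_price_usd):
    """Price at which the base card matches the rival's perf per dollar."""
    return rival_price_usd * base_perf / rival_perf


RTX_5050_PERF = 1.00   # baseline
RTX_5060_PERF = 1.29   # 29% faster at 1080p, per the review figure cited in the thread
RTX_5060_PRICE = 300   # price used in the post above

threshold = break_even_price(RTX_5050_PERF, RTX_5060_PERF, RTX_5060_PRICE)
print(f"5050 matches the 5060 on perf/$ at about ${threshold:.0f}")

for price in (220, 250):
    better = perf_per_dollar(RTX_5050_PERF, price) > perf_per_dollar(
        RTX_5060_PERF, RTX_5060_PRICE)
    print(f"5050 at ${price}: {'better' if better else 'worse'} perf/$ than the 5060")
```

Under these assumptions the break-even sits in the low $230s, so $220 would put the 5050 ahead on value while $250 leaves it behind the 5060.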


Nvidia would probably be more inclined to price it more aggressively if there will be any competition. Since there is no competition, we must evaluate it on its own versus 3050. Tech youtubers cannot see anything past their precious FPS/dollar data and doesn't understand that 50 class GPUs are in league of their own. They will sell like hotcakes, because everyone is looking the cheapest solution available. What happens when Timmy finishes high school? He needs a laptop for studies. He says that he wants to play video games too. Store clerk says that he needs a dedicated GPU card. Mommy can only afford the cheapest option. And laptop pricing is based on tiers. Cheapest RTX 5050M laptop will be considerably cheaper than cheapest RTX 5060M laptop. It happens same with desktops, but to a lower degree. A person has a certain budget and he picks computer to fit the budget rather than picking the best parts and then making up a budget. The fact that all those tech youtubers don't get how an average person behaves makes them pretty out of touch with your average gamer and customer.

There is a good reason why 4th and 5th places in the Steam hardware survey are taken by 50-class GPUs. There is also a good reason why the RTX 3060 just kept on selling. Have you seen their pricing recently? It is still widely available and still selling at 250 euros for the 8 GB version, and that has pushed the RTX 3060 to most popular desktop GPU, while the mobile RTX 4060 is first. There is a massive gap between the market and tech YouTubers. They will keep on fuming and making clickbaity videos about how Nvidia ruined gaming, and the show will keep on going.


- Joined

- Dec 12, 2012

- Messages

- 847 (0.18/day)

- Location

- Poland

| System Name | THU |

|---|---|

| Processor | Intel Core i5-13600KF |

| Motherboard | ASUS PRIME Z790-P D4 |

| Cooling | SilentiumPC Fortis 3 v2 + Arctic Cooling MX-2 |

| Memory | Crucial Ballistix 2x16 GB DDR4-3600 CL16 (dual rank) |

| Video Card(s) | MSI GeForce RTX 4070 Ventus 3X OC 12 GB GDDR6X (2505/21000 @ 0.91 V) |

| Storage | Lexar NM790 2 TB + Corsair MP510 960 GB + PNY XLR8 CS3030 500 GB + Toshiba E300 3 TB |

| Display(s) | LG OLED C8 55" + ASUS VP229Q |

| Case | Fractal Design Define R6 |

| Audio Device(s) | Yamaha RX-V4A + Monitor Audio Bronze 6 + Bronze FX / FiiO E10K-TC + Koss Porta Pro |

| Power Supply | Corsair RM650 |

| Mouse | Logitech M705 Marathon |

| Keyboard | Corsair K55 RGB PRO |

| Software | Windows 10 Home |

| Benchmark Scores | Benchmarks in 2025? |

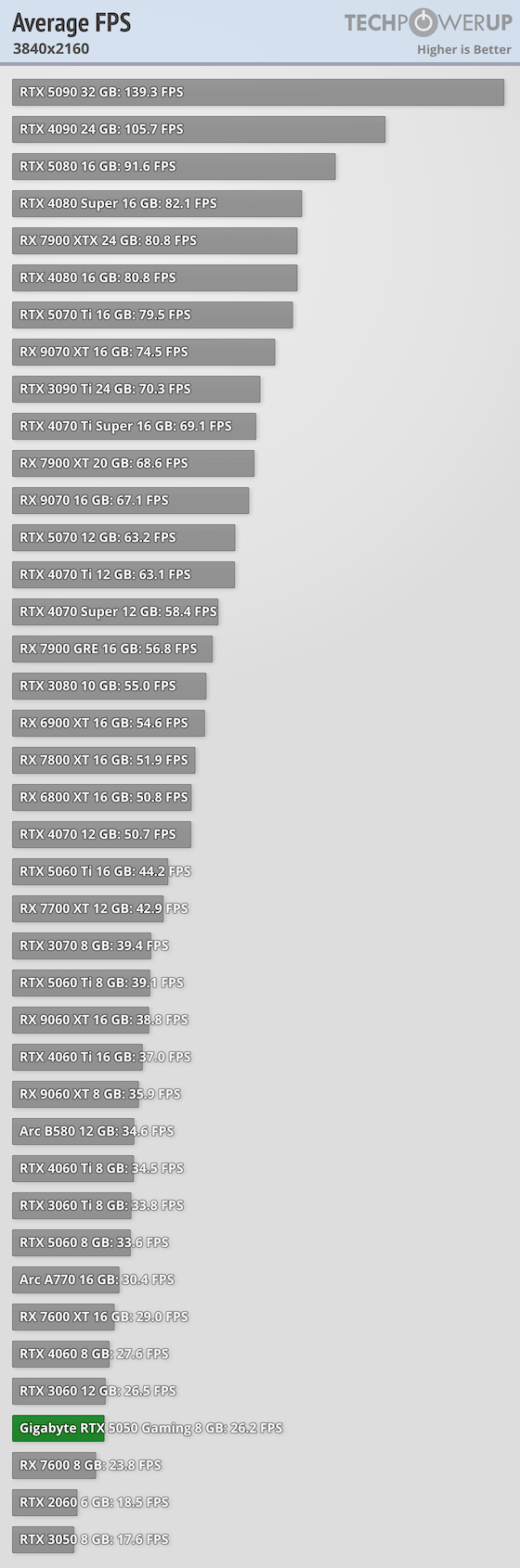

What results is the database based on? In this review, the 5060 is 29% faster in 1080p, but in the database it only says 8%.

- Joined

- May 29, 2017

- Messages

- 941 (0.32/day)

- Location

- Latvia

| Processor | AMD Ryzen™ 7 7700 |

|---|---|

| Motherboard | ASRock B650 PRO RS |

| Cooling | Thermalright Peerless Assassin 120 SE + Arctic P12 |

| Memory | XPG Lancer Blade 6000Mhz CL30 2x16GB |

| Video Card(s) | ASUS Prime Radeon™ RX 9070 XT OC Edition |

| Storage | Lexar NM790 2TB + Lexar NM790 2TB |

| Display(s) | HP X34 UltraWide IPS 165Hz |

| Case | Lian Li Lancool 207 + Arctic P12/14 |

| Audio Device(s) | Airpulse A100 + SW8 |

| Power Supply | Sharkoon Rebel P20 750W |

| Mouse | Cooler Master MM730 |

| Keyboard | Krux Atax PRO Gateron Yellow |

| Software | Windows 10 Pro |

What results is the database based on? In this review, the 5060 is 29% faster in 1080p, but in the database it only says 8%.

At 4K it's 28% faster and at 1080p it's 30% faster. Don't look at the database!


- Joined

- Jul 5, 2013

- Messages

- 31,936 (7.26/day)

What results is the database based on? In this review, the 5060 is 29% faster in 1080p, but in the database it only says 8%.

That graph averages all metrics together for an aggregate score. Did you not know that?


- Joined

- Dec 12, 2012

- Messages

- 847 (0.18/day)

- Location

- Poland

| System Name | THU |

|---|---|

| Processor | Intel Core i5-13600KF |

| Motherboard | ASUS PRIME Z790-P D4 |

| Cooling | SilentiumPC Fortis 3 v2 + Arctic Cooling MX-2 |

| Memory | Crucial Ballistix 2x16 GB DDR4-3600 CL16 (dual rank) |

| Video Card(s) | MSI GeForce RTX 4070 Ventus 3X OC 12 GB GDDR6X (2505/21000 @ 0.91 V) |

| Storage | Lexar NM790 2 TB + Corsair MP510 960 GB + PNY XLR8 CS3030 500 GB + Toshiba E300 3 TB |

| Display(s) | LG OLED C8 55" + ASUS VP229Q |

| Case | Fractal Design Define R6 |

| Audio Device(s) | Yamaha RX-V4A + Monitor Audio Bronze 6 + Bronze FX / FiiO E10K-TC + Koss Porta Pro |

| Power Supply | Corsair RM650 |

| Mouse | Logitech M705 Marathon |

| Keyboard | Corsair K55 RGB PRO |

| Software | Windows 10 Home |

| Benchmark Scores | Benchmarks in 2025? |

That graph averages all metrics together for an aggregate score. Did you not know that?

If I knew that, why would I ask? And if you know that, can you tell me which metrics? Where does the 8% come from exactly?

- Joined

- Jul 5, 2013

- Messages

- 31,936 (7.26/day)

Where does the 8% come from exactly?

It's the difference between the performance of one GPU vs another. How are you not understanding that?

- Joined

- Jul 20, 2020

- Messages

- 1,339 (0.73/day)

| System Name | Gamey #2 / Office rescue |

|---|---|

| Processor | Ryzen 7 5800X3D / Core i5-7600 |

| Motherboard | Asrock B450M P4 / Dell Q270 |

| Cooling | IDCool SE-226-XT / Dell 65W |

| Memory | 32GB 3200 CL16 / 32GB 2400 CL17 |

| Video Card(s) | PNY 5070 / Pulse 6400 |

| Storage | 4TB Team MP34 / 1TB WD SN550 |

| Display(s) | LG 32GK650F 1440p 144Hz VA |

| Case | Corsair 4000Air / Opti 7050 SFF |

| Power Supply | EVGA 650 G3 / Dell 240W |

If I knew that, why would I ask? And if you know that, can you tell me which metrics? Where does the 8% come from exactly?

Yeah the database is inaccurate and has been for a long time. Here's what it says at the bottom:

Based on 1080p data and then on 4K data for "2080 Ti and faster".

However as you pointed out, this review has the 5060 as 29% faster at 1080p.

Loads of numbers in the database are skewed by 20% or more, especially if you compare GPUs of different generations. It seems like a relative number is put in the db and then it's never updated so the rankings end up based on different games. For instance, one of the last times the 1080 Ti and 5700 XT were compared (3060 review), the 1080 Ti was 3% faster at 1080p yet in the database it's listed as 17% faster, as seen above.

Short answer: The TPU database is off by up to 20% so always find the most recent review here containing your 2 cards and compare there. If your 2 cards of interest never overlapped in a single review, then ignore the ranking and find a Youtuber who's compared them and hope they did a decent job.

- Joined

- May 14, 2004

- Messages

- 28,973 (3.75/day)

| Processor | Ryzen 7 5700X |

|---|---|

| Memory | 48 GB |

| Video Card(s) | RTX 4080 |

| Storage | 2x HDD RAID 1, 3x M.2 NVMe |

| Display(s) | 30" 2560x1600 + 19" 1280x1024 |

| Software | Windows 10 64-bit |

It seems like a relative number is put in the db and then it's never updated

I update it all the time. Let me check.

Edit: The 5050 is listed at relative 1080p performance, while the 5060 is listed at relative 4K performance, because it's faster than the 2080 Ti. So there's a range around the cutoff where some cards end up in slightly odd places. How to fix?

Edit2: Reversed the sort order so that it's better aligned with TPU charts, which show the best at the top.

Edit3: Maybe we could change 4K cutoff to RTX 3080, instead of 2080 Ti, to ensure all cards in the range have more than 8 GB VRAM?
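To illustrate the mixed-resolution effect described in the edits above, here is a minimal sketch. All scores are made-up numbers relative to a hypothetical reference card (reference = 1.0), chosen only so that the same-resolution gap comes out near the review's 29% and the mixed gap near the database's 8%. They are not TPU data, and `percent_faster` is just an illustrative helper, not how the database actually computes its ranking.

```python
def percent_faster(a: float, b: float) -> float:
    """Return how much faster score a is than score b, in percent."""
    return (a / b - 1.0) * 100.0


# Hypothetical relative scores (reference card = 1.0), NOT real measurements.
rel_1080p = {"RTX 5050": 0.600, "RTX 5060": 0.774}
rel_4k = {"RTX 5060": 0.648}

# Both cards taken from the same 1080p chart.
same_res = percent_faster(rel_1080p["RTX 5060"], rel_1080p["RTX 5050"])

# 5060 taken from the 4K chart, 5050 from the 1080p chart (the mixed case).
mixed = percent_faster(rel_4k["RTX 5060"], rel_1080p["RTX 5050"])

print(f"same resolution: {same_res:.0f}% faster")
print(f"mixed resolutions: {mixed:.0f}% faster")
```

With these assumed inputs the same-resolution comparison gives about 29%, while the mixed one gives about 8%, even though nothing about either card changed. Only the baseline did, which is why comparing two cards inside a single review chart is the safer approach.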
