- Joined

- Oct 9, 2007

- Messages

- 47,853 (7.38/day)

- Location

- Dublin, Ireland

| System Name | RBMK-1000 |

|---|---|

| Processor | AMD Ryzen 7 5700G |

| Motherboard | Gigabyte B550 AORUS Elite V2 |

| Cooling | DeepCool Gammax L240 V2 |

| Memory | 2x 16GB DDR4-3200 |

| Video Card(s) | Galax RTX 4070 Ti EX |

| Storage | Samsung 990 1TB |

| Display(s) | BenQ 1440p 60 Hz 27-inch |

| Case | Corsair Carbide 100R |

| Audio Device(s) | ASUS SupremeFX S1220A |

| Power Supply | Cooler Master MWE Gold 650W |

| Mouse | ASUS ROG Strix Impact |

| Keyboard | Gamdias Hermes E2 |

| Software | Windows 11 Pro |

Apart from being the industry's leading 3D graphics benchmark application, 3DMark has had a long history of 3D graphics hardware manufacturers cheating with their hardware using application-specific optimisations against Futuremark's guidelines to boost 3DMark scores. Often, this is done by drivers detecting the 3DMark executable, and downgrading image quality, so the graphics processor has to handle lesser amount of processing load from the application, and end up with a higher performance score. Time and again, similar application-specific optimisations have tarnished 3DMark's credibility as an industry-wide benchmark.

This time around, it's neither of the two graphics giants in the news for the wrong reasons, it's Intel. Although the company has a wide consumer base of integrated graphics, perhaps the discerning media user / very-casual gamer finds it best to opt for integrated graphics (IGP) solutions from NVIDIA or AMD. Such choices rely upon reviews evaluating the IGPs performance at accelerating video (where it's common knowledge that Intel's IGPs rely heavily on the CPU for smooth video playback, while competing IGPs fare better at hardware-acceleration), synthetic and real-world 3D benchmarks, among other application-specific tests.

Here's a shady trick Intel is using to up its 3DMark Vantage score: the drivers, upon seeing the 3DMark Vantage executable, change the way they normally function, ask the CPU to pitch in with its processing power, and gain significant performance according to an investigation by Tech Report. While the image quality of the application isn't affected, the load on the IGP is effectively reduced, deviating from the driver's usual working model. This is in violation of Futuremark's 3DMark Vantage Driver Approval Policy (read here), which says:

A perfmon (performance monitor) log of the benchmark as it progressed, shows stark irregularities in the CPU load graphs between the two, during the GPU tests, although the two remained largely the same during the CPU tests. An example of one such graphs is below:

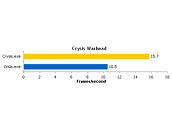

When asked for a comment to these findings, Intel replied by saying that its drivers are designed to utilize the CPU for some parts of the 3D rendering such as geometry rendering, when pixel and vertex processing saturates the IGP. Call of Juarez, Crysis, Lost Planet: Extreme Conditions, and Company of Heroes, are among other applications that the driver sees and quickly morphs the way the entire graphics subsystem works. A similar test run on Crysis Warhead yields a similar result:

Currently, Intel's 15.15.4.1872 drivers for Windows 7 aren't in Futuremark's list of approved drivers, none of Intel's Windows 7 drivers do. For a complete set of graphs, refer to the source article.

View at TechPowerUp Main Site

This time around, it's neither of the two graphics giants in the news for the wrong reasons, it's Intel. Although the company has a wide consumer base of integrated graphics, perhaps the discerning media user / very-casual gamer finds it best to opt for integrated graphics (IGP) solutions from NVIDIA or AMD. Such choices rely upon reviews evaluating the IGPs performance at accelerating video (where it's common knowledge that Intel's IGPs rely heavily on the CPU for smooth video playback, while competing IGPs fare better at hardware-acceleration), synthetic and real-world 3D benchmarks, among other application-specific tests.

Here's a shady trick Intel is using to up its 3DMark Vantage score: the drivers, upon seeing the 3DMark Vantage executable, change the way they normally function, ask the CPU to pitch in with its processing power, and gain significant performance according to an investigation by Tech Report. While the image quality of the application isn't affected, the load on the IGP is effectively reduced, deviating from the driver's usual working model. This is in violation of Futuremark's 3DMark Vantage Driver Approval Policy (read here), which says:

There's scope for ambiguity there. To prove that Intel's drivers indeed don't play fair at 3DMark Vantage, Tech Report put an Intel G41 Express chipset based motherboard with Intel's latest 15.15.4.1872 Graphics Media Accelerator drivers, through 3DMark Vantage 1.0.1. The reviewer simply renamed the 3DMark executable, in this case from "3DMarkVantage.exe" to "3DMarkVintage.exe", and there you are: a substantial performance difference.With the exception of configuring the correct rendering mode on multi-GPU systems, it is prohibited for the driver to detect the launch of 3DMark Vantage executable and to alter, replace or override any quality parameters or parts of the benchmark workload based on the detection. Optimizations in the driver that utilize empirical data of 3DMark Vantage workloads are prohibited.

A perfmon (performance monitor) log of the benchmark as it progressed, shows stark irregularities in the CPU load graphs between the two, during the GPU tests, although the two remained largely the same during the CPU tests. An example of one such graphs is below:

When asked for a comment to these findings, Intel replied by saying that its drivers are designed to utilize the CPU for some parts of the 3D rendering such as geometry rendering, when pixel and vertex processing saturates the IGP. Call of Juarez, Crysis, Lost Planet: Extreme Conditions, and Company of Heroes, are among other applications that the driver sees and quickly morphs the way the entire graphics subsystem works. A similar test run on Crysis Warhead yields a similar result:

Currently, Intel's 15.15.4.1872 drivers for Windows 7 aren't in Futuremark's list of approved drivers, none of Intel's Windows 7 drivers do. For a complete set of graphs, refer to the source article.

View at TechPowerUp Main Site

Last edited: