
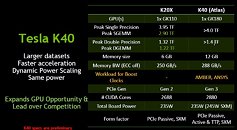

NVIDIA is readying its next big single-GPU compute accelerator, the Tesla K40, codenamed "Atlas." A company slide leaked to the web by Chinese publication ByCare reveals its specifications. The card is based on new GK180 silicon. We've never heard of this chip before, but judging by the limited specifications at hand, it doesn't look much different on paper from the GK110. It features 2,880 CUDA cores.

The card offers over 4 TFLOP/s of peak single-precision floating-point performance and over 1.4 TFLOP/s of double-precision performance. It ships with 12 GB of GDDR5 memory, double that of the Tesla K20X, with 288 GB/s of memory bandwidth. The card appears to support dynamic overclocking, which works with ANSYS and AMBER workloads. The chip uses the PCI-Express 3.0 system bus. The card will be available in two form-factors, an add-on card and SXM, with maximum power draw rated at 235 W and 245 W, respectively.
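As a rough sanity check on the leaked figures, the arithmetic below derives the minimum shader clock the quoted throughput implies, plus the ratio of compute to memory bandwidth. It is a back-of-the-envelope sketch only: the two-FLOPs-per-core-per-clock (one fused multiply-add) figure and the GK110-style 1:3 ratio of FP64 units to CUDA cores are assumptions, not details from the slide.

```python
# Back-of-the-envelope figures derived from the leaked Tesla K40 specs.
# Assumptions (NOT from the slide): each CUDA core retires one fused
# multiply-add (2 FLOPs) per clock, and the chip has one FP64 unit per
# three CUDA cores, as on GK110.

CUDA_CORES = 2880
SP_PEAK_FLOPS = 4.0e12         # "over 4 TFLOP/s" single precision (lower bound)
DP_PEAK_FLOPS = 1.4e12         # "over 1.4 TFLOP/s" double precision (lower bound)
MEM_BANDWIDTH = 288e9          # 288 GB/s
FLOPS_PER_UNIT_PER_CLOCK = 2   # assumed: one FMA per unit per cycle
FP64_UNITS = CUDA_CORES // 3   # assumed: GK110-style 1:3 FP64:FP32 unit ratio


def implied_clock_mhz(peak_flops: float, units: int) -> float:
    """Minimum clock (MHz) at which `units` ALUs reach `peak_flops`."""
    return peak_flops / (units * FLOPS_PER_UNIT_PER_CLOCK) / 1e6


def machine_balance(peak_flops: float, bandwidth: float) -> float:
    """FLOPs the chip can perform per byte fetched from memory."""
    return peak_flops / bandwidth


sp_clock = implied_clock_mhz(SP_PEAK_FLOPS, CUDA_CORES)
dp_clock = implied_clock_mhz(DP_PEAK_FLOPS, FP64_UNITS)
balance = machine_balance(SP_PEAK_FLOPS, MEM_BANDWIDTH)

print(f"Implied clock from SP figure: >= {sp_clock:.0f} MHz")
print(f"Implied clock from DP figure: >= {dp_clock:.0f} MHz")
print(f"SP machine balance: {balance:.1f} FLOP/byte")
```

Because the slide quotes lower bounds ("over"), the implied clocks are minimums as well; the actual clock speeds were not part of the leak.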

View at TechPowerUp Main Site
