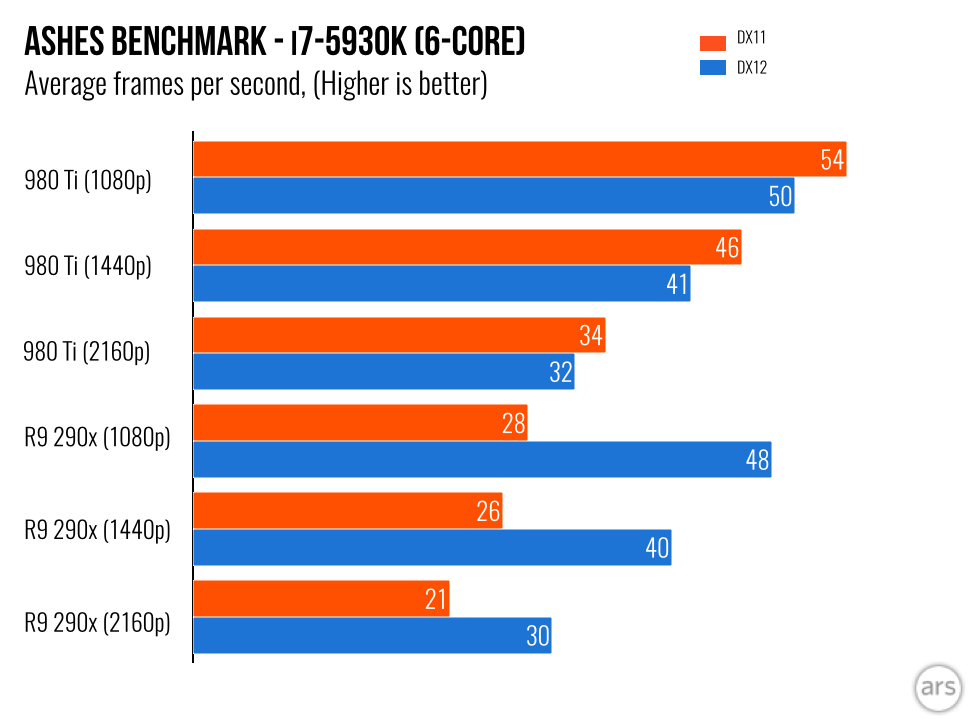

It turns out that NVIDIA's "Maxwell" architecture has an Achilles' heel after all, one that tilts the scales in favor of AMD's competing Graphics CoreNext architecture, which is better prepared for DirectX 12. "Maxwell" lacks support for async compute, one of the three highlight features of Direct3D 12, even though the GeForce driver "exposes" the feature's presence to apps. This came to light when game developer Oxide Games alleged that NVIDIA's marketing department pressured it to remove certain features from its "Ashes of the Singularity" DirectX 12 benchmark.

Async Compute is a standardized API-level feature added to Direct3D by Microsoft, which lets an app better exploit a GPU's number-crunching resources by breaking down its graphics rendering tasks. Since the NVIDIA driver tells apps that "Maxwell" GPUs support it, Oxide Games simply built its benchmark with async compute support, but when it attempted to use the feature on Maxwell, the result was an "unmitigated disaster." During the course of its developer correspondence with NVIDIA to try to fix the issue, Oxide learned that "Maxwell" doesn't really support async compute at the bare-metal level, and that the NVIDIA driver bluffs its support to apps. Instead of fixing it, Oxide alleges, NVIDIA started pressuring it to remove the parts of its code that use async compute altogether.

"Personally, I think one could just as easily make the claim that we were biased toward NVIDIA as the only "vendor" specific-code is for NVIDIA where we had to shutdown async compute. By vendor specific, I mean a case where we look at the Vendor ID and make changes to our rendering path. Curiously, their driver reported this feature was functional but attempting to use it was an unmitigated disaster in terms of performance and conformance so we shut it down on their hardware. As far as I know, Maxwell doesn't really have Async Compute so I don't know why their driver was trying to expose that. The only other thing that is different between them is that NVIDIA does fall into Tier 2 class binding hardware instead of Tier 3 like AMD which requires a little bit more CPU overhead in D3D12, but I don't think it ended up being very significant. This isn't a vendor specific path, as it's responding to capabilities the driver reports," writes Oxide, in a statement disputing the "misinformation" about the "Ashes of the Singularity" benchmark that it says NVIDIA spread in its press communications (presumably to VGA reviewers).
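To make the distinction in Oxide's statement concrete, here is a minimal C++ sketch of the two kinds of path selection it describes: one that only responds to capabilities the driver reports, and one that looks at the Vendor ID and overrides what the driver claims. This is not Oxide's code. The `ReportedCaps` and `RenderPath` structs and both function names are hypothetical, and no real Direct3D 12 calls are made. Only the two PCI vendor IDs are real values.

```cpp
#include <cassert>
#include <cstdint>

// PCI vendor IDs as assigned by PCI-SIG.
constexpr std::uint32_t kVendorNvidia = 0x10DE;
constexpr std::uint32_t kVendorAmd    = 0x1002;

// Hypothetical stand-in for what a driver reports to the application.
struct ReportedCaps {
    std::uint32_t vendorId;
    bool asyncCompute;  // driver claims async compute is functional
    int  bindingTier;   // resource binding tier (1, 2 or 3)
};

// Hypothetical render-path settings chosen by the engine.
struct RenderPath {
    bool useAsyncCompute;
    bool useTier3Binding;
};

// Capability-driven selection: trusts only what the driver reports.
// Per the quote, this is NOT a vendor-specific path.
RenderPath selectByCaps(const ReportedCaps& caps) {
    assert(caps.bindingTier >= 1 && caps.bindingTier <= 3);
    return RenderPath{caps.asyncCompute, caps.bindingTier >= 3};
}

// Vendor-specific selection: starts from the reported capabilities,
// then checks the Vendor ID and turns async compute off regardless
// of what the driver reported (the one override the quote mentions).
RenderPath selectWithVendorOverride(const ReportedCaps& caps) {
    RenderPath path = selectByCaps(caps);
    if (caps.vendorId == kVendorNvidia) {
        path.useAsyncCompute = false;
    }
    return path;
}
```

With inputs modelled on the quote (an NVIDIA part reporting async compute as functional with Tier 2 binding, an AMD part reporting Tier 3), `selectByCaps` would enable async compute on both, while `selectWithVendorOverride` disables it only on the NVIDIA part. The binding-tier difference is handled purely from the reported capability in both cases.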

Given its growing market share, NVIDIA could use similar tactics to keep game developers away from industry-standard API features that it doesn't support but rival AMD does. NVIDIA drivers tell Windows that its GPUs support DirectX 12 feature level 12_1. We wonder how much of that support is faked at the driver level, like async compute. The company is already drawing flak for borderline anti-competitive practices with GameWorks, which effectively creates a walled garden of visual effects that only users of NVIDIA hardware can experience, for the same $59 everyone spends on a particular game.

View at TechPowerUp Main Site
