Uhhh have you not looked at benchmarks for the past year? I genuinely encourage you to go read them and then come back.

Ok you back? Good.

1) The 7970 overclocks better than anything that has been released since then. My 7970 ran at 1220/1840. My brother's 7950 ran at 1120/1800, and all of my crypto-mining 7950s ran at 1100+/1800+. Those are 40% overclocks lmao! My 7970 benches as well as a 980 in Deus Ex: MD and BF1. So drop that argument here.

2) 2-3 generations? You completely missed what I was saying. I said that within a year of the 7970's launch it was ALREADY beating the 680 by 10-20% on average. Most people keep their cards for 2-3 years in my experience.

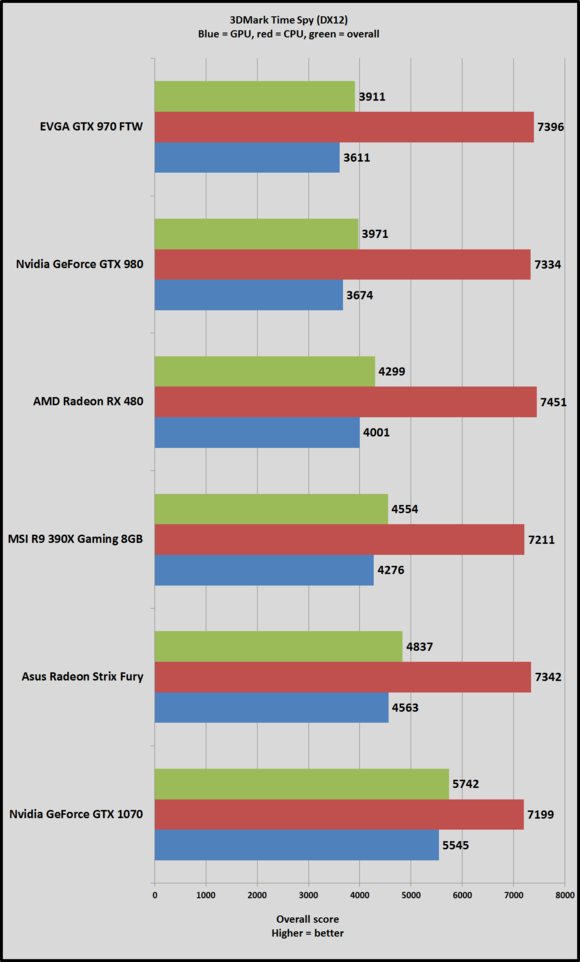

Furthermore, a card being 1-2 generations newer doesn't make a difference. Everyone CONSTANTLY complains about AMD's recent trend of re-branding old GPUs. I will admit that I think it is stupid too, but can you blame them? Radeon is like 1-2 times smaller than Nvidia. If they can sell the 7970 2 years later and have it compete with the 970 they will lmao. Hence why I just bought a Fury for $310 - it beats the 1070 in TODAY's games. That's just stupid.

You're overstating the 680. The 680 did enough to stay ahead of the initial 7970. But AMD rereleased the 7970 as a GHz Edition, and that one did perform on par if not better.

Given the driver optimisations AMD perform, the 7970 gained performance over the years. The 680 didn't, because Nvidia get their DX11 driver optimisations out very quickly, so there was little left to gain later.

The question is, are you buying a card for now or for two years down the line? You seem to have a hugely AMD-slanted bias. I've seen your posts on other forums and it's clear you're a hater; you lack balance. For all your precise arguments, like any hater, you tend to use slanted evidence or ignore standard business practice.

I own a 980ti and it makes me chuckle to see it perform on par with a Fury X. Now that's in a DX11 version of a Gaming Evolved title. Sure, in DX12 the Fury X will gain some frames but why should I cry? I played the game at 1440p with very high settings (some maxed) at about 60fps. It was excellent.

In Doom Vulkan I was on far higher fps. Yes, a Fury X would have got more, but I've got a 60Hz monitor. My gaming experiences have been great.

I bought the card a year ago. It hasn't let me down.

Going forward, I am no fan of Nvidia. I won't spend the money for a 1080, 1080ti or above because Vega is only 6 months away (hopefully at most). If Vega has better perf/watt than Polaris and it's a far bigger chip, it should match the 1080 in DX11 and it should absolutely own the bare metal APIs. So Nvidia won't see my money again until Volta and even then, that's only if it performs.

So if Vega is twice as good as a 480, I'm on that next. But I have no reason to move from my 980ti because it still performs very, very well. If I can play AAA Gaming Evolved titles with butter smooth frames, I have nothing to feel cheated about. Only children get upset because someone else's card plays it faster than theirs.

Oh and one more thing, my PowerColor LCS 7970 clocked to the 1300 Catalyst maximum. My MSI version (under an EKWB) only managed 1225.

I had more fun overclocking my original Titan using the voltage soft mod. I can't remember the clocks but they were scary for such a card. Two of those cards got recycled in TPU.