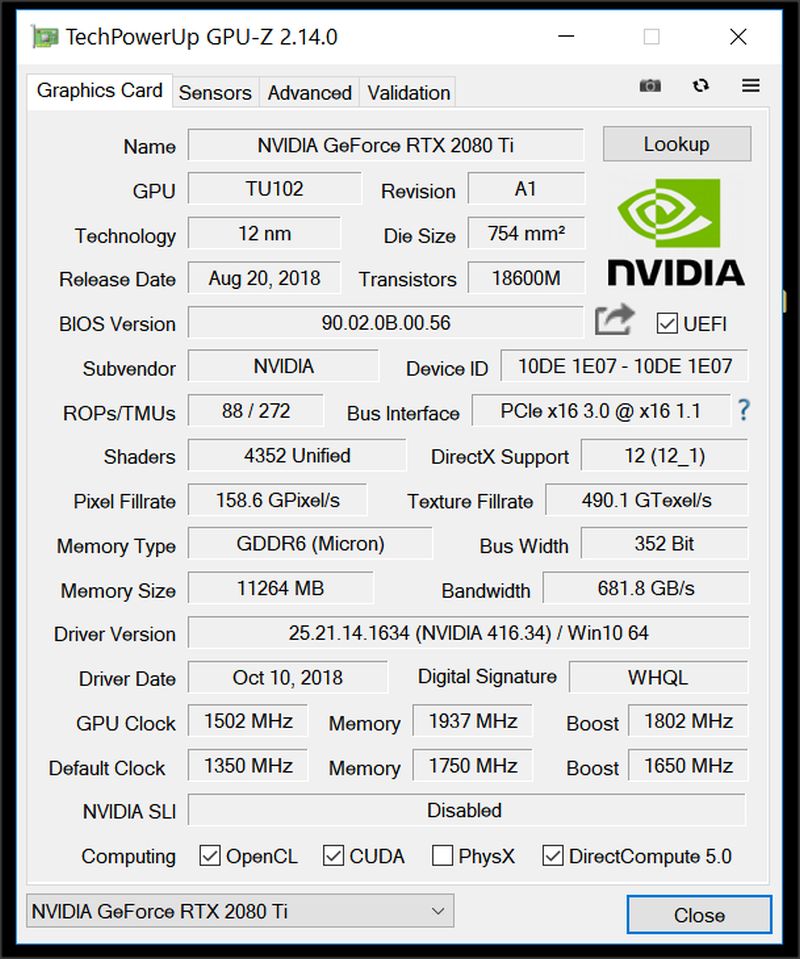

TechPowerUp today released the latest version of GPU-Z, the popular graphics subsystem information and diagnostic utility. Version 2.14.0 adds support for the Intel UHD Graphics iGPUs embedded in 9th generation Core "Coffee Lake Refresh" processors. GPU-Z now calculates pixel and texture fill-rates more accurately by using the boost clock, when available, instead of the base clock. This matters most for iGPUs, whose base and boost clocks differ widely, but it is also relevant to some newer GPU generations, such as the NVIDIA RTX 20 series.
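To illustrate why the clock choice matters, here is a minimal sketch of the usual fill-rate arithmetic: pixel fill-rate is ROPs × clock and texture fill-rate is TMUs × clock, with the boost clock preferred and the base clock used as a fallback. The `GpuSpec` class, the function names and the example numbers are hypothetical and are not taken from GPU-Z's code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GpuSpec:
    """Hypothetical container for the unit counts and clocks a fill-rate figure needs."""
    rops: int                                # render output units (pixels per clock)
    tmus: int                                # texture mapping units (texels per clock)
    base_clock_mhz: float
    boost_clock_mhz: Optional[float] = None  # None when no boost clock is reported


def rate_clock_mhz(spec: GpuSpec) -> float:
    """Prefer the boost clock when it is available, else fall back to the base clock."""
    if spec.boost_clock_mhz is not None:
        return spec.boost_clock_mhz
    return spec.base_clock_mhz


def pixel_fillrate_gpixels(spec: GpuSpec) -> float:
    # ROPs x clock (MHz) gives MPixel/s; divide by 1000 for GPixel/s.
    return spec.rops * rate_clock_mhz(spec) / 1000.0


def texture_fillrate_gtexels(spec: GpuSpec) -> float:
    # TMUs x clock (MHz) gives MTexel/s; divide by 1000 for GTexel/s.
    return spec.tmus * rate_clock_mhz(spec) / 1000.0


if __name__ == "__main__":
    # Illustrative numbers only, not taken from GPU-Z or any specific card.
    gpu = GpuSpec(rops=64, tmus=184, base_clock_mhz=1515, boost_clock_mhz=1710)
    no_boost = GpuSpec(rops=64, tmus=184, base_clock_mhz=1515)
    print(pixel_fillrate_gpixels(gpu), texture_fillrate_gtexels(gpu))
    print(pixel_fillrate_gpixels(no_boost))
```

With these illustrative numbers, the boost-based pixel fill-rate works out to 109.44 GPixel/s, against 96.96 GPixel/s when only the base clock is known. The wider the gap between base and boost clocks, as on many iGPUs, the larger the difference.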

A number of minor bugs were also fixed in GPU-Z 2.14.0, including a missing Intel iGPU temperature sensor and incorrect clock-speed readings on some Intel iGPUs. For NVIDIA GPUs, the power sensors report power draw both as an absolute value and as a percentage of the GPU's rated TDP, in separate read-outs. This feature was introduced in the previous version; this version clarifies the sensor labels by adding "W" and "%" to their names. Grab GPU-Z from the link below.
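The two NVIDIA power read-outs are related by simple arithmetic: percentage = draw ÷ rated TDP × 100. The sketch below shows this and puts the unit into each label, in the spirit of the 2.14.0 change. The function name, the label strings and the 180 W / 225 W figures are hypothetical and are not GPU-Z's actual sensor names or values.

```python
from typing import Dict


def power_readouts(draw_w: float, rated_tdp_w: float) -> Dict[str, float]:
    """Return two separate read-outs: absolute draw and share of rated TDP."""
    if rated_tdp_w <= 0:
        raise ValueError("rated TDP must be positive")
    percent_of_tdp = draw_w / rated_tdp_w * 100.0
    # Hypothetical label names; the unit is part of each label.
    return {
        "Power Draw (W)": round(draw_w, 1),
        "Power Draw (%)": round(percent_of_tdp, 1),
    }


if __name__ == "__main__":
    # Illustrative values: 180 W drawn on a card rated for 225 W.
    for label, value in power_readouts(180.0, 225.0).items():
        print(f"{label}: {value}")
```

Embedding the unit in the label keeps the two read-outs distinguishable even when they are logged side by side without any other context.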

DOWNLOAD: TechPowerUp GPU-Z 2.14.0

The change-log follows.

- When available, boost clock is used to calculate fillrate and texture rate

- Fixed missing Intel GPU temperature sensor

- Fixed wrong clocks on some Intel IGP systems ("12750 MHz")

- NVIDIA power sensors now labeled with "W" and "%"

- Added support for Intel Coffee Lake Refresh

View at TechPowerUp Main Site