English source

AMD's Navi 21 GPU based high-end Radeon RX graphics cards are rumored to be twice as fast as Navi 10 'RX 5700 XT' and feature GDDR6 memory.

wccftech.com

Beyond this being a random post on a random BBS with zero reason for us to believe it ("According to people familiar with the matter at the Taiwan PTT Forum", lol), it makes some very questionable assertions ("It was also pointed out that given the huge Die size of the GPU itself, the card will eventually not use HBM, but instead rely on GDDR6" - yet this die is reportedly significantly smaller than Fiji, which used HBM, and there's no reason two stacks of HBM2(E) wouldn't fit just fine next to a 505mm² die). Also, there's nothing new in that rumor; it's been rehashed over and over and over again on these forums and elsewhere. Still, let's be generous and assume it's somewhat accurate. The question then becomes: 505mm² of what?

The density gains of the XSX would indicate better than 1:1 scaling of CU count with die area compared to Navi 10, i.e. a 505mm² chip would either have >80 CUs or some other stuff added on that we don't yet know about. Let's look closer at this.

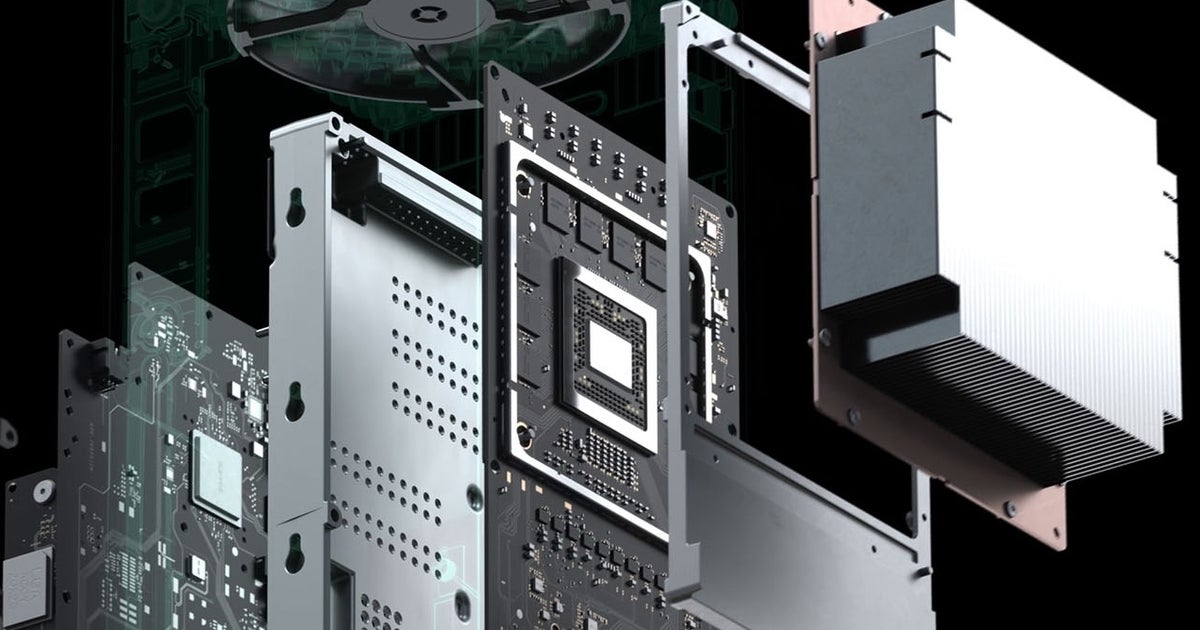

Navi 10 has 40 CUs, a 256-bit G6 bus and a single IF/PCIe 4.0 x16 link on a 251mm² die. The XSX die is 360mm² with 56 CUs, 8 Zen 2 cores, and I/O including a 320-bit G6 bus. A Zen 2 CCD is 74mm² with two 31.3mm² CCXes, 16MB of L3, IF links and anything else that lives on that die. Let's be conservative and discount L3 completely - the XSX then uses at least 2x31.3mm² = 62.6mm² of die area for its CPU cores (likely a bit more as it won't have zero L3 cache, but it will also likely gain density from the node improvement. Some space will also be used for the IF links between the CPU, GPU and memory controllers). This leaves us with at most 360mm² - 63mm² = 297mm² for 56 CUs, all encode/decode blocks (which given the importance of streaming are likely to be fully featured and not cut down), a 320-bit GDDR6 PHY + controllers (compared to the 256-bit PHY and controllers of Navi 10, so 25% more die area for that), and at least two PCIe links for SSDs (unknown whether these are PCIe 3.0 x4, PCIe 4.0 x2 or PCIe 4.0 x4 at this point), plus the chipset uplink etc. While the XSX does gain something in having slightly less I/O than a PC GPU, the gains from that are minor at best. Ignoring that, we have a 25% increase in memory interface die area + a 40% increase in CUs with just an ~18% increase in die size (with the CPU subtracted, that is). And that includes RT hardware.
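For anyone who wants to check the napkin math, here's a rough Python sketch of it. The inputs are just the die sizes, CU counts and bus widths quoted above; the constant and function names are my own, and treating the CPU as exactly two L3-less CCXes is the same simplifying assumption as in the text, not a measured figure.

```python
# Figures quoted in the post (areas in mm²); everything else is derived from them.
NAVI10_AREA = 251.0
NAVI10_CUS = 40
NAVI10_BUS_BITS = 256

XSX_AREA = 360.0
XSX_CUS = 56          # physically present CUs, not the 52 active ones
XSX_BUS_BITS = 320

ZEN2_CCX_AREA = 31.3  # one Zen 2 CCX as quoted above


def xsx_gpu_side_area(ccx_count: int = 2) -> float:
    """Upper bound on XSX die area left once the CPU cores are subtracted.

    Assumption: the CPU costs exactly `ccx_count` CCXes' worth of area.
    """
    return XSX_AREA - ccx_count * ZEN2_CCX_AREA


def pct_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


gpu_area = xsx_gpu_side_area()
area_gain = pct_increase(gpu_area, NAVI10_AREA)
cu_gain = pct_increase(XSX_CUS, NAVI10_CUS)
bus_gain = pct_increase(XSX_BUS_BITS, NAVI10_BUS_BITS)

print(f"XSX area minus CPU cores: {gpu_area:.1f} mm²")
print(f"Area increase over Navi 10: {area_gain:.1f}%")
print(f"CU increase over Navi 10: {cu_gain:.1f}%")
print(f"Memory bus width increase: {bus_gain:.1f}%")
```

The point is only that the CU and bus-width increases are both well above the area increase; the exact percentages shift a little depending on how much area you assign to the CPU.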

While this is some real napkin math (we have no idea if anything beyond the die sizes here is actually accurate in terms of numbers, but IMO they shouldn't be too far off), it tells us that a 505mm² RDNA 2 GPU on the same improved 7nm node as the XSX either must have more than 80 CUs - if the scaling roughly follows my calculations, a 100% area increase over Navi 10 would then be more like a ~140% increase in CUs, or ~95 CUs - or use a lot of die area for something else. Might we see significantly more RT power compared to shader performance in the PC GPUs? Also, if it uses HBM2 rather than a stupidly large 512-bit G6 bus (which IMO sounds likely, despite what that BBS post says), the CU count could grow further (100?) as HBM controllers and PHYs are much more space efficient than G6.
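The extrapolation to a 505mm² die can be sketched the same way. This is a deliberately naive linear model: it assumes everything on the die (I/O, memory PHYs, media blocks) scales with CU count, which it doesn't, so read the result as a rough ceiling for a GDDR6 design rather than a prediction. The names are hypothetical; the inputs are the figures from the post.

```python
# Figures quoted in the post (areas in mm²).
NAVI10_AREA = 251.0
NAVI10_CUS = 40
XSX_GPU_AREA = 360.0 - 2 * 31.3  # XSX die minus two L3-less Zen 2 CCXes (assumption)
XSX_CUS = 56
RUMORED_AREA = 505.0             # rumored Navi 21 die size


def cus_at_area(target_area: float, ref_area: float, ref_cus: int) -> float:
    """Scale CU count linearly with die area from a reference chip."""
    return target_area * ref_cus / ref_area


# 1:1 scaling from Navi 10 versus scaling at the XSX's apparent density.
navi10_scaled = cus_at_area(RUMORED_AREA, NAVI10_AREA, NAVI10_CUS)
xsx_scaled = cus_at_area(RUMORED_AREA, XSX_GPU_AREA, XSX_CUS)
gain_over_navi10 = (xsx_scaled / NAVI10_CUS - 1.0) * 100.0

print(f"Scaled from Navi 10: {navi10_scaled:.0f} CUs")
print(f"Scaled from XSX density: {xsx_scaled:.0f} CUs")
print(f"CU increase over Navi 10 at XSX density: {gain_over_navi10:.0f}%")
```

Scaling from Navi 10 lands at about 80 CUs and scaling from the XSX at about 95, which is where the "more than 80" and "~95" figures come from.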

Still, with all of this within the realm of IMO reasonable speculation (and it is

very much speculation at this point) we have no idea about power, clocks, or anything else. Performance would vary

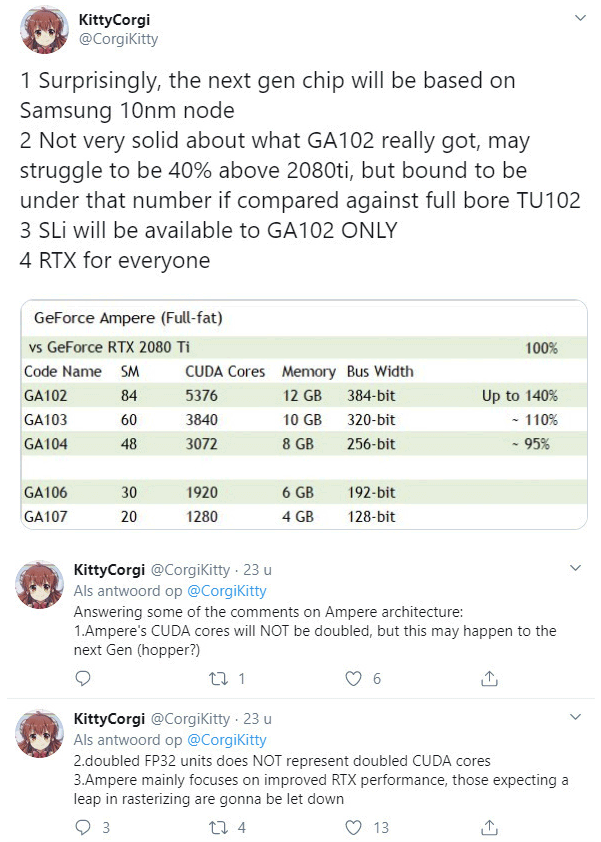

wildly based on all of this. Pricing is also crucial, and a 505mm2 die on TSMC 7nm is not going to be cheap. So, as I've said both here and elsewhere, I don't see a reason to doubt that AMD can bring about a true flagship this generation, but both the absolute performance and pricing is entirely up in the air at this point, as is its competitiveness with Nvidia's so far entirely unknown Ampere arch. There's absolutely no indication in any of this that this will

beat Ampere, simply because Ampere is entirely unknown. But will it be powerful? Absolutely.

Edit: I borked my numbers from about halfway through by calculating from the 52 active CUs in the XSX die rather than the 56 physically present ones. Fixed that; also added a note about possibly using "free" die space for more RT power compared to consoles.