
| System Name | Lynni Zen | Lenowo TwinkPad L14 G2 | Tiny Tiger |

|---|---|

| Processor | AMD Ryzen 7 7700 Raphael | i5-1135G7 Tiger Lake-U | i9-9900k (Turbo disaabled) |

| Motherboard | ASRock B650M PG Riptide Bios v. 3.20 AMD AGESA 1.2.0.3a | Lenowo BDPLANAR Bios 1.68 | Lenowo M720q |

| Cooling | AMD Wraith Cooler | Lenowo C-267C-2 | Lenowo 01MN631 (65W) |

| Memory | Flare X5 2x16GB DDR5 6000MHZ CL36 (AMD EXPO) | Willk Elektronik 2x16GB 2666MHZ CL17 | Crucial 2x16GB |

| Video Card(s) | Sapphire PURE AMD Radeon™ RX 9070 Gaming OC 16GB | Intel® Iris Xe Graphics | Intel® UHD Graphics 630 |

| Storage | Gigabyte M30 1TB|Sabrent Rocket 2TB| HDD: 1TB | WD RED SN700 1TB | M30 1TB\ SSD 1TB HDD: 16TB\10TB |

| Display(s) | KTC M27T20S 1440p@165Hz | LG 48CX OLED 4K HDR | Innolux 14" 1080p |

| Case | Asus Prime AP201 White Mesh | Lenowo L14 G2 chassis | Lenowo M720q chassis |

| Audio Device(s) | Steelseries Arctis Pro Wireless |

| Power Supply | Be Quiet! Pure Power 12 M 750W Goldie | Cyberpunk GaN 65W USB-C charger | Lenowo 95W slim tip |

| Mouse | Logitech G305 Lightspeedy Wireless | Lenowo TouchPad & Logitech G305 |

| Keyboard | Ducky One 3 Daybreak Fullsize | L14 G2 UK Lumi |

| Software | Win11 IoT Enterprise 24H2 UK | Win11 IoT Enterprise LTSC 24H2 UK / Arch (Fan) |

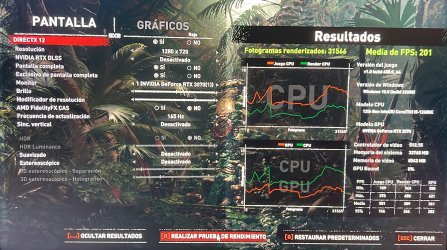

| Benchmark Scores | 3DMARK: https://www.3dmark.com/3dm/89434432? GPU-Z: https://www.techpowerup.com/gpuz/details/v3zbr |

True as that might be, default DDR5 speeds of 5200 and 5600MHz sadly aren't much faster than a good DDR4 kit, and that is what most pre-builds with DDR5 ship with, at least in my country. Intel is running with DDR5 4800 CL40. Come on, people run it at 7000+ CL30 or CL32 already. And AMD is running with 3200 CL14 while my AMD system runs at 3800 CL13.

3200MHz CL16 = 10ns

3600MHz CL18 = 10ns

5200MHz CL38 = 14.615ns

So for people on the cheap stuff, where DDR5 runs at its default speed with a high CL, the faster option in latency is sadly still good DDR4, if the calculation is right.

Link: https://notkyon.moe/ram-latency2.htm
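
For anyone who wants to check the numbers without the calculator, here is a minimal Python sketch of the usual first-word latency formula (CL × 2000 / data rate). The function name `cas_latency_ns` and the kit list are made up for illustration, and it only covers CAS latency, not total memory access time.

```python
def cas_latency_ns(data_rate_mts: float, cl: int) -> float:
    """Return the CAS (first-word) latency in nanoseconds.

    DDR memory transfers twice per clock, so the real clock in MHz is
    data_rate / 2 and one clock cycle lasts 2000 / data_rate nanoseconds.
    """
    return cl * 2000.0 / data_rate_mts


# Kits taken from the post: (label, rated speed, CAS latency).
kits = [
    ("DDR4 3200 CL16", 3200, 16),
    ("DDR4 3600 CL18", 3600, 18),
    ("DDR5 5200 CL38", 5200, 38),
]

for label, rate, cl in kits:
    print(f"{label} = {cas_latency_ns(rate, cl):.3f}ns")
```

Add your own kit to the list to see where it lands. Lower is better, but bandwidth still scales with the rated speed, so latency alone doesn't tell the whole story.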