T0@st

News Editor

- Joined: Mar 7, 2023
- Messages: 3,328 (3.84/day)
- Location: South East, UK

| System Name | The TPU Typewriter |
|---|---|
| Processor | AMD Ryzen 5 5600 (non-X) |
| Motherboard | GIGABYTE B550M DS3H Micro ATX |
| Cooling | DeepCool AS500 |
| Memory | Kingston Fury Renegade RGB 32 GB (2 x 16 GB) DDR4-3600 CL16 |
| Video Card(s) | PowerColor Radeon RX 7800 XT 16 GB Hellhound OC |
| Storage | Samsung 980 Pro 1 TB M.2-2280 PCIe 4.0 X4 NVME SSD |
| Display(s) | Lenovo Legion Y27q-20 27" QHD IPS monitor |
| Case | GameMax Spark M-ATX (re-badged Jonsbo D30) |
| Audio Device(s) | FiiO K7 Desktop DAC/Amp + Philips Fidelio X3 headphones, or ARTTI T10 Planar IEMs |
| Power Supply | ADATA XPG CORE Reactor 650 W 80+ Gold ATX |
| Mouse | Roccat Kone Pro Air |
| Keyboard | Cooler Master MasterKeys Pro L |
| Software | Windows 10 64-bit Home Edition |

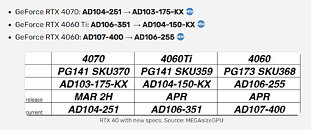

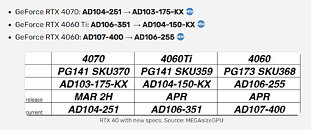

NVIDIA completed its last round of GeForce RTX 40-series GPU refreshes at the very end of January, but new evidence suggests that another wave is scheduled for imminent release. MEGAsizeGPU has acquired and shared a tabulated list of new Ada Lovelace GPU variants; the trusted leaker's post presents a timetable that was supposed to kick off in the second half of this month.

First up is the GeForce RTX 4070, currently based on the AD104-251 die. The leaked table suggests that a new variant, AD103-175-KX, is due very soon (or is already overdue). Wccftech pointed out that this ID was previously linked to NVIDIA's GeForce RTX 4070 SUPER SKU. Moving into April, next up is the GeForce RTX 4060 Ti, which jumps from the current AD106-351 die to a new unit: AD104-150-KX. The third adjustment (allegedly) affects the GeForce RTX 4060, going from AD107-400 to AD106-255, also timetabled for next month.

MEGAsizeGPU reckons that Team Green will be swapping chips without rolling out broadly adjusted specifications, although a best-case scenario could include higher CUDA, RT, and Tensor core counts. According to VideoCardz, the new die designations have popped up in freshly released official driver notes, which suggests that the variants are getting an "under the radar" launch treatment.

View at TechPowerUp Main Site | Source