So it's perfectly fine with you that 75% of those frames show something inaccurate, unreal, approximated?

You're okay with having paid so much for your GPU and this being what you get?

Is this a joke? How on Earth could a 5080, with about 65% of the 4090's processing units, be 20-30% faster at native resolution?

Only with FG. Now imagine what would happen if the RTX 4090 supported the newest generation of FG.

NVIDIA AD102, 2520 MHz, 16384 Cores, 512 TMUs, 176 ROPs, 24576 MB GDDR6X, 1313 MHz, 384 bit

www.techpowerup.com

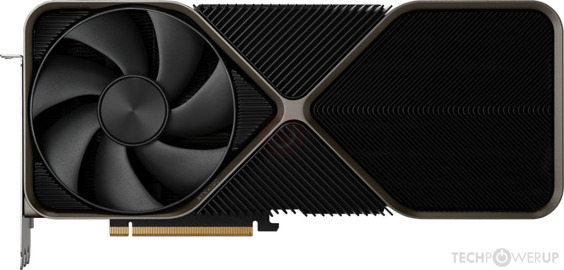

NVIDIA GB203, 2617 MHz, 10752 Cores, 336 TMUs, 112 ROPs, 16384 MB GDDR7, 1875 MHz, 256 bit

www.techpowerup.com
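
To put numbers on that comparison, here is a quick sketch that divides the GB203 figures by the AD102 figures from the two TechPowerUp entries above. The dictionary names and the `ratio` helper are just mine for illustration, and the last line rests on an assumption: cores multiplied by listed clock is only a crude proxy for raw shader throughput, since it ignores architecture changes, memory bandwidth and real sustained clocks.

```python
# Spec figures copied from the two TechPowerUp entries quoted above.
ad102 = {"cores": 16384, "tmus": 512, "rops": 176, "clock_mhz": 2520}  # RTX 4090
gb203 = {"cores": 10752, "tmus": 336, "rops": 112, "clock_mhz": 2617}  # RTX 5080


def ratio(key):
    """Return the GB203 value as a fraction of the AD102 value."""
    return gb203[key] / ad102[key]


for key in ("cores", "tmus", "rops"):
    print(f"{key}: {ratio(key):.1%}")

# Assumption: cores x listed clock as a rough stand-in for raw shader throughput.
proxy = ratio("cores") * ratio("clock_mhz")
print(f"cores x clock: {proxy:.1%}")
```

On those listed specs the 5080 comes out at roughly 64-66% of the 4090's units, and about 68% once the slightly higher clock is factored in, so on paper it has less raw hardware, not more.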