- Joined: Jan 4, 2013
- Messages: 1,212 (0.26/day)
- Location: Denmark

| System Name | R9 5950x/Skylake 6400 |
|---|---|
| Processor | R9 5950x/i5 6400 |
| Motherboard | Gigabyte Aorus Master X570/Asus Z170 Pro Gaming |
| Cooling | Arctic Liquid Freezer II 360/Stock |
| Memory | 4x8GB Patriot PVS416G4440 CL14/G.S Ripjaws 32 GB F4-3200C16D-32GV |
| Video Card(s) | 7900XTX/6900XT |
| Storage | RIP Seagate 530 4TB (died after 7 months), WD SN850 2TB, Aorus 2TB, Corsair MP600 1TB / 960 Evo 1TB |
| Display(s) | 3x LG 27gl850 1440p |
| Case | Custom builds |
| Audio Device(s) | - |
| Power Supply | Silverstone 1000watt modular Gold/1000Watt Antec |
| Software | Win11pro/win10pro / Win10 Home / win7 / wista 64 bit and XPpro |

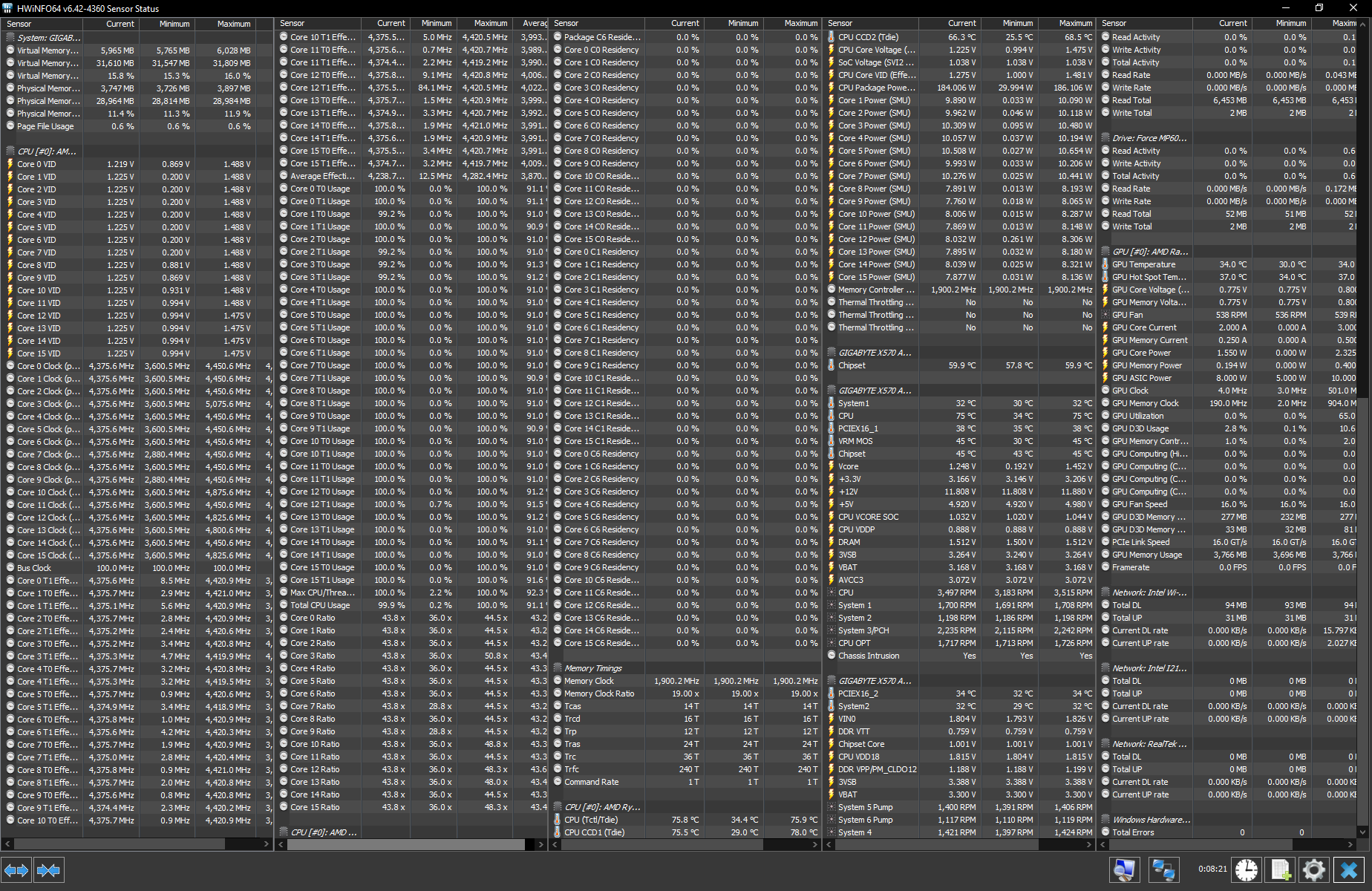

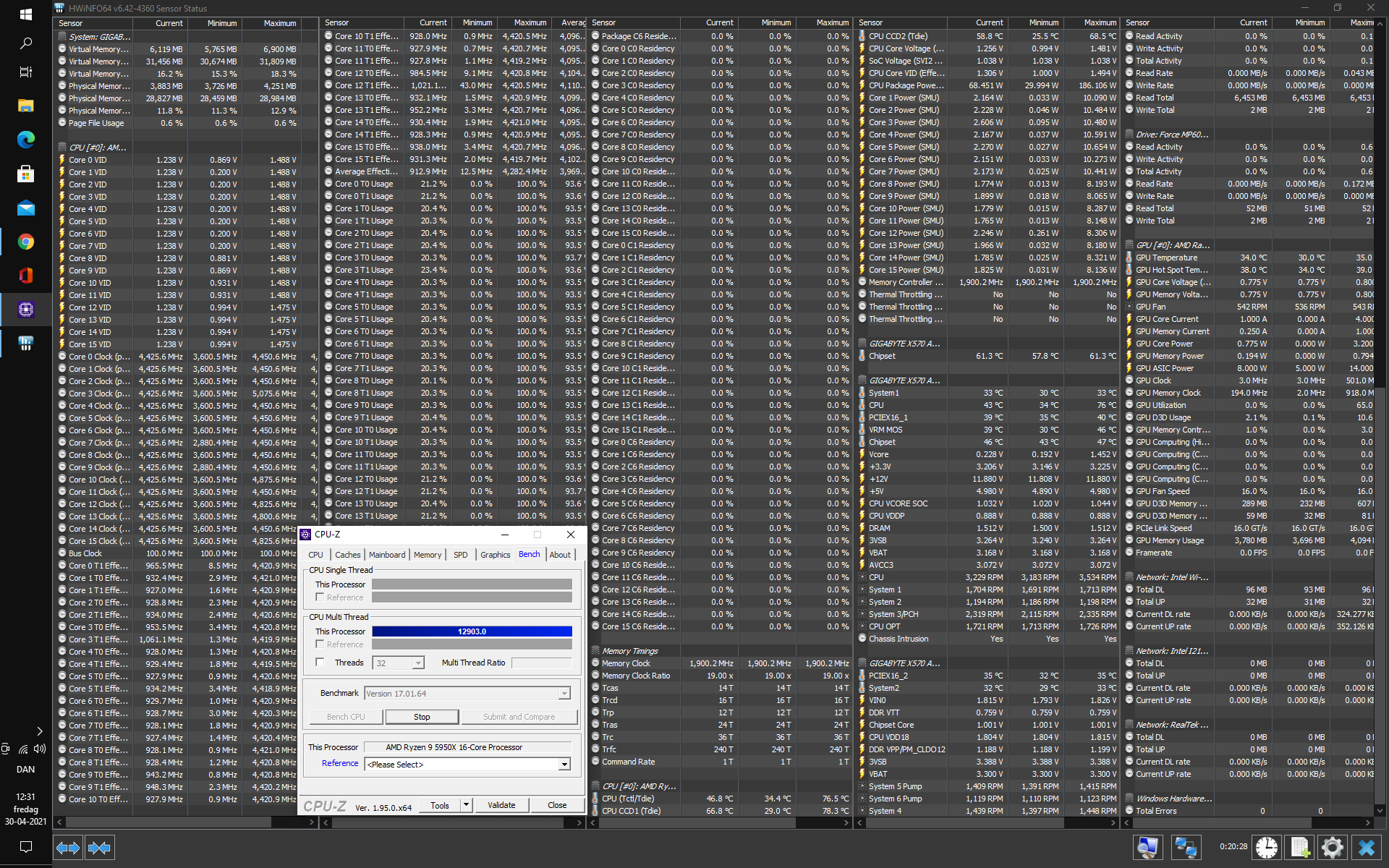

I was planning a repaste of my CPU to see if there would be any difference. To be honest, I could not remember whether I had used my new Noctua NT-H2 paste on my own CPU or only on some machines I have built during the last couple of months... While testing afterwards (a burn-in, if you can call it that), I noticed that there was a 10°C difference between CCD1 and CCD2.

Looking at some earlier tests from February, I saw almost exactly the same difference between the two CCDs, with CCD1 at about 78-80°C and CCD2 almost 10°C lower. There is always some variation in mount and paste, but this gap seems to be more or less consistent.

My question is: what do other dual-CCD owners experience? E.g. with the 3900X/3950X and 5900X/5950X models?

I did the "burn-in" with the CPU-Z stress test.
