
Did you see the NVIDIA keynote presentation at CES this year? For us, one of the highlights was the DLSS demo based on our 3DMark Port Royal ray tracing benchmark. Today, we're thrilled to announce that we've added this exciting new graphics technology to 3DMark in the form of a new NVIDIA DLSS feature test. This new test is available now in 3DMark Advanced and Professional Editions.

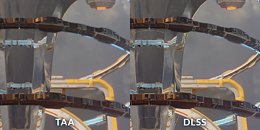

3DMark feature tests are specialized tests for specific technologies. The NVIDIA DLSS feature test helps you compare performance and image quality with and without DLSS processing. The test is based on the 3DMark Port Royal ray tracing benchmark. Like many games, Port Royal uses temporal anti-aliasing (TAA). TAA is a popular, state-of-the-art technique, but it can blur the image and lose fine detail. DLSS (Deep Learning Super Sampling) is an NVIDIA RTX technology that uses deep learning to improve game performance while maintaining visual quality.

Comparing performance with the NVIDIA DLSS feature test

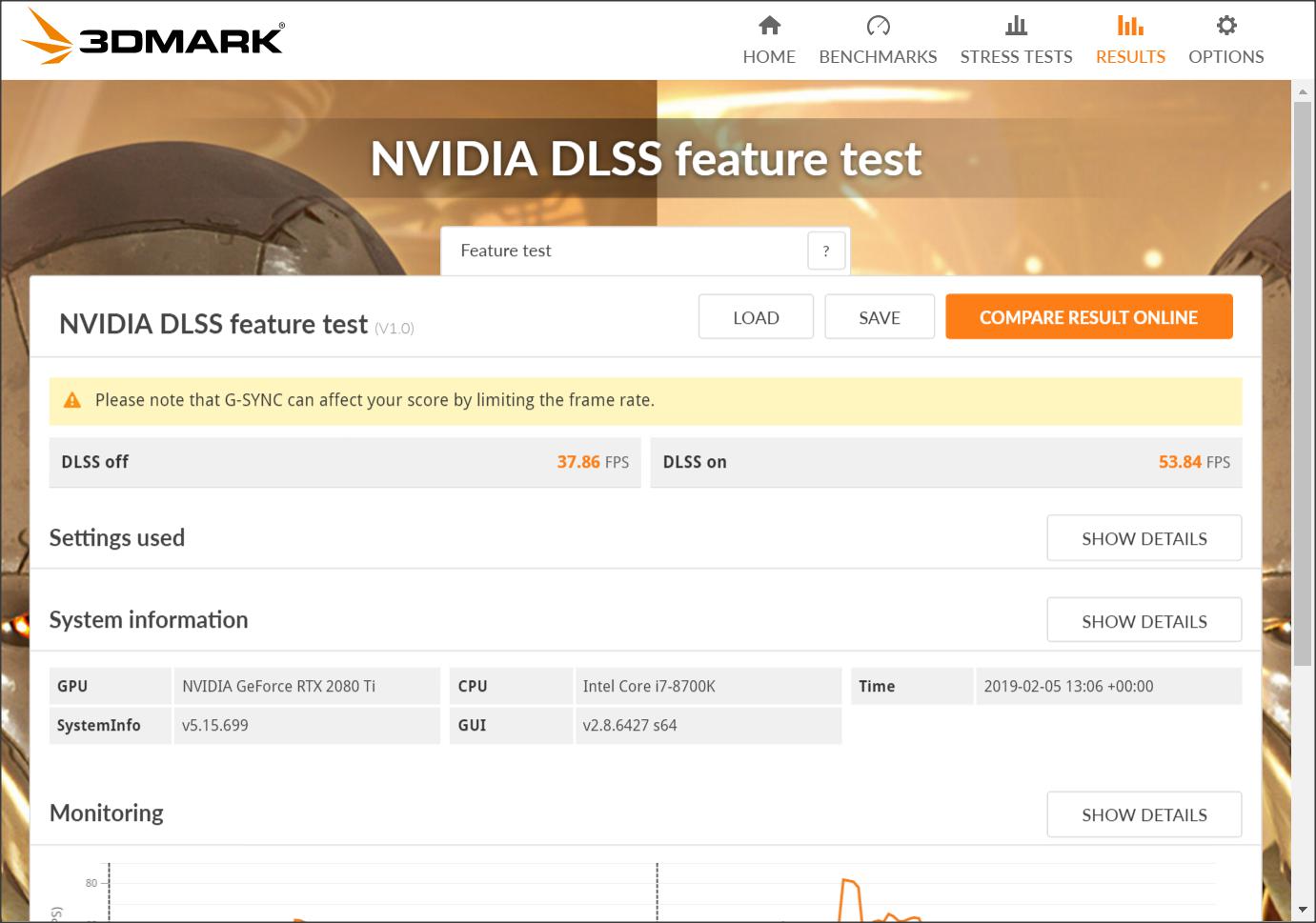

The NVIDIA DLSS feature test runs in two passes. The first pass renders Port Royal with DLSS disabled to measure baseline performance. The second pass renders Port Royal at a lower resolution, then uses DLSS processing to create frames at the output resolution. The result screen reports the frame rate for each pass.
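Because the result screen reports one frame rate per pass, the performance difference is simple arithmetic. Here is a minimal Python sketch, assuming you copy the two frame rates from the result screen by hand. The function name `dlss_speedup` and the sample numbers are hypothetical and are not part of 3DMark or its results.

```python
def dlss_speedup(baseline_fps: float, dlss_fps: float) -> float:
    """Percentage change of the DLSS pass frame rate relative to the baseline pass.

    Hypothetical helper: 3DMark does not provide this function.
    """
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    # Ratio of the two passes, expressed as a percentage gain (or loss).
    return (dlss_fps / baseline_fps - 1.0) * 100.0


if __name__ == "__main__":
    # Made-up frame rates for illustration only, not real 3DMark scores.
    baseline = 30.0   # pass 1: DLSS disabled
    with_dlss = 45.0  # pass 2: lower render resolution + DLSS
    print(f"DLSS on: {dlss_speedup(baseline, with_dlss):+.1f}% vs. DLSS off")
```

A positive value means the DLSS pass ran faster than the baseline pass. The sketch covers only frame rate, not the image-quality side of the comparison.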

DLSS is a proprietary NVIDIA technology, so naturally, you need an NVIDIA graphics card that supports DLSS, such as a GeForce RTX series card, a Quadro RTX series card, or the TITAN RTX, to run the test. You must also have the latest NVIDIA drivers for your graphics card. You can find more details in the 3DMark technical guide.

DLSS uses a pre-trained neural network to find jagged, aliased edges in an image and then adjust the colors of the affected pixels to create smoother edges and improved image quality. The result is a clear, crisp image with quality similar to traditional rendering but with higher performance.

3DMark is 85% off in the Steam Lunar Sale

We're celebrating Chinese New Year, and the sixth anniversary of 3DMark's original release, with a special week-long sale.

From now until February 11, 3DMark Advanced Edition is 85% off, only $4.49 (USD), on Steam and our website.

3DMark Advanced Edition owners who purchased 3DMark before January 8, 2019 will need to buy the Port Royal upgrade DLC to unlock the DLSS test. The upgrade costs $2.99 (USD). You can find out more about 3DMark updates and upgrades here.

3DMark Professional Edition

The NVIDIA DLSS feature test is available as a free update for 3DMark Professional Edition customers with a valid annual license. Customers with an older, perpetual Professional Edition license will need to purchase an annual license to unlock Port Royal.

View at TechPowerUp Main Site
