Power costs are quite high in many countries, so I thought I would share my experience measuring power draw with a wall-plug meter. The PC has a 5600X (76 W limit), a 3060 Ti (200 W) and 3 case fans. Motherboard, SSD, fans etc. draw 30-40 W, the CPU typically 30-60 W in games, and the GPU 200 W when uncapped, stock and GPU-limited. The tweaked setting uses an undervolt (UV) on the GPU (1620 MHz @ 731 mV) and a 60 fps limit with RTSS:

Age 4 1080p high/highest:

Stock 280-300W (80-110fps)

Tweaked 130-140W (60 locked)

Cyberpunk 1080p high/highest dlss performance:

Stock 290-320W (90-140fps)

Tweaked 135-150W (60fps locked)

The GPU undervolt accounts for 80 W of the savings when GPU-bound; the rest is due to the fps cap. If you are satisfied with 60 fps and game 3 hours a day, this can save you up to 200 kWh a year (a kWh typically costs 0.1-0.5 USD depending on where you live), if that is something you care about. Maybe more if you live in a hot climate and can reduce AC usage, less if you live in a cold climate and use your PC as a heater.

Further improvements could be running 1560 MHz @ 700 mV on the GPU. This drops max power draw to 100 W vs 120 W at 731 mV on my GPU, but the VRAM downclocks to 10 GHz, so performance drops to 80% of stock vs 90% at 731 mV. A lower power limit on the CPU can also help: a 45 W limit makes all-core run at 3.7 GHz vs 4.6 GHz at 76 W. Multi-core performance is around 15-20% lower, but single-core is the same.
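For anyone who wants to plug in their own numbers, the yearly savings estimate above can be sketched roughly like this. The function name and the choice of range midpoints are just illustrative assumptions; the wattages, the 3 hours a day and the 0.1-0.5 USD price range are the ones from the post.

```python
def annual_kwh_saved(stock_watts, tweaked_watts, hours_per_day, days_per_year=365):
    """Energy saved per year in kWh when dropping from stock to tweaked wall draw."""
    saved_watts = stock_watts - tweaked_watts
    return saved_watts * hours_per_day * days_per_year / 1000


# Wall readings from the post, using rough midpoints of the measured ranges
# (picking midpoints is an assumption, not something that was measured).
games = {
    "Age 4": (290, 135),         # stock 280-300 W, tweaked 130-140 W
    "Cyberpunk": (305, 142.5),   # stock 290-320 W, tweaked 135-150 W
}

for name, (stock, tweaked) in games.items():
    kwh = annual_kwh_saved(stock, tweaked, hours_per_day=3)
    # Price range from the post: 0.1-0.5 USD per kWh.
    print(f"{name}: {kwh:.0f} kWh/year, {kwh * 0.1:.0f}-{kwh * 0.5:.0f} USD/year")
```

Taking the widest gap in the measurements (320 W stock vs 135 W tweaked) gives just over 200 kWh a year, which is where the "up to 200 kWh" figure comes from.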

I already loved you for the RAM timing help you gave me, but finding that you also think the way I do about useless wasted power in gaming seals the deal.

My system doesn't even break 400 W, monitor included, with a 3090. I always get accused of lying or faking the numbers, since people just max every clock speed and uncap FPS with vsync off.

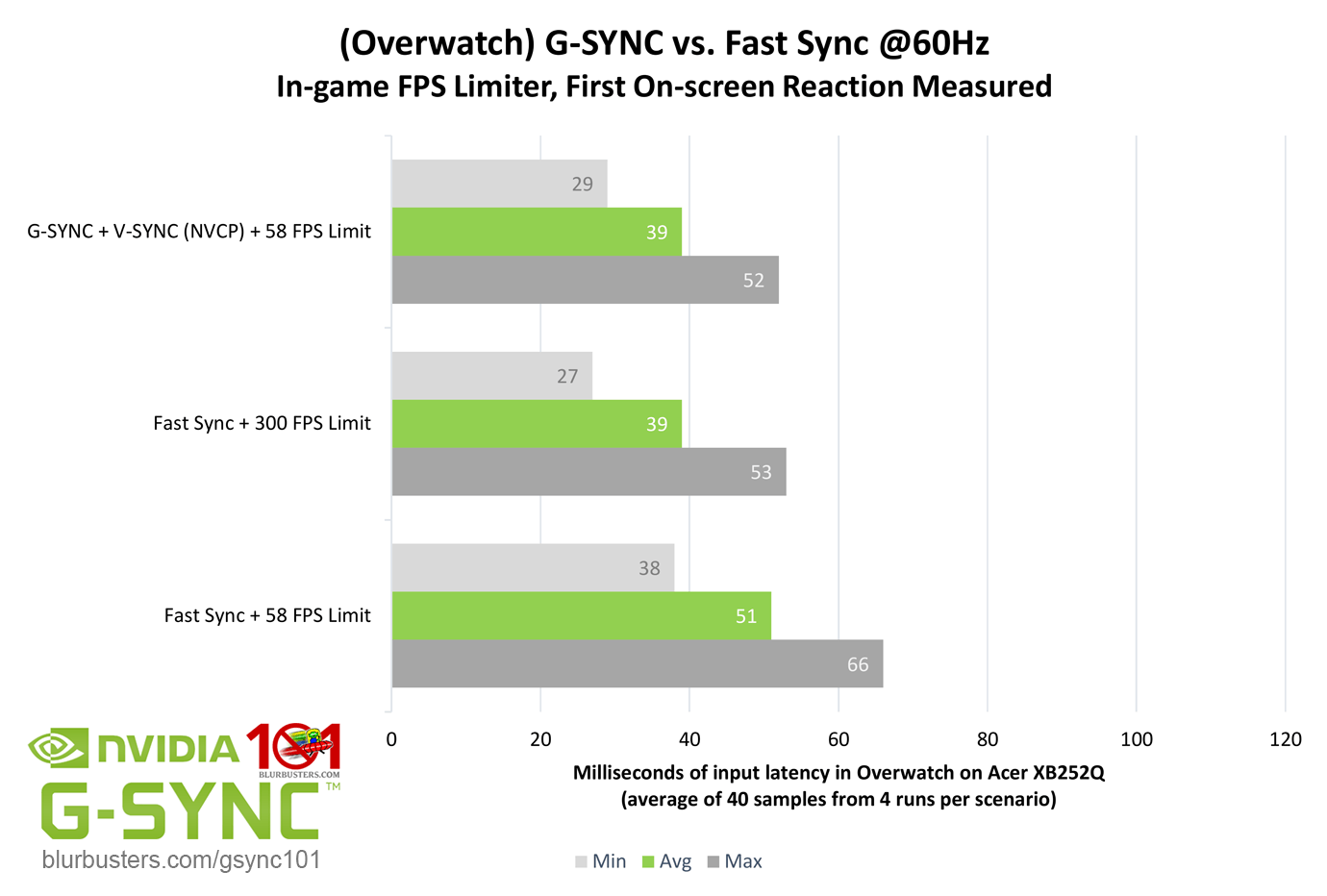

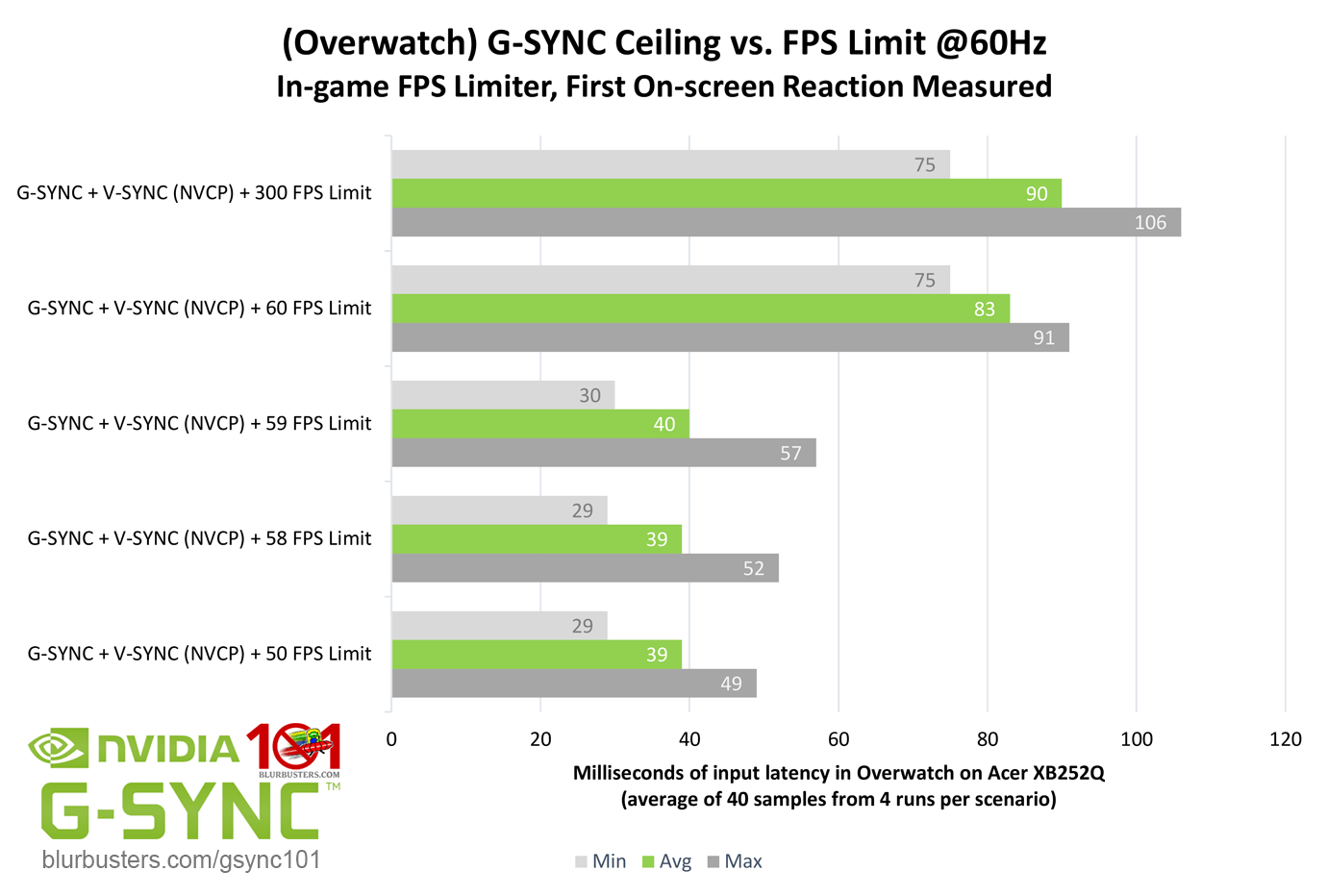

Always cap your FPS to at least two FPS below your refresh to prevent micro stutter and reduce 99% latency spikes. Never to your refresh rate.

IMO, if you are that concerned about saving energy, set your AC thermostat to 75°F instead of 72° and close the drapes and blinds. Set your furnace thermostat to 68°F instead of 72° and wear a sweater. Turn off the lights when you leave a room. Know what you want before you open the fridge door.

What I find puzzling is how some folks will spend a lot more money for a device that is only 3-5% more efficient. Computer power supplies are a perfect example. It takes many years to make up the difference in cost between a Titanium (94% efficient at 50% load) and a Gold (90% at 50% load) certified PSU. Yet people buy the Titanium, often in part because they believe they are helping the planet. The reality is that, in many cases, it takes a lot more energy to manufacture those more efficient products.
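The payback argument can be put into rough numbers. This is only a sketch: the 94% and 90% efficiencies are the ones quoted above, while the 375 W load, the 3 hours a day, the 0.3 USD per kWh and especially the 100 USD price premium are hypothetical values picked for illustration.

```python
def wall_watts(dc_load_watts, efficiency):
    """Power drawn from the wall for a given DC load and PSU efficiency (0-1)."""
    return dc_load_watts / efficiency


def payback_years(dc_load_watts, hours_per_day, usd_per_kwh, price_premium_usd,
                  eff_low=0.90, eff_high=0.94):
    """Years until the more efficient PSU has paid back its extra purchase price."""
    saved_watts = (wall_watts(dc_load_watts, eff_low)
                   - wall_watts(dc_load_watts, eff_high))
    saved_kwh_per_year = saved_watts * hours_per_day * 365 / 1000
    return price_premium_usd / (saved_kwh_per_year * usd_per_kwh)


# Hypothetical case: 375 W DC load (50% of a 750 W unit), 3 h/day,
# 0.3 USD/kWh, Titanium costing 100 USD more than Gold.
years = payback_years(375, hours_per_day=3, usd_per_kwh=0.3, price_premium_usd=100)
print(f"Payback time: {years:.1f} years")
```

With those assumed inputs the difference at the wall is under 20 W and the payback time comes out well beyond a decade. Heavier daily use or pricier power shortens it proportionally.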

Bill (woops, the tags didn't work), that advice might seem super logical to you, but as someone from a very different climate it just uh... it won't work here. That sort of advice is extremely regional.

The rest of what you said is fine, I just laughed at the idea of an Aussie house with a furnace, or that we even need lights for 80% of the year as opposed to the sun being visible through solid sheets of lead.

Second part:

Because the Titanium parts often have longer warranties, and run colder due to that efficiency. My HX750i had a 10 year warranty and the fan doesn't even turn on when I game - I paid extra for *that*

I have a grandson who does the same. My points remain the same. The computer is still spending more time closer to idle consumption rates than maxed out rates.

(Yay this one worked)

Not with games - seriously, you can fire up games like WoW and minimise them and they'll still max out a lot of systems even if you're AFK, unless you modify settings to lower the FPS when they're in the background.

With MMOs and other social games this happens a lot - some of the people I play SC2 with literally leave their systems on and in game 24/7 unless servers are down for updates.

The FB groups I'm in have people constantly asking about bottlenecks with modern Intel systems at 100% CPU usage in their esports games.

Shit, even Battle.net adds 20-30 W on most systems just from being open, since it fires up 3D clocks to display the stupid animations.

In my examples above you save over 40% power on the GPU alone when gaming and still get 90% of stock performance. Gaming 3 hours a day is not that much; some friends of mine spend 8 hours on WoW. If your power costs 0.3 USD per kWh, this becomes 150-200 USD in power cost, plus maybe AC cooling cost in a warm climate, extra wear on equipment, more noise etc.

So... uhhh... we pay 23 cents per kWh.

The US has some really cheap electricity thanks to nuclear power.

Why would you buy something and not use it? That surprise of yours makes no sense. A well optimized PC should see the gpu at 99% most of the time.

That's like buying a big SUV to go to the coffee shop

The only PC at 99% GPU all the time, is a PC with a too weak GPU.

I'm always surprised by people with the view you have on things.

Why are you not draining your phone to 0% battery? Why the hell did you let the screen go off?

Why is your car not in first gear at 10K RPM at all times, especially stopped at traffic lights?

Because it's stupid to max something out for no reason. You gain nothing by throwing hundreds of watts at an FPS gain you can't notice, and you're more likely to run into performance issues from overheating components, and degradation years sooner, than if you just ran things at a reasonable setting in the first place.

I don't underclock or undervolt anything. My GPU is OC'd as temps are pretty low when gaming, same for the CPU. It just shows how much better a custom loop is than air cooling. Even though the temps are rising here now, my temps are still very acceptable.

As I have said before, I'm not fussed about power use, not gonna cry about it. If you want a powerful gaming rig accept it, or cripple it to lower power use/temps. I don't have 3 rigs or mine as some do, maybe it's them that should change their behaviour if they don't like high power use.

My GPU is a 1080 Ti, monitor is 1440p/165 Hz, and I go for the highest FPS I can get in games. 60fps=bleeurgh

Aww man no wonder we disagreed on GPU undervolting in other threads - the 1080Ti is one of the best power efficiency cards out there. My regular GTX 1080 is a fucking champ, but even overclocked its total wattage was *nothing* like the madness 3080 and 3090 suffer from.

It was seriously an epiphany moment to compare my 1080 experience with your 1080 Ti, vs the 3080 I had that died and now the 3090.

You can overclock and add 30W for 5% gains.

Me? My card's already overclocked out of the box to add 100 W for those 5% gains, and there isn't enough power for the entire board already - I can't OC the VRAM without power starving the GPU, on a 350 W BIOS. If I overclock the GPU, the VRAM clocks go down to power the GPU. It's madness.

You've simply missed out on the joy of finding that the new cards aren't as good in that respect - and we want to cut the cards back to be just as power efficient as what you're enjoying.

Seriously - the 20 and 30 series cards have so many limits and throttles. If I overclock my VRAM, the GPU gets power starved and tanks to 1.2 GHz. Underclocking one part leaves more wattage for the board to use elsewhere - and yes, that's totally F'ed up, but it's how the high end cards are now.

Maxing out at 50% means that idling is inefficient. That's just too much PSU wattage for your system and money wasted.

Whaaaaa

No way. My PSU is rated for fan off until 300 W, and the loss from being off the efficiency sweet spot you're talking about would be in the single digits.

Just because a sweet spot exists on a curve doesn't mean that moving from that spot has to be a big problem

From 150 W to 800 W the efficiency might as well be the same, and the loss from being at 100 W is small in absolute terms because... a higher percentage of a small number is still small.
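To put the "percentage of a small number" point in watts, here is a quick sketch. The 85% and 92% efficiency figures are assumed for illustration only and are not taken from any specific PSU's measured curve.

```python
def psu_loss_watts(dc_load_watts, efficiency):
    """Heat lost inside the PSU: wall draw minus DC output."""
    return dc_load_watts / efficiency - dc_load_watts


# Assumed efficiencies, for illustration only:
# 85% at a light 100 W load vs 92% if the same load sat at the sweet spot.
light_load = psu_loss_watts(100, 0.85)
sweet_spot = psu_loss_watts(100, 0.92)
print(f"Loss at 100 W, 85% efficient: {light_load:.1f} W")
print(f"Loss at 100 W, 92% efficient: {sweet_spot:.1f} W")
print(f"Extra loss from being off the sweet spot: {light_load - sweet_spot:.1f} W")
```

Even with a 7 point efficiency drop, the extra loss at a 100 W load stays under 10 W, which is the single-digit figure mentioned above.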