OpenAI's chaos does not add up - Built Not Found

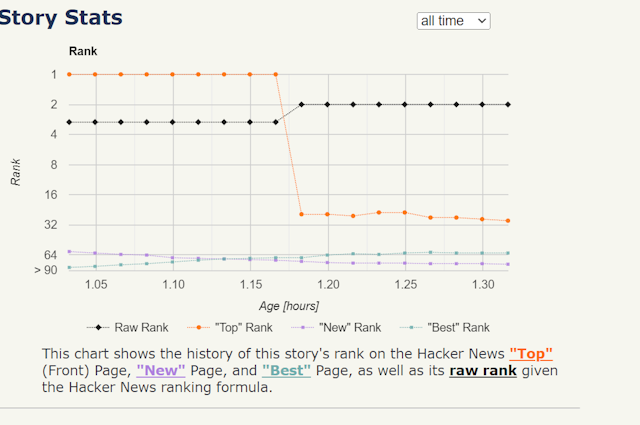

Update: this post has been instantly demoted from #1 to #26 on the HN front page :) Hmm.

This crap just doesn't add up at all. At this point I'm just trying to make sense of everything.

How to acquire a very valuable company for next to nothing 101. I mean, I don't know, but there are really two possible scenarios:

1- The OpenAI board is super super dumb and decided to commit company suicide by overplaying its hand and giving its most valuable asset - the talent working there - away to a willing competitor.

2- Microsoft engaged in some very anti-competitive, shady and unethical behaviour by negotiating special deals with OpenAI talent, and the board overreacted and made Microsoft's job even easier.

Either way, it looks like OpenAI is finished. The special thing they had was the talent working there. The tech is not particularly special - a variation of Google's transformer model that everyone else can replicate and is replicating - and they simply have no business model to commercialize their LLM and won't be able to support the costs of running the service when Microsoft pulls out.