T0@st

News Editor



AMD issued briefing material earlier this month teasing a reveal of its next-generation FidelityFX technology at GDC 2023. True to form, the company has today announced that FidelityFX Super Resolution 3.0 is on the way. AMD is playing catch-up with rival NVIDIA, which has already released version 3.0 of its DLSS graphics enhancement and upscaling technology in a small number of games. AMD says that FSR 3.0 is at an early stage of development, but it hopes that its work on temporal upscaling will bring a number of improvements over the previous generation.

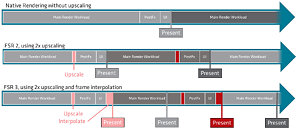

The engineering team is aiming for a 2x frame-rate improvement over the existing FSR 2.0 technique, which AMD says is already capable of "computing more pixels than we have samples in the current frame." FSR 3.0 will generate still more pixels by inserting interpolated frames between rendered ones. AMD expects to reach a point in development where at least one sample is created for every interpolated pixel. The team also wants to avoid feedback loops: an interpolated frame is shown only once, so any interpolation artifact persists for a single frame.
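To make the frame-interpolation idea concrete, here is a minimal Python sketch, assuming one synthesized frame is placed halfway between each pair of rendered frames. It is a generic illustration, not AMD's implementation; the `Frame` type and the `interpolate_stream` function are hypothetical. It shows why the number of presented frames approaches 2x the rendered count, and how keeping interpolated frames out of the temporal history prevents a feedback loop.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Frame:
    time: float         # timestamp, in units of the render interval
    interpolated: bool  # True if synthesized rather than rendered


def interpolate_stream(rendered: List[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Hypothetical frame pacer: returns (frames shown, temporal history).

    One interpolated frame is inserted between each pair of consecutive
    rendered frames. It can only be built once the *next* rendered frame
    exists, because interpolation needs both endpoints.
    """
    shown: List[Frame] = []
    history: List[Frame] = []  # only real, rendered frames feed later frames
    for i, frame in enumerate(rendered):
        if i > 0:
            prev = rendered[i - 1]
            # Synthesized halfway between prev and frame; displayed once and
            # never appended to history, so its artifacts cannot propagate.
            shown.append(Frame(time=(prev.time + frame.time) / 2, interpolated=True))
        shown.append(frame)
        history.append(frame)
    return shown, history


if __name__ == "__main__":
    rendered = [Frame(time=float(t), interpolated=False) for t in range(4)]
    shown, history = interpolate_stream(rendered)
    print([f.time for f in shown])     # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
    print(len(shown) / len(rendered))  # 1.75
```

For N rendered frames the sketch shows 2N - 1 frames, so the presented frame rate tends toward twice the render rate as N grows. Because each interpolated frame needs the following rendered frame, that rendered frame reaches the screen later than it otherwise would, which is one reason frame interpolation tends to add latency.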

However, AMD noted several potential obstacles. Relying on color clamping to correct the color of outdated samples is not entirely feasible. Non-linear motion is difficult to interpolate from 2D screen-space motion vectors. And because final frames are interpolated, all post-processing, including the user interface in the foreground, must be interpolated as well. One of AMD's diagrams shows how native rendering stacks up against FSR 2.0 and 3.0.
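The difficulty with non-linear motion can be shown with a toy example, assuming an object that moves along a circular arc between two rendered frames. A single 2D screen-space motion vector only records the straight line from start to end point, so halving it places the interpolated position on that chord rather than on the arc. This is a generic geometric sketch, not AMD's method; the function names `on_circle` and `midpoint_error` are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def on_circle(radius: float, angle: float) -> Vec2:
    """Screen-space position of a point on a circle centred at the origin."""
    return (radius * math.cos(angle), radius * math.sin(angle))


def midpoint_error(radius: float, sweep: float) -> float:
    """Distance between the linearly interpolated midpoint and the true one.

    The object rotates through `sweep` radians between two rendered frames.
    """
    p0 = on_circle(radius, 0.0)
    p1 = on_circle(radius, sweep)
    motion = (p1[0] - p0[0], p1[1] - p0[1])                     # 2D motion vector p0 -> p1
    guess = (p0[0] + 0.5 * motion[0], p0[1] + 0.5 * motion[1])  # linear midpoint on the chord
    truth = on_circle(radius, sweep / 2)                        # where the object really is
    return math.hypot(guess[0] - truth[0], guess[1] - truth[1])


if __name__ == "__main__":
    # A 100-pixel-radius turn of 90 degrees between rendered frames
    print(round(midpoint_error(100.0, math.pi / 2), 2))  # 29.29
    # A smaller turn gives a smaller error
    print(midpoint_error(100.0, math.pi / 2) > midpoint_error(100.0, math.pi / 8))  # True
```

The error works out to r(1 - cos(θ/2)) for radius r and sweep θ, so it shrinks toward zero for small turns but grows quickly for fast rotation.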

AMD says FSR 3.0 will deliver a smoother overall gaming experience while letting developers spend more GPU time on visual quality. Latency reduction is a key focus area: AMD has gamers in mind and treats high frame rates and the lowest achievable latency as basic requirements. The engineers are also aiming for a smooth upgrade path for titles that currently use FSR 2.0.

View at TechPowerUp Main Site | Source
