- Joined

- Aug 19, 2017

- Messages

- 3,057 (1.08/day)

AMD has launched its RDNA 2 based graphics cards, codenamed Navi 21. These GPUs are set to compete with NVIDIA's Ampere offerings, with the lineup covering the Radeon RX 6800, RX 6800 XT, and RX 6900 XT graphics cards. Until now, we have had reviews of the first two, but not of the Radeon RX 6900 XT. That is because the card launches later, on December 8th, just a few days from now. As a reminder, the Radeon RX 6900 XT GPU is a Navi 21 XTX model with 80 Compute Units, for a total of 5120 Stream Processors. The GPU, which carries 128 MB of Infinity Cache, connects to 16 GB of GDDR6 memory over a 256-bit bus. When it comes to frequencies, it has a base clock of 1825 MHz and a boost clock of 2250 MHz.
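As a quick sanity check on the specifications above, here is a minimal sketch that derives the Stream Processors per Compute Unit and a theoretical peak FP32 figure at the boost clock. The helper names are made up for illustration, and the peak figure assumes 2 FLOPs per Stream Processor per clock (one fused multiply-add), which is a common rule of thumb and not something stated in the post.

```python
# Figures taken from the post above.
COMPUTE_UNITS = 80
STREAM_PROCESSORS = 5120
BASE_MHZ = 1825
BOOST_MHZ = 2250


def sp_per_cu(stream_processors: int, compute_units: int) -> int:
    """Stream Processors per Compute Unit (hypothetical helper)."""
    return stream_processors // compute_units


def peak_fp32_tflops(stream_processors: int, clock_mhz: float,
                     flops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    ASSUMPTION: each Stream Processor retires one fused multiply-add
    (2 FLOPs) per clock. Real workloads rarely sustain this.
    """
    return stream_processors * flops_per_clock * clock_mhz * 1e6 / 1e12


per_cu = sp_per_cu(STREAM_PROCESSORS, COMPUTE_UNITS)
boost_tflops = peak_fp32_tflops(STREAM_PROCESSORS, BOOST_MHZ)
base_tflops = peak_fp32_tflops(STREAM_PROCESSORS, BASE_MHZ)

print(f"{per_cu} Stream Processors per CU")
print(f"Peak FP32: {base_tflops:.2f} TFLOPS (base), {boost_tflops:.2f} TFLOPS (boost)")
```

These are paper numbers only; they say nothing about how the card behaves in an actual benchmark run.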

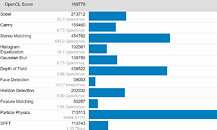

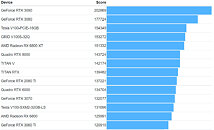

Today, in a GeekBench 5 submission, we get to see the first benchmarks of AMD's top-end Radeon RX 6900 XT graphics card. Running the OpenCL test suite, the card was paired with AMD's Ryzen 9 5950X 16C/32T CPU. The card completed the OpenCL benchmark with a score of 169779 points. That makes the card 12% faster than the RX 6800 XT, but still slower than the competing NVIDIA GeForce RTX 3080, which scores 177724 points. However, we need to wait for a few more benchmarks to appear before jumping to any conclusions, including the TechPowerUp review, which is expected to arrive once the NDA lifts. Below, you can compare the score to other GPUs in the GeekBench 5 OpenCL database.
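To put the two quoted scores in perspective, here is a small sketch of the percentage arithmetic. The function name is hypothetical, and the RX 6800 XT figure it prints is only back-calculated from the rounded "12% faster" claim, so treat it as an estimate and not as a score from the GeekBench database.

```python
# Scores quoted in the post (GeekBench 5 OpenCL).
RX_6900_XT = 169779
RTX_3080 = 177724


def percent_faster(score_a: float, score_b: float) -> float:
    """How much faster score_a is than score_b, in percent."""
    return (score_a / score_b - 1) * 100


# Lead of the RTX 3080 over the RX 6900 XT in this single submission.
rtx_lead = percent_faster(RTX_3080, RX_6900_XT)

# ASSUMPTION: "12% faster" is taken at face value to estimate the
# RX 6800 XT score; the source does not give that score directly.
implied_rx_6800_xt = RX_6900_XT / 1.12

print(f"RTX 3080 leads the RX 6900 XT by about {rtx_lead:.1f}%")
print(f"Implied RX 6800 XT score: roughly {implied_rx_6800_xt:.0f}")
```

A single submission can vary with drivers and system configuration, so a gap of this size should not be read as a final verdict.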

View at TechPowerUp Main Site
