It's unlikely that MS would favor AMD over NVIDIA "just because". It is known that AMD generally performs better in DX12, but NVIDIA has a much higher market share. Unless MS wants to create a more even balance by favoring AMD. Then again, MS games don't sell in numbers as high as other games, so... not really sure.

Actually, it's a common misperception that nVidia has a 'higher market share'. Yes, on the PC platform alone, nVidia has the higher market share, but people forget that the PS4 and Xbox consoles are really just x86 PCs with some custom hardware tweaks. If you look at it THAT way, you can see that AMD Radeons likely power >60% of the x86 gaming market. nVidia is actually slowly getting squeezed out of the x86 gaming market.

Here's a link from 2016 showing AMD with 56% of the x86+GPU market--I'm projecting that it's higher now, since consoles generally outsell PC graphics cards (if anyone has anything more recent, please post):

https://www.pcgamesn.com/amd/57-per-cent-gamers-on-radeon

Game developers know this, and they are in fact migrating their code over to Radeon-optimized code paths. And before anybody jumps on this and says 'but nVidia customers spend more money on hardware', it's important to understand that to a game developer, some millionaire with two GTX 1080 Tis is worth the same $60 as a 12-year-old with an Xbox One. They're both spending $60 on the latest World of Battlefield 7 (yes, made-up game) title, so in the end, the developer is looking at which GPU is in the most target platforms.

If I'm Bethesda, for example, I realize that over 60% of the x86 machines with sufficiently powerful GPUs (Xbox, PlayStation, PC) that I'd like to put my game on are powered by AMD Radeons. It's only logical, then, that I'm going to build the game engine to run very smoothly on Radeons. All Radeons since the HD 7000 series in 2011 have hardware schedulers, which make them capable of efficiently scheduling and feeding the compute/rendering pipelines from multiple CPU cores right in the GPU hardware. This makes them DX12-optimized, whereas nVidia GPUs, even up to today's 1000 series, still don't have this built-in hardware.

The extra hardware does increase the Radeon's power draw somewhat, and nVidia has made much of how their GPUs are more 'power efficient', but in reality, they're simply missing the additional hardware schedulers, which, if included in their design, would probably put them on an even level with AMD's power consumption. It's a bit like saying my car is slightly more fuel efficient than yours because I removed the back seats. Sure, you will use a little less gas, but you can't carry any passengers in the back, so it's a dubious 'advantage' you're pushing there.

Those who bought "future proof" AMD cards back then are still waiting for them to take off, basing their anecdotes on games which are outliers rather than the norm, and concluding that every game can perform like that on this (superior) hardware.

Meanwhile, in the real world, the competition looks better than ever.

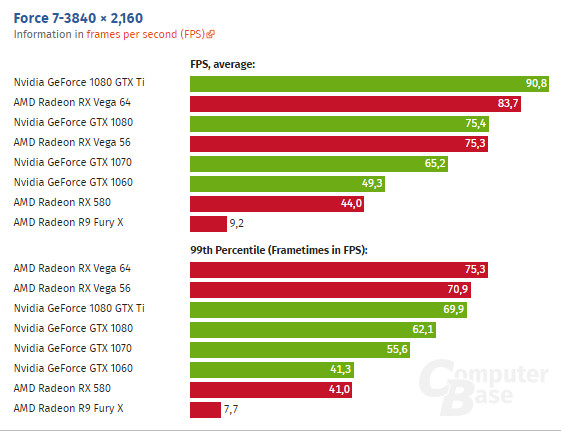

Well, I bought a Radeon R9 290 for $259 Canadian back in December 2013. It has played every game I like extremely well, and it might just run upcoming fully optimized DX12 titles like Forza 7 as well as or better than an $800 GTX 1080 Ti in 99th-percentile frame times, so I'd hardly call that a fail. In fact, when the custom-cooled Vega cards hit, I'm probably going to upgrade to one of those in my own rig and put the R9 290 in my HTPC, because it'll probably still be pumping out great performance for a couple more years to come.
