Well, I just can't believe that a 7nm AMD CPU loses to a 14nm Intel CPU?!

Intel wins.

I hope TechPowerUp runs the same test again when Intel releases Comet Lake and, of course, when Intel's 10nm Ice Lake is out.

Again, excellent test, and thanks!


AMD Ryzen 9 3900X, SMT on vs SMT off, vs Intel 9900K

- Thread starter W1zzard

- Start date

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

Overclocking potential with it disabled would have been a great value add to this article.

Thanks for the testing as is!


- Joined

- Nov 24, 2017

- Messages

- 853 (0.30/day)

- Location

- Asia

| Processor | Intel Core i5 4590 |

|---|---|

| Motherboard | Gigabyte Z97x Gaming 3 |

| Cooling | Intel Stock Cooler |

| Memory | 8GiB(2x4GiB) DDR3-1600 [800MHz] |

| Video Card(s) | XFX RX 560D 4GiB |

| Storage | Transcend SSD370S 128GB; Toshiba DT01ACA100 1TB HDD |

| Display(s) | Samsung S20D300 20" 768p TN |

| Case | Cooler Master MasterBox E501L |

| Audio Device(s) | Realtek ALC1150 |

| Power Supply | Corsair VS450 |

| Mouse | A4Tech N-70FX |

| Software | Windows 10 Pro |

| Benchmark Scores | BaseMark GPU : 250 Point in HD 4600 |

> 720p, like 1080p, 480p and 1440p, is a standard.

But I have not seen any monitor with that resolution. My 20-inch monitor is 768p, every 19- and 20-inch monitor I have seen is 768p, and people game at that resolution, not 720p. I game on my monitor at 768p, not 720p.

- Joined

- Dec 18, 2015

- Messages

- 142 (0.04/day)

| System Name | Avell old monster - Workstation T1 - HTPC |

|---|---|

| Processor | i7-3630QM\i7-5960x\Ryzen 3 2200G |

| Cooling | Stock. |

| Memory | 2x4Gb @ 1600Mhz |

| Video Card(s) | HD 7970M \ EVGA GTX 980\ Vega 8 |

| Storage | SSD Sandisk Ultra li - 480 GB + 1 TB 5400 RPM WD - 960gb SDD + 2TB HDD |

Yes, you can't believe it because it's not true.

Yes, win in some games, lose in others... also, differences below 5% are within the error margin.

I think that keeping the same architecture for half a decade has had some advantages for the blue side: stability and great optimization.
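As a rough illustration of the 5% rule of thumb above, here is a minimal Python sketch. The function name `within_margin` and the choice of the faster result as the baseline are assumptions made for this example, not something taken from the review.

```python
def within_margin(fps_a: float, fps_b: float, margin: float = 0.05) -> bool:
    """Return True when the relative gap between two results is below the margin.

    The gap is measured against the faster of the two results (an assumption
    of this sketch; other baselines are equally defensible).
    """
    baseline = max(fps_a, fps_b)
    if baseline <= 0:
        raise ValueError("FPS values must be positive")
    return abs(fps_a - fps_b) / baseline < margin


if __name__ == "__main__":
    print(within_margin(100.0, 97.0))  # 3% gap, treated as noise
    print(within_margin(100.0, 90.0))  # 10% gap, treated as a real difference
```

With this check, a 100 vs 97 FPS result would be treated as a tie, while 100 vs 90 FPS would count as a real difference.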

- Joined

- May 8, 2018

- Messages

- 1,609 (0.61/day)

- Location

- London, UK

> But I have not seen any monitor with that resolution. My 20-inch monitor is 768p, every 19- and 20-inch monitor I have seen is 768p, and people game at that resolution, not 720p. I game on my monitor at 768p, not 720p.

Video industry standards are different from monitor resolution standards. For example, 1600p, 1200p, 1024p and 768p were the most used monitor resolutions. None of them are standard, but I understand your point.

I could agree that in games like CS:GO, which is played by 450,000 players on average (according to Steam), 768p is widely used.

https://csgopedia.com/csgo-pro-setups/ (on mobile, watch in landscape mode; rotate the screen to get each player's specs)

Since its release, the 9400F is still the best cost-benefit modern CPU.

See, this is what I mean: I didn't see the media giving attention to this little chip! I'm very surprised by these results and impressed by the 9400F. 10% slower than the 9900K in gaming? Damn. A 9400F + GTX 1660 Ti seems a killer combo right now for gaming.

- Joined

- Jun 1, 2011

- Messages

- 4,988 (0.96/day)

- Location

- in a van down by the river

| Processor | faster at instructions than yours |

|---|---|

| Motherboard | more nurturing than yours |

| Cooling | frostier than yours |

| Memory | superior scheduling & haphazardly entry than yours |

| Video Card(s) | better rasterization than yours |

| Storage | more ample than yours |

| Display(s) | increased pixels than yours |

| Case | fancier than yours |

| Audio Device(s) | further audible than yours |

| Power Supply | additional amps x volts than yours |

| Mouse | without as much gnawing as yours |

| Keyboard | less clicky than yours |

| VR HMD | not as odd looking as yours |

| Software | extra mushier than yours |

| Benchmark Scores | up yours |

> But I have not seen any monitor with that resolution. My 20-inch monitor is 768p, every 19- and 20-inch monitor I have seen is 768p, and people game at that resolution, not 720p. I game on my monitor at 768p, not 720p.

It's more about removing the GPU from the equation than posting results at a resolution that may be slightly more popular.

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

> Why would someone recommend an Intel/Nvidia setup in an AMD SMT on/off thread?!

Why would anyone care?

> It's more about removing the GPU from the equation than posting results at a resolution that may be slightly more popular.

This. Though I disagree with using an artificially low resolution to exaggerate a difference which doesn't scale up.

Sorry if my question is silly but:

Is it possible to switch on/off SMT on the fly, without restarting or going to BIOS?

I'm asking because, if the answer is yes, then maybe it's possible to make some background program with a small database of game, CPU and SMT option (on/off) that applies the right option when or before every game is started? Or would it be possible to add such an option to the BIOS? Maybe that's a question for AMD or the BIOS makers.

In this case you could always have the best option chosen for every game, and average gaming performance would be even 2-3% higher.
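The lookup half of that idea can be sketched in a few lines of Python. This is only an illustration: the table name `SMT_PREFERENCE`, the function `preferred_smt` and the executable names are hypothetical placeholders, not measured results, and the sketch does not switch SMT itself.

```python
from typing import Dict

# Hypothetical per-game preferences. The executable names are placeholders,
# not benchmark results; True means "prefer SMT on".
SMT_PREFERENCE: Dict[str, bool] = {
    "game_a.exe": True,
    "game_b.exe": False,
}


def preferred_smt(executable: str, default: bool = True) -> bool:
    """Look up the preferred SMT setting for a game, falling back to a default."""
    return SMT_PREFERENCE.get(executable.lower(), default)


if __name__ == "__main__":
    for name in ("game_a.exe", "GAME_B.EXE", "unknown.exe"):
        print(name, "->", "SMT on" if preferred_smt(name) else "SMT off")
```

A launcher built around such a table would still need some way to act on the answer, for example a firmware setting before boot or a per-process CPU affinity workaround.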


- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

> Is it possible to switch on/off SMT on the fly, without restarting or going to BIOS?

AFAIK, no.

- Joined

- Sep 15, 2007

- Messages

- 3,956 (0.61/day)

| Processor | OCed 5800X3D |

|---|---|

| Motherboard | Asucks C6H |

| Cooling | Air |

| Memory | 32GB |

| Video Card(s) | OCed 9070XT red devil |

| Storage | NVMees |

| Display(s) | 32" Dull curved 1440 |

| Case | Freebie glass idk |

| Audio Device(s) | Sennheiser, Custom 5.1 |

| Power Supply | Don't even remember |

Very true, but the topic was gaming.

Add in background tasks and you've lost 5% more. I kept checking and rechecking benchmarks on a gaming PC I built that had all of their crap installed (for gaming only). It literally lost 5% in multithreaded tests. I guess if you don't use anything at all, but discord and other shit adds up.

6 cores are basically yesteryear. 6 cores without SMT are dead to me.

- Joined

- Aug 21, 2013

- Messages

- 2,243 (0.51/day)

- Location

- Estonia

| System Name | DarkStar |

|---|---|

| Processor | AMD Ryzen 7 5800X3D |

| Motherboard | Gigabyte X570 Aorus Master 1.0 (BIOS F39g) |

| Cooling | Arctic Liquid Freezer II 420mm AIO (rev4) |

| Memory | 4x8GB Patriot Viper DDR4 4400C19 @ 3733Mhz 14-14-13-27 1T |

| Video Card(s) | Gigabyte Radeon RX 9070 XT Gaming OC 16GB GDDR6 @ 3400Mhz Core/22Gbps Mem |

| Storage | 1TB Samsung 990 Pro (OS);2TB Samsung PM9A1;4TB XPG S70 Blade (Games);14TB WD UltraStar HC530 (Video) |

| Display(s) | 27" LG UltraGear 27GS85Q-B @ 2560x1440 @ 200Hz, Nano-IPS |

| Case | be quiet! Dark Base Pro 900 Rev.2 |

| Audio Device(s) | SteelSeries Arctis Nova Pro Wireless |

| Power Supply | 1000W Seasonic PRIME Ultra Titanium;600W APC SMT750i UPS |

| Mouse | Logitech G604 |

| Keyboard | Logitech G910 Orion Spark |

| Software | Windows 11 Pro x64 24H2 (Build 26100.4351) |

There should be no performance difference between disabling SMT and assigning cores 0-11 to a specific process with Process Lasso. So I don't think there is any need to disable SMT.
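A minimal Python sketch of how such an affinity mask could be built, for anyone who wants to try this. It assumes SMT siblings are numbered next to each other (logical processors 2k and 2k+1 share physical core k), which is common but not guaranteed, so check the topology on your own system. Under that assumed numbering, logical processors 0-11 would cover only six physical cores, so the sketch picks one logical processor per core instead. The function name is hypothetical.

```python
def one_thread_per_core_mask(physical_cores: int, threads_per_core: int = 2) -> int:
    """Build a bitmask that selects the first logical processor of each core.

    Assumes sibling threads are numbered consecutively, so core k owns
    logical processors k * threads_per_core .. k * threads_per_core + (threads_per_core - 1).
    """
    if physical_cores < 1 or threads_per_core < 1:
        raise ValueError("core and thread counts must be at least 1")
    mask = 0
    for core in range(physical_cores):
        mask |= 1 << (core * threads_per_core)
    return mask


if __name__ == "__main__":
    # 12 cores / 24 threads, as on the Ryzen 9 3900X
    print(hex(one_thread_per_core_mask(12)))  # 0x555555
```

The resulting value can be entered as a CPU affinity mask in tools that accept one; whether this really matches SMT-off performance is the claim under discussion, not something this sketch demonstrates.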

- Joined

- Jun 1, 2011

- Messages

- 4,988 (0.96/day)

- Location

- in a van down by the river


> Add in background tasks and you've lost 5% more. I kept checking and rechecking benchmarks on a gaming PC I built that had all of their crap installed (for gaming only). It literally lost 5% in multithreaded tests. I guess if you don't use anything at all, but discord and other shit adds up.
>
> 6 cores are basically yesteryear. 6 cores without SMT are dead to me.

When you built said gaming PC and it delivered 95FPS instead of 100FPS, did the PC collapse onto itself in sheer embarrassment causing a mini-implosion...cause that would be cool.

P.S. I feel the same way about guys whose neck tie color matches their dress shirt color as you do about 6 cores without SMT...I feel your pain.


- Joined

- May 22, 2015

- Messages

- 14,421 (3.87/day)

| Processor | Intel i5-12600k |

|---|---|

| Motherboard | Asus H670 TUF |

| Cooling | Arctic Freezer 34 |

| Memory | 2x16GB DDR4 3600 G.Skill Ripjaws V |

| Video Card(s) | EVGA GTX 1060 SC |

| Storage | 500GB Samsung 970 EVO, 500GB Samsung 850 EVO, 1TB Crucial MX300 and 2TB Crucial MX500 |

| Display(s) | Dell U3219Q + HP ZR24w |

| Case | Raijintek Thetis |

| Audio Device(s) | Audioquest Dragonfly Red :D |

| Power Supply | Seasonic 620W M12 |

| Mouse | Logitech G502 Proteus Core |

| Keyboard | G.Skill KM780R |

| Software | Arch Linux + Win10 |

> When multithreading really counts, an SMT thread acts as half a core: in rendering/baking tests, 12 cores/24 threads is on average 50% faster than 12 cores. That's really impressive imo.

That's not accurate. It can act as anything in between half a core (worst case scenario, completely bandwidth starved) and a full core (best case scenario, in-place, computing intensive tasks).
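To put numbers on that range, here is a small Python sketch. It assumes a simple linear model in which each core's second thread adds a fraction `smt_yield` of a core. The function name and the model are illustrative assumptions, and real workloads will not scale this cleanly.

```python
def core_equivalents(physical_cores: int, smt_yield: float) -> float:
    """Estimate throughput in 'core equivalents' for a 2-way SMT CPU.

    smt_yield is the extra throughput the second thread adds per core:
    0.5 means the SMT thread behaves like half a core, 1.0 like a full core.
    """
    if physical_cores < 1:
        raise ValueError("physical_cores must be at least 1")
    if not 0.0 <= smt_yield <= 1.0:
        raise ValueError("smt_yield must be between 0.0 and 1.0")
    return physical_cores * (1.0 + smt_yield)


if __name__ == "__main__":
    print(core_equivalents(12, 0.5))  # 18.0, i.e. 50% faster than 12 cores
    print(core_equivalents(12, 1.0))  # 24.0, the theoretical 2x case
```

In this model the 50% figure from the quoted post corresponds to `smt_yield = 0.5`, and the 2x/100% case corresponds to `smt_yield = 1.0`.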

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

> That's not accurate. It can act as anything in between half a core (worst case scenario, completely bandwidth starved) and a full core (best case scenario, in-place, computing intensive tasks).

Can you link to testing that shows HT/SMT with 100%/2x gains over non-HT loads?

- Joined

- May 22, 2015

- Messages

- 14,421 (3.87/day)


> Can you link to testing that shows HT/SMT with 100%/2x gains over non-HT loads?

Nope.

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

I didn't think that point was true... it was always somewhere around that 50% mark give or take many percentage points depending on the testing. I've never seen it hit 2x/100% more before... figured perhaps you have since you said as much.

- Joined

- May 22, 2015

- Messages

- 14,421 (3.87/day)


> I didn't think that point was true... it was always somewhere around that 50% mark give or take many percentage points depending on the testing. I've never seen it hit 2x/100% more before... figured perhaps you have since you said as much.

No, I said that based on how HT works. As I told you, you'd get that under a specific kind of workload (let's not kid ourselves, we're still talking some missing hardware here, not a full core), but I don't know of benchmarks that measure that.

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

> No, I said that based on how HT works. As I told you, you'd get that under a specific kind of workload (let's not kid ourselves, we're still talking some missing hardware here, not a full core), but I don't know of benchmarks that measure that.

And I'm simply saying that doesn't happen (2x/100%)... Over the last 10-15 years I have been doing this, not one test I have seen puts it at that value. If you run into one, LMK so I can update the 'internal database'.

- Joined

- May 22, 2015

- Messages

- 14,421 (3.87/day)


> And I'm simply saying that doesn't happen (2x/100%)... Over the last 10-15 years I have been doing this, not one test I have seen puts it at that value. If you run into one, LMK so I can update the 'internal database'.

Now that you mentioned it, I could try and write one (God knows when I'll find the time). My PC doesn't have HT, but I think my laptop does.

- Joined

- Dec 31, 2009

- Messages

- 19,431 (3.41/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

ok... GL with that. But theory and application in the real world are clearly different. Hit me up when you can make that or run across a real world 2x result.

- Joined

- Mar 23, 2016

- Messages

- 4,938 (1.44/day)

| Processor | Intel Core i7-13700 PL2 150W |

|---|---|

| Motherboard | MSI Z790 Gaming Plus WiFi |

| Cooling | Cooler Master Hyper 212 Halo Black |

| Memory | G Skill F5-6800J3446F48G 96GB kit |

| Video Card(s) | Gigabyte Radeon RX 9070 GAMING OC 16G |

| Storage | 970 EVO NVMe 500GB, WD850N 2TB |

| Display(s) | Samsung 28” 4K monitor |

| Case | Corsair iCUE 4000D RGB AIRFLOW |

| Audio Device(s) | EVGA NU Audio, Edifier Bookshelf Speakers R1280 |

| Power Supply | TT TOUGHPOWER GF A3 Gold 1050W |

| Mouse | Logitech G502 Hero |

| Keyboard | Logitech G G413 Silver |

| Software | Windows 11 Professional v24H2 |

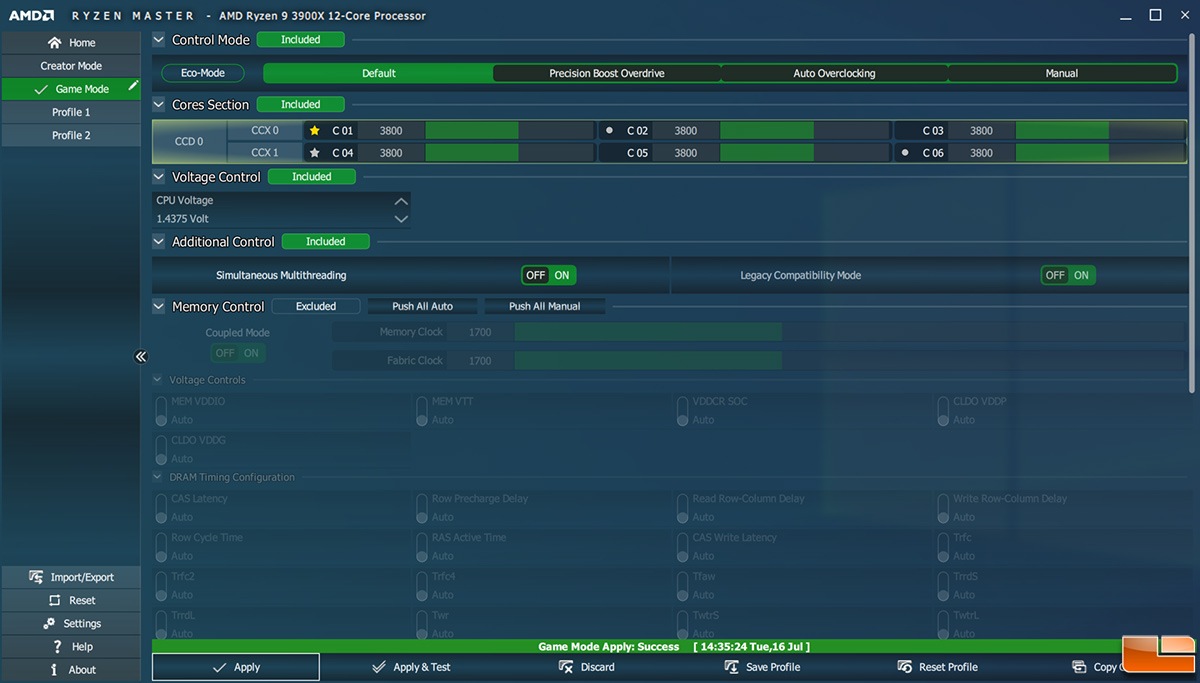

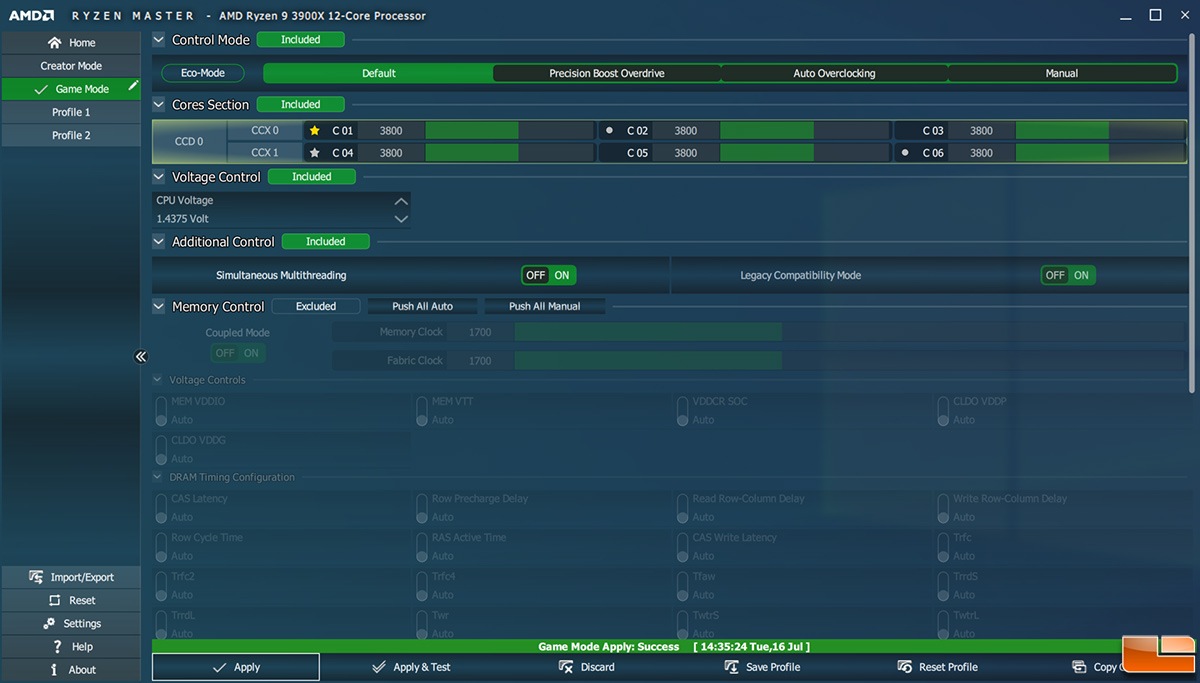

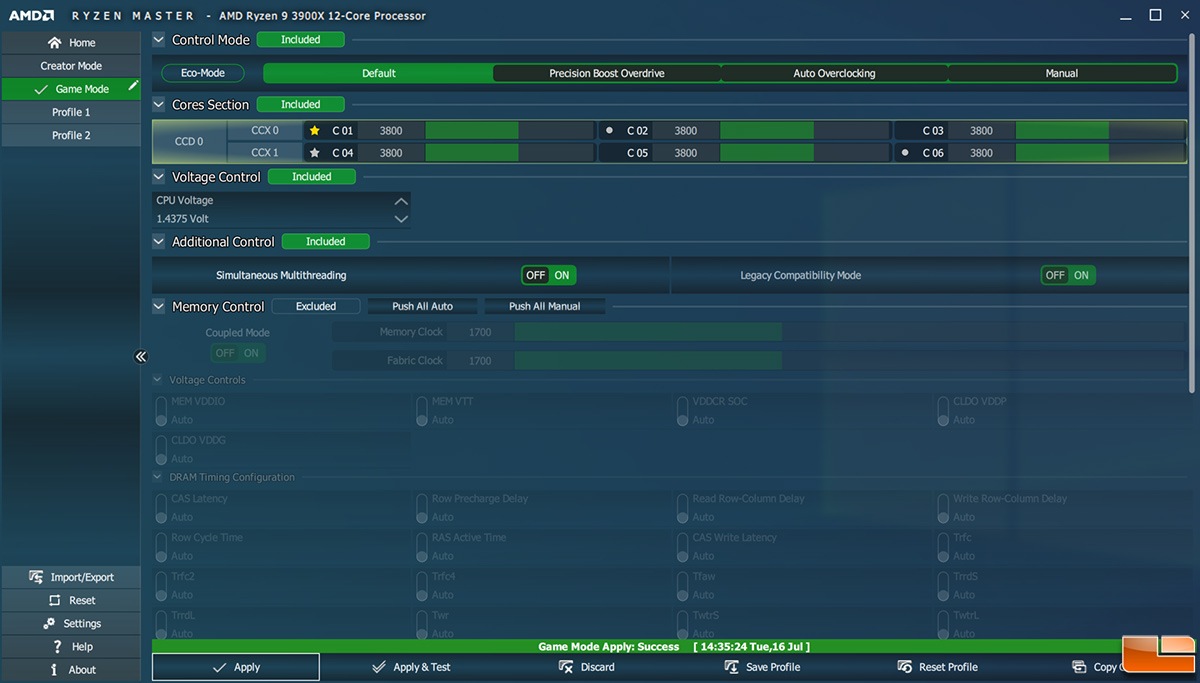

In other news Game Mode in Ryzen Master still serves a purpose. Even after AMD said Windows 10 v1903 was now optimized for all Zen processors with faster clock ramping and improved topology awareness.

Positive result:

Negative result:

Game Mode Might Boost Performance On AMD Ryzen 9 3900X Processors - Legit Reviews

www.legitreviews.com

- Joined

- May 22, 2015

- Messages

- 14,421 (3.87/day)


> In other news Game Mode in Ryzen Master still serves a purpose. Even after AMD said Windows 10 v1903 was now optimized for all Zen processors with faster clock ramping and improved topology awareness.
>
> Game Mode Might Boost Performance On AMD Ryzen 9 3900X Processors - Legit Reviews (www.legitreviews.com)

I hope that was sarcastic, because that link says:

> When we ran other game titles with ‘Game Mode’ enabled on the 3900X we found that performance dropped. We only ran Rainbow Six: Siege, Far Cry 5 and Metro Exodus in our CPU test suite and of those titles only Metro Exodus showed a performance improvement with Game Mode enabled. It is likely that 99% of game titles run better in ‘Creator Mode’ and we hope that is the case.
