If it was strictly RT games I wanted to play at 1080p/1440p, then I would go Intel, truth be told. But in the real world, is it worth sacrificing performance in the other near-100% of games for the sake of the 4 or 5 games that some cards do really well in (truth be told, that's all it is)?

IIRC both of you were arguing about RT speed, not raster. Alchemist was delayed™ to death, and they settled for competing in the lower mid-range. Here's a reminder of the context in which Arc was launched: the RX 6800 XT has always been waaaaaay out of reach for Arc Alchemist, and they even thought that they needed to undercut the RX 6700 XT to have a chance... In the current market Arc is just lost: many European stores have even stopped selling them altogether, and the few that are left are so badly priced it's not even funny.

An RX 6800 is currently 50€ more expensive than an A770. Yup. You read that right.

View attachment 346102

We know that Intel fumbled when it comes to general rendering performance: in theory the A770 should have been faster than it is, but it failed to deliver. However, TPU's review showed that the hit Arc takes when RT is on is lower than RDNA 2's and not that far behind Ampere's. Now, that doesn't mean that Battlemage will manage to battle it out with Blackwell and RDNA 4, but if they can figure out the general rendering performance, they might realistically target overall parity with the Ada upper midrange (and Nvidia even made the job easier for them).

AMD to Redesign Ray Tracing Hardware on RDNA 4

- Thread starter btarunr

- Start date

- Joined

- Dec 29, 2017

- Messages

- 5,504 (2.05/day)

- Location

- Swansea, Wales

| System Name | Silent/X1 Yoga/S25U-1TB |

|---|---|

| Processor | Ryzen 9800X3D @ 5.4ghz AC 1.18 V, TG AM5 High Performance Heatspreader/1185 G7/Snapdragon 8 Elite |

| Motherboard | ASUS ROG Strix X870-I, chipset fans replaced with Noctua A14x25 G2 |

| Cooling | Optimus Block, HWLabs Copper 240/40 x2, D5/Res, 4x Noctua A12x25, 1x A14G2, Conductonaut Extreme |

| Memory | 64 GB Dominator Titanium White 6000 MT, 130 ns tRFC, active cooled, TG Putty Pro |

| Video Card(s) | RTX 3080 Ti Founders Edition, Conductonaut Extreme, 40 W/mK 3D Graphite pads, Corsair XG7 Waterblock |

| Storage | Intel Optane DC P1600X 118 GB, Samsung 990 Pro 2 TB |

| Display(s) | 34" 240 Hz 3440x1440 34GS95Q LG MLA+ W-OLED, 31.5" 165 Hz 1440P NanoIPS Ultragear, MX900 dual VESA |

| Case | Sliger SM570 CNC Alu 13-Litre, 3D printed feet, TG Minuspad Extreme, LINKUP Ultra PCIe 4.0 x16 White |

| Audio Device(s) | Audeze Maxwell Ultraviolet w/upgrade pads & Leather LCD headband, Galaxy Buds 3 Pro, Razer Nommo Pro |

| Power Supply | SF1000 Plat, 13 A transparent custom cables, Sentinel Pro 1500 Online Double Conversion UPS w/Noctua |

| Mouse | Razer Viper V3 Pro 8 KHz Mercury White w/Pulsar Supergrip tape, Razer Atlas, Razer Strider Chroma |

| Keyboard | Wooting 60HE+ module, TOFU-R CNC Alu/Brass, SS Prismcaps W+Jellykey, LekkerL60 V2, TLabs Leath/Suede |

| Software | Windows 11 IoT Enterprise LTSC 24H2 |

| Benchmark Scores | Legendary |

Disingenuous.

4070 Ti S is cheaper than the 7900XTX and faster in RT across all resolutions.

4070 Ti 12 faster at all resolutions except 4K, but in path tracing is significantly faster even at 4K.

Edit: the Arc GPUs are literally chart topping in TPU performance/dollar too.

Raster is irrelevant in this discussion.

Ah yes of course, when Nvidia has faster GPUs in RT they can charge a disproportionally large premium over AMD and it's all cool, if AMD has a faster GPU in RT than Intel they can't because uhm...uhm...

Ah, shucks, I forgot about the "Nvidia good, AMD bad" infallible logic once again, my bad.

Of course you are. The 7900 XTX is still faster in raster, and if you want a GPU from Nvidia that can comfortably beat it at that metric, you actually have to go all the way up to the eye-watering $1600 4090.

I know you are totally obsessed with RT, and if it were up to you literally nothing else would matter, but that's not how it works in the real world. AMD can ask for a premium for better raster performance in the same way Nvidia can for RT. It must be real hard to wrap your head around the concept, but it's actually a real phenomenon.

The thing is Intel sucks in both raster and RT so you shouldn't be so bewildered that AMD cards are much more expensive than Intel's.

- Joined

- Jun 2, 2017

- Messages

- 9,828 (3.40/day)

| System Name | Best AMD Computer |

|---|---|

| Processor | AMD 7900X3D |

| Motherboard | Asus X670E E Strix |

| Cooling | In Win SR36 |

| Memory | GSKILL DDR5 32GB 5200 30 |

| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |

| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |

| Display(s) | GIGABYTE FV43U |

| Case | Corsair 7000D Airflow |

| Audio Device(s) | Corsair Void Pro, Logitch Z523 5.1 |

| Power Supply | Deepcool 1000M |

| Mouse | Logitech g7 gaming mouse |

| Keyboard | Logitech G510 |

| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |

| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

For a £300+ card I want to be able to use whatever resolution and settings I please.

Besides, GPUs are traditionally tested at higher resolutions, and CPUs at lower resolutions, to avoid bottlenecks from other parts of the system.

It's true, 1080p/1440p is the range these cards can comfortably operate with settings maxed. However the A770 in particular is decent as a productivity card, and doesn't mind higher resolutions for gaming either.

Wow. This is not 2016. A GPU for $300 today is only good enough for 1080p. Then you claim that an Arc A770 is better at $300 than the rest. Do you realize how foolish this argument is? RDNA4 is still a matter of opinion, and it would not matter if its performance in RT was as good as a 4090's. That notion about resolutions is foolish when the market already dictates GPU hierarchy by pricing at retail.

- Joined

- Apr 30, 2020

- Messages

- 1,116 (0.61/day)

| System Name | S.L.I + RTX research rig |

|---|---|

| Processor | Ryzen 7 5800X 3D. |

| Motherboard | MSI MEG ACE X570 |

| Cooling | Corsair H150i Cappellx |

| Memory | Corsair Vengeance pro RGB 3200mhz 32Gbs |

| Video Card(s) | 2x Dell RTX 2080 Ti in S.L.I |

| Storage | Western digital Sata 6.0 SDD 500gb + fanxiang S660 4TB PCIe 4.0 NVMe M.2 |

| Display(s) | HP X24i |

| Case | Corsair 7000D Airflow |

| Power Supply | EVGA G+1600watts |

| Mouse | Corsair Scimitar |

| Keyboard | Cosair K55 Pro RGB |

In terms of efficiency, AMD's RT cores/units/accelerators are 8-10% faster in RDNA3 than they are in RDNA2.

On the technical side, AMD's RDNA3 RT cores/units/accelerators are starved for data, so I can easily understand how changes there could instantly give them an almost 25% increase in possible performance.

On the Nvidia side, they may have more generations of RT out, but their RT gains have been a steady 6% increase in efficiency per generation so far.

- Joined

- Nov 4, 2005

- Messages

- 12,176 (1.71/day)

| System Name | Compy 386 |

|---|---|

| Processor | 7800X3D |

| Motherboard | Asus |

| Cooling | Air for now..... |

| Memory | 64 GB DDR5 6400Mhz |

| Video Card(s) | 7900XTX 310 Merc |

| Storage | Samsung 990 2TB, 2 SP 2TB SSDs, 24TB Enterprise drives |

| Display(s) | 55" Samsung 4K HDR |

| Audio Device(s) | ATI HDMI |

| Mouse | Logitech MX518 |

| Keyboard | Razer |

| Software | A lot. |

| Benchmark Scores | Its fast. Enough. |

I feel more of an AI RT compute unit coming.

And I don’t want it. AI could be used to enhance games but instead it’s a crappy Google for people who can’t Google and looks through your photos to turn you in for wrong think.

- Joined

- Jan 14, 2019

- Messages

- 15,798 (6.86/day)

- Location

- Midlands, UK

| System Name | My second and third PCs are Intel + Nvidia |

|---|---|

| Processor | AMD Ryzen 7 7800X3D @ 45 W TDP Eco Mode |

| Motherboard | MSi Pro B650M-A Wifi |

| Cooling | be quiet! Shadow Rock LP |

| Memory | 2x 24 GB Corsair Vengeance DDR5-4800 |

| Video Card(s) | PowerColor Reaper Radeon RX 9070 XT |

| Storage | 2 TB Corsair MP600 GS, 4 TB Seagate Barracuda |

| Display(s) | Dell S3422DWG 34" 1440 UW 144 Hz |

| Case | Corsair Crystal 280X |

| Audio Device(s) | Logitech Z333 2.1 speakers, AKG Y50 headphones |

| Power Supply | 750 W Seasonic Prime GX |

| Mouse | Logitech MX Master 2S |

| Keyboard | Logitech G413 SE |

| Software | Bazzite (Fedora Linux) KDE Plasma |

With current prices and performance levels, midrange is more than enough for me.

Two generations too late for good upscaling or RT, so only targeting mid-range. Fingers crossed for RDNA 5, if it's called that.

I wonder if Battlemage will have a higher end card than RDNA 4. Intel's upscaler and RT tech is already ahead.

I don't think people realise the work involved in getting RT/PT working in games. There are two PT games at the moment that had the full development weight of Nvidia behind them. It really does need that amount of work and lots of money to do right. Honestly, you are looking at three years at least before that becomes the norm. The level of RT/PT you're seeing now is what will continue for the near future.

- Joined

- Nov 26, 2021

- Messages

- 1,879 (1.50/day)

- Location

- Mississauga, Canada

| Processor | Ryzen 7 5700X |

|---|---|

| Motherboard | ASUS TUF Gaming X570-PRO (WiFi 6) |

| Cooling | Noctua NH-C14S (two fans) |

| Memory | 2x16GB DDR4 3200 |

| Video Card(s) | Reference Vega 64 |

| Storage | Intel 665p 1TB, WD Black SN850X 2TB, Crucial MX300 1TB SATA, Samsung 830 256 GB SATA |

| Display(s) | Nixeus NX-EDG27, and Samsung S23A700 |

| Case | Fractal Design R5 |

| Power Supply | Seasonic PRIME TITANIUM 850W |

| Mouse | Logitech |

| VR HMD | Oculus Rift |

| Software | Windows 11 Pro, and Ubuntu 20.04 |

That is true. However, like AMD, Intel has built its past GPUs off previous generations. Therefore, it is likely that Battlemage will be similar to Alchemist. People expecting huge performance increases are likely to be disappointed. Now, Alchemist is very good at ray tracing, and even if the best Battlemage can do is match the 4070, it is likely to match the 4070 in all respects. On the other hand, the rumoured specifications of Navi 48, the largest RDNA4 SKU, indicate that it will probably match or surpass the 4070 Ti Super in raster, but probably fall behind in ray tracing.

And the 7900 XTX (full chip) is massively more powerful on paper than the 4080S (another full chip), yet it is only slightly ahead, and only in several pure raster scenarios. Same story with, for example, the 4070S vs the 7900 GRE. There's a reason why comparing sheer stats doesn't work between different architectures.

- Joined

- Apr 30, 2020

- Messages

- 1,116 (0.61/day)

| System Name | S.L.I + RTX research rig |

|---|---|

| Processor | Ryzen 7 5800X 3D. |

| Motherboard | MSI MEG ACE X570 |

| Cooling | Corsair H150i Cappellx |

| Memory | Corsair Vengeance pro RGB 3200mhz 32Gbs |

| Video Card(s) | 2x Dell RTX 2080 Ti in S.L.I |

| Storage | Western digital Sata 6.0 SDD 500gb + fanxiang S660 4TB PCIe 4.0 NVMe M.2 |

| Display(s) | HP X24i |

| Case | Corsair 7000D Airflow |

| Power Supply | EVGA G+1600watts |

| Mouse | Corsair Scimitar |

| Keyboard | Cosair K55 Pro RGB |

Does path tracing completely remove all uses of any rasterization?

Nope, not at all. Everything else apart from AI, game mechanics and lighting in an RT/PT game is raster. In a normal non-RT/PT game the lighting is raster too. All RT/PT is doing is taking over how the lighting is done and how it reacts to the textures and materials applied to the 3D models. You see, even the textures and materials are raster. All that happens is that maps get applied to those textures and materials. You have common maps like colour maps, transparency maps, specular maps and bump maps. This is where shader programmers earn their money. They work with these maps to get the effects you get in games.

I think you would all agree, people that turn around and say the game is fully RT/PT do not know what they are talking about. That cannot happen. You need models, textures, materials, the basic building blocks of a game are all raster.

As an example, download Blender (a free ray tracing program). Put any shape you like on the screen. Raster. Texture or put a material on it. Raster. Put a light in there. Render. Only the light affecting the model and material is RT.
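To make that split a bit more concrete, here is a minimal Python sketch of one way to read it: the material inputs (a colour value standing in for a colour map) are the same either way, and the only traced part is a shadow ray that decides whether the light reaches the surface. The scene, the names (`ray_hits_sphere`, `shade_point`) and all the numbers are made up for illustration; this is not how any particular engine or Blender does it.

```python
import math

def ray_hits_sphere(origin, direction, centre, radius):
    """Return True if the ray origin + t*direction (t > 0) intersects the sphere."""
    ox, oy, oz = (origin[i] - centre[i] for i in range(3))
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the ray misses the sphere entirely
    t_far = (-b + math.sqrt(disc)) / (2.0 * a)
    return t_far > 1e-6  # some part of the sphere lies in front of the origin

# Hypothetical scene: flat ground, one sphere floating above it, light straight overhead.
ALBEDO = (0.8, 0.2, 0.2)      # what a colour map would supply at this surface point
NORMAL = (0.0, 1.0, 0.0)      # ground faces up
TO_LIGHT = (0.0, 1.0, 0.0)    # direction from the surface towards the light
OCCLUDER = ((0.0, 2.0, 0.0), 0.5)  # sphere centre and radius

def shade_point(point):
    """Lambert shading; only the visibility term comes from tracing a ray."""
    n_dot_l = max(0.0, sum(n * l for n, l in zip(NORMAL, TO_LIGHT)))
    visible = 0.0 if ray_hits_sphere(point, TO_LIGHT, *OCCLUDER) else 1.0
    return tuple(channel * n_dot_l * visible for channel in ALBEDO)

print(shade_point((0.0, 0.0, 0.0)))  # directly under the sphere
print(shade_point((3.0, 0.0, 0.0)))  # off to the side
```

The colour and normal would look the same in a pure raster renderer; what changes is that the shadow is decided by an actual ray test instead of a shadow map or a baked approximation.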

- Joined

- Jun 6, 2021

- Messages

- 838 (0.59/day)

| System Name | Red Devil |

|---|---|

| Processor | AMD 9950X3D- Granite Ridge - GNR-B0 |

| Motherboard | MSI MPG Carbon WIFI (MS-7E49) |

| Cooling | NZXT Kraken Z73 360mm; 14 x Corsair QL 120mm RGB Case Fans |

| Memory | G.SKill Trident Z Neo 5 64GB DDR5-6000 CL26 1.40v |

| Video Card(s) | Asus Astral 5090 OC Edition 176 ROPs (lol) |

| Storage | 1 x Crucial Gen 5 T705 2GB; 1 x WD Black SN850X 4TB; 1 x Samsung SSD 870EVO 2TB |

| Display(s) | 1 x MSI MPG 321URX QD-OLED 4K; 2 x Asus VG27AQL1A |

| Case | Corsair Obsidian 1000D |

| Audio Device(s) | Raz3r Nommo V2 Pro ; Steel Series Arctis Nova Pro X Wireless (XBox Version) |

| Power Supply | HX1500i Digital ATX - 1500w - 80 Plus Titanium |

| Mouse | Razer Basilisk V3 |

| Keyboard | Razer Huntsman V2 - Optical Gaming Keyboard |

| Software | Windows 11 |

Man, I would love to see that too. Unfortunately, AMD is not making a card to compete with the 5090. I've been cheering on AMD to go back to the ATi days, because that 9700 Pro was a beast of a card. We'll see what happens moving forward with RDNA 5.

Would love to see AMD pull a 9700 Pro-level performance on Nvidia for RDNA4, but I'm not too hopeful.

Both companies show leadership in different areas, with Nvidia being more successful in more of those areas. The majority of buyers buy into one feature set and a minority into the alternative feature set. And that's fine, as buyers make their own choices based on whatever they perceive and think, erroneously or not, is relevant for them.

As you said, hoping to see some leadership from AMD in the GPU space would be a good thing.

For example, I cannot care less as to which company has better RT. It's not a selling point I'd consider when buying GPU. It's like asking which colour of apple I'd prefer to chew. I care more about seeing content in good HDR quality on OLED display and having enough VRAM for a few good years so that GPU does not choke too soon in titles I play.

AMD leads the complex and bumpy transition to chiplet-based GPUs, which is necessary for high-NA EUV production in future and is often not appreciated enough. Those GPU chiplets do not rain from clouds. They also offer the new DP 2.1 video interface and more VRAM on several models. Those features also appeal to some buyers.

Although Nvidia leads in various aspects of client GPU, they have also managed to slow down the transition to DP 2.1 ports in entire monitor industry for at least two years. They abandoned the leadership in this area, which they had in 2016 when they introduced DP 1.4. The reason? They decided to go cheap on PCB and recycle video traces on the main board from 3000 into 4000 series, instead of innovating and prompting monitor industry to accelerate the transition. The result is limited video pipeline on halo cards that is not capable of driving Samsung's 57-inch 8K/2K/240Hz monitor beyond 120Hz. This will not be fixed until 5000 series. Also, due to being skimpy on VRAM, several classes of cards with 8GB become obsolete quicker in growing number of titles. For example, paltry 8GB+RT on then very expensive 3070/Ti series have become a joke just ~3 years later.

AMD needs to work on several features, there is no doubt about it, but it's not that Nvidia has nothing to fix or address, including basic hardware on PCB.

- Joined

- Nov 22, 2023

- Messages

- 552 (1.05/day)

AMD tends to follow the Alton Brown GPU design philosophy: no single-use tools.

RT in AMD is handled by a bulked-out SP, and they have one of these SPs in each CU. It's a really elegant design philosophy that sidesteps the need for specialized hardware and keeps their die sizes a bit more svelte than Nvidia's. When the GPU isn't doing an RT workload, the SP is able to contribute to rendering the rasterized scene, unlike on Nvidia and Intel.

However they get a double penalty when rendering RT because they lose raster performance in order to actually do the RT calculations unlike Intel and Nvidia who have dedicated hardware for it.

It would be interesting if not only is the RT unit redesigned, but there are maybe two or more of them dedicated to a CU. Depending on the complexity of the RT the mix of SPs dedicated to RT calculations or Raster calculations could adjust on the fly...
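A toy way to picture the "double penalty" described above, in Python. This is only a back-of-the-envelope model and every number in it is hypothetical: it assumes a frame needs some raster time plus some RT time, and an `overlap` factor says how much of the RT work can run alongside raster on separate hardware (0.0 for fully shared units, 1.0 for ideal dedicated units). Real GPUs are nowhere near this simple.

```python
def frame_time_ms(raster_ms: float, rt_ms: float, overlap: float) -> float:
    """Frame time when a fraction `overlap` of the RT work hides behind raster work."""
    return raster_ms + rt_ms * (1.0 - overlap)

def fps_loss(raster_ms: float, rt_ms: float, overlap: float) -> float:
    """Fraction of the RT-off frame rate lost once RT is switched on."""
    return 1.0 - raster_ms / frame_time_ms(raster_ms, rt_ms, overlap)

# Hypothetical workload: 10 ms of raster work and 6 ms of RT work per frame.
RASTER_MS, RT_MS = 10.0, 6.0

for label, overlap in [("shared units", 0.0), ("partly dedicated", 0.5), ("ideal dedicated", 1.0)]:
    loss = fps_loss(RASTER_MS, RT_MS, overlap)
    print(f"{label:>16}: {frame_time_ms(RASTER_MS, RT_MS, overlap):5.1f} ms/frame, {loss:.1%} FPS lost")
```

In this model the shared design pays for the RT work in full on top of the raster work, which is the penalty the post is pointing at; the flip side, also from the post, is that the shared unit is not sitting idle when RT is off.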

- Joined

- May 3, 2018

- Messages

- 2,881 (1.13/day)

I think that's the point of this article. AMD has realised it ultimately can't rely on software and shaders any longer to compete. They need some specialised ASIC to help handle RT operations. I'm not getting into this argument over whether RT is a waste of time, because alas that ship has sailed and Nvidia is calling the shots. Sony's embracing it, Microsoft's embracing it, Intel's embracing it, Samsung's embracing it; AMD just has to live with this fact and get on board. Hopefully, if they can deliver a compelling update, it stops the mindless fanboy bashing they get over something most people don't even use anyway but hold over them. I'm not against RT, but with a 6800 XT I've never enabled it, and frankly I don't have any interest in the few games that seem to promote it, like Cyberpunk. A lot of the games I've seen demoed for RT look worse to me.

The major problem with the AMD cards is the way they use the shaders. Where Nvidia has dedicated tensor and RT cores to do upscaling, ray tracing, etc., AMD uses the shaders it has and dual-issues them. That is very good for light RT games, as their shaders are good, probably the best pure shaders out there, but when RT gets heavy they lose about 20-40% from having to do both the RT calculations and the raster work. Dedicated shaders or cores will help a lot because, like I said, the shaders are excellent: power-wise not far off 4090 performance when used correctly. But AMD do like their programmable shaders.

Personally I'm actually hoping AMD greatly improve AI performance in the GPU as I'm using AI software that could greatly benefit from this.

- Joined

- Nov 11, 2016

- Messages

- 3,624 (1.17/day)

| System Name | The de-ploughminator Mk-III |

|---|---|

| Processor | 9800X3D |

| Motherboard | Gigabyte X870E Aorus Master |

| Cooling | DeepCool AK620 |

| Memory | 2x32GB G.SKill 6400MT Cas32 |

| Video Card(s) | Asus RTX4090 TUF |

| Storage | 4TB Samsung 990 Pro |

| Display(s) | 48" LG OLED C4 |

| Case | Corsair 5000D Air |

| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |

| Power Supply | Corsair HX1200 |

| Mouse | Razor Death Adder v3 |

| Keyboard | Razor Huntsman V3 Pro TKL |

| Software | win11 |

Well, let's hope the RX 8000 series isn't going to lose 50-65% of its FPS just by enabling RTGI + Reflections + RTAO; combined with AI upscaling, RT will then be a lot more palatable.

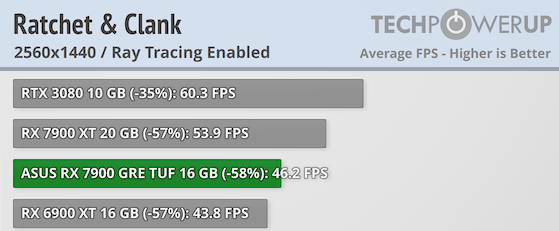

For example, the 7900 GRE loses 58% of its FPS with RT enabled in Ratchet & Clank.

If the impact of RT is minimized to 35% (like with Ampere/Ada), the 7900GRE would be getting 71FPS, a very comfortable FPS for 1440p or 4K with Upscaling+Frame Generation.

RT GI, Reflections, Shadows, Ambient Occlusion are superior to the rasterized version of them, so when anyone asked for better visual, RT was always the answer. For people who don't care about graphics, then RT is not for them.
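For anyone who wants to check the arithmetic in the post above, here is a small Python sketch. It only uses the three figures quoted there (a 58% loss, a hypothetical 35% loss, and the 71 FPS result); the RT-off baseline is back-calculated from those, not read off the chart, so treat it as an implied value rather than a measurement. The function names are made up for this example.

```python
def fps_after_rt(baseline_fps: float, rt_cost: float) -> float:
    """FPS left after RT removes the fraction `rt_cost` (0..1) of the RT-off frame rate."""
    return baseline_fps * (1.0 - rt_cost)

def baseline_from_rt(rt_fps: float, rt_cost: float) -> float:
    """Invert the above: the RT-off frame rate implied by an RT-on result."""
    return rt_fps / (1.0 - rt_cost)

# Figures quoted in the post: 71 FPS would be the result at a 35% RT cost.
baseline = baseline_from_rt(71.0, 0.35)   # implied RT-off frame rate
current = fps_after_rt(baseline, 0.58)    # what a 58% cost leaves

print(f"implied RT-off baseline: {baseline:.1f} FPS")
print(f"RT on at a 58% cost:     {current:.1f} FPS")
print(f"RT on at a 35% cost:     {fps_after_rt(baseline, 0.35):.1f} FPS")
```

So under the post's own numbers, cutting the RT cost from 58% to 35% moves the card from the mid-40s to about 71 FPS without touching raster performance at all.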

- Joined

- Nov 22, 2023

- Messages

- 552 (1.05/day)

I would honestly say GI and AO are qualitatively better with RT.

Shadows and reflections are more a matter of preference, and it's a crapshoot whether people can even tell the difference, but GI and AO are basically 100% better with RT.

- Joined

- Sep 28, 2005

- Messages

- 3,441 (0.48/day)

- Location

- Canada

| System Name | Alienware R10 Rebuild |

|---|---|

| Processor | Ryzen 5700X3D |

| Motherboard | Alienware Mobo |

| Cooling | AIO (Alienware) |

| Memory | 2x16GB GSkill Ripjaws 3600MT/s |

| Video Card(s) | Dell RTX 3080 |

| Storage | 1x 2TB NVME XPG GAMMIX S70 BLADE |

| Display(s) | LG 32" 1440p |

| Case | Alienware R10 |

| Audio Device(s) | Onboard |

| Power Supply | 1000W Dell PSU |

| Mouse | Steelseries |

| Keyboard | Blackweb Walmart Special Mechanical |

| Software | Windows 11 |

I dunno if it has been said or not.

But do you guys remember PhysX and HairWorks? How AMD struggled or couldn't operate with them? I mean, there were dedicated cards for it. Heck, I had one for that space bugs game on that cold planet; I can't remember the name. But yeah, it was used heavily and AMD couldn't work with it. Had to get another card.

Anyway, what I am getting at is that AMD is late to the game, as usual. RT is the new Physx and Hairworks. Even bigger actually. And a game changer to lighting. Hell, it is fantastic for horror games.

I am glad they are now being active in looking into it. But at this point, for midrange, I don't care who it is (AMD, Intel, Nvidia), so long as I can get a cheaper GPU that can implement RT, then I will go for it.

- Joined

- Apr 19, 2018

- Messages

- 1,227 (0.48/day)

| Processor | AMD Ryzen 9 5950X |

|---|---|

| Motherboard | Asus ROG Crosshair VIII Hero WiFi |

| Cooling | Arctic Liquid Freezer II 420 |

| Memory | 32Gb G-Skill Trident Z Neo @3806MHz C14 |

| Video Card(s) | MSI GeForce RTX2070 |

| Storage | Seagate FireCuda 530 1TB |

| Display(s) | Samsung G9 49" Curved Ultrawide |

| Case | Cooler Master Cosmos |

| Audio Device(s) | O2 USB Headphone AMP |

| Power Supply | Corsair HX850i |

| Mouse | Logitech G502 |

| Keyboard | Cherry MX |

| Software | Windows 11 |

It would be so funny to see AMD as fast or faster at RT than nGreedia in just two generations, who we know have been holding back on their RT hardware for years now, with minimal improvements.

It is being looked into. I don't think it will be as quick as Nvidia's implementation yet, but it will be improved. The thing is, these are computationally very heavy calculations; years ago things like this needed render farms to do a single frame. Is AMD slow to the game? Yes, of course, depending on who you speak to and the games you play. As I have said before in another thread, you will still need a lot of raster power: games are getting bigger and more detailed, with more models, textures and materials. Don't just dismiss the amount of raster power you will still need.

- Joined

- Oct 10, 2009

- Messages

- 961 (0.17/day)

| System Name | Desktop |

|---|---|

| Processor | AMD Ryzen 7 5800X3D |

| Motherboard | MAG X570S Torpedo Max |

| Cooling | Corsair H100x |

| Memory | 64GB Corsair CMT64GX4M2C3600C18 @ 3600MHz / 18-19-19-39-1T |

| Video Card(s) | EVGA RTX 3080 Ti FTW3 Ultra |

| Storage | Kingston KC3000 1TB + Kingston KC3000 2TB + Samsung 860 EVO 1TB |

| Display(s) | 32" Dell G3223Q (2160p @ 144Hz) |

| Case | Fractal Meshify 2 Compact |

| Audio Device(s) | ifi Audio ZEN DAC V2 + Focal Radiance / HyperX Solocast |

| Power Supply | Super Flower Leadex V Platinum Pro 1000W |

| Mouse | Razer Viper Ultimate |

| Keyboard | Razer Huntsman V2 Optical (Linear Red) |

| Software | Windows 11 Pro x64 |

I honestly can't see why anyone would be against RT/PT in games...

Once hardware matures and engines make efficient use of the tech, it's going to benefit everyone, including developers. For consumers, games will appear visually more impressive and natural, and have fewer artifacts, distractions, and shortfalls (screen-space technologies, anyone?). For developers it'd mean less hacking together of believable approximations (some implementations are damn impressive, though), less baked lighting, less texture work, and so on.

The upscaling technology that makes all this more viable on current hardware may not be perfect, but it's pretty darn impressive in most cases. Traditionally, I was a fan of more raw rendering and often avoided TAA, many forms of post-processing, motion blur, etc., due to the loss of perceived sharpness in the final image, but DLSS/DLAA have converted me. In most games that support the tech, I've noticed very few visual issues. There is an overall softer finish to the image, but it takes the roughness of aliasing away, makes it feel less 'artificial' and blends the scene for a more cohesive presentation. Even in fast-paced shooters like Call of Duty, artifacting with motion is very limited and does not seem to affect my gameplay and competitiveness. Each release of DLSS only enhances it. I have not experienced Intel's or AMD's equivalent technologies, but I'm sure they're both developing them at full steam.

Yes, I'm an Nvidia buyer, and have been for many generations, but I'm all for competition. Rampant capitalism without competition leads to less choice, higher prices and stagnation. Intel and AMD not only staying in the game, but also innovating and hopefully going toe-to-toe with Nvidia, will benefit everyone, regardless which 'team' you're on.

Technology is what interests me the most, not the brand, so whoever brings the most to the table, for an acceptable price, is who gets my attention...

Could it be that one day there is no rasterisation, or traditional rendering techniques, in games at all, in future? Would AI models just infer everything? Sora is already making videos and Suno generating music, is it much of a stretch to think game rendering is next?

Once hardware matures and engines make efficient use of the tech, it's going to benefit everyone, including developers. For consumers, games will appear visually more impressive and natural, have less artifacts, distractions, and shortfalls (screen-space technologies anyone?), and for developers it'd mean less hacking together of believable approximations (some implementations are damn impressive, though), less baked lighting, texture work, and so on.

The upscaling technology that makes all this more viable on current hardware may not be perfect, but it's pretty darn impressive in most cases. Traditionally, I've was a fan of more raw rendering; often avoided TAA, many forms of post processing, motion blur, etc, due to the loss of perceived sharpness in the final image, but DLSS/DLAA have converted me. In most games that support the tech, I've noticed very little visual issues. There is an overall softer finish to the image, but it takes the roughness of aliasing away - makes it feel less 'artificial' and blends the scene for a more cohesive presentation. Even in fast-paced shooters like Call of Duty, artifacting with motion is very limited and does not seem to affect my gameplay and competitiveness. Each release of DLSS only enhances it. I have not experienced Intel or AMD's equivalent technologies, but I'm sure they're both developing them at full steam.

- Joined: Dec 26, 2006
- Messages: 4,164 (0.62/day)
- Location: Northern Ontario Canada

| Processor | Ryzen 5700x |
|---|---|
| Motherboard | Gigabyte X570S Aero G R1.1 BiosF5g |
| Cooling | Noctua NH-C12P SE14 w/ NF-A15 HS-PWM Fan 1500rpm |
| Memory | Micron DDR4-3200 2x32GB D.S. D.R. (CT2K32G4DFD832A) |
| Video Card(s) | AMD RX 6800 - Asus Tuf |
| Storage | Kingston KC3000 1TB & 2TB & 4TB Corsair MP600 Pro LPX |
| Display(s) | LG 27UL550-W (27" 4k) |
| Case | Be Quiet Pure Base 600 (no window) |
| Audio Device(s) | Realtek ALC1220-VB |
| Power Supply | SuperFlower Leadex V Gold Pro 850W ATX Ver2.52 |
| Mouse | Mionix Naos Pro |
| Keyboard | Corsair Strafe with browns |
| Software | W10 22H2 Pro x64 |

I'd rather have a card with no RT hardware and get a discount in $ proportional to the % fewer transistors.

- Joined: Sep 16, 2018
- Messages: 10,422 (4.30/day)
- Location: Winnipeg, Canada

| Processor | AMD R9 9900X @ booost |
|---|---|
| Motherboard | Asus Strix X670E-F |
| Cooling | Thermalright Phantom Spirit 120 EVO, 2x T30 |
| Memory | 2x 16GB Lexar Ares @ 6400 28-36-36-68 1.55v |
| Video Card(s) | Zotac 4070 Ti Trinity OC @ 3045/1500 |
| Storage | WD SN850 1TB, SN850X 2TB, 2x SN770 1TB |
| Display(s) | LG 50UP7100 |
| Case | Asus ProArt PA602 |
| Audio Device(s) | JBL Bar 700 |
| Power Supply | Seasonic Vertex GX-1000, Monster HDP1800 |
| Mouse | Logitech G502 Hero |
| Keyboard | Logitech G213 |
| VR HMD | Oculus 3 |
| Software | Yes |
| Benchmark Scores | Yes |

What the heck you guys?

For how long have you all been saying RT doesn't matter.. Raster the world!

And now this

Could it be that one day there is no rasterisation, or any traditional rendering technique, in games at all? Would AI models just infer everything? Sora is already making videos and Suno is generating music; is it much of a stretch to think game rendering is next?

Ummm, no. All that will happen is that they will get more complex. Even if AI generates them, the models, textures and materials are still raster. RT/PT only affects the lighting and how the light reacts with materials/textures. What is so hard to grasp about this? It just happens to be the most computationally expensive thing to do in a graphics pipeline. We've had the same way of doing 3D for how many years now, in one of the most forward-looking industries, and the way a 3D game is made is basically the same. The only things that have changed are resolution and texture/material quality; the game engines are better and the hardware has got faster to display it. If a different way of doing things has not been found by now, don't bank on it changing anytime soon.

- Joined: Nov 22, 2023
- Messages: 552 (1.05/day)

What the heck you guys?

For how long have you all been saying RT doesn't matter.. Raster the world!

And now this

I think it's important to be careful with words, and also with point-in-time sampling, etc.

RT still really doesn't "matter" and really hasn't for the last several gens. It doesn't look much, if any, better than raster, and its performance hit is way too high. Every game except Metro Exodus has kept a rasterized lighting system with RT haphazardly thrown on top.

Nothing about RDNA4 is going to change that equation.

That doesn't mean some people don't prefer RT lighting, or just like the idea of the tech even if they aren't really gung-ho about the practical differences vs raster.